Now is the time to reinvent the phone call. Thanks to gen AI, no human will ever again have to make a call. Humans will spend time on the phone only when a call has value to them.

For businesses, this may mean: (1) time and labor cost savings from replacing human callers; (2) the potential to reallocate resources toward generating more revenue; and (3) reduced risk through more compliant and consistent customer experiences.

For consumers, voice agents can provide access to human-grade services without the need to pay or “match with” an actual human. Currently, this includes therapists, coaches, and companions — in the future, this is likely to encompass a much broader range of experiences built around voice. Like most other consumer software, the “winners” will be unpredictable!

Phone calls are an API to the world — and AI takes this to the next level.

Where We See Opportunity

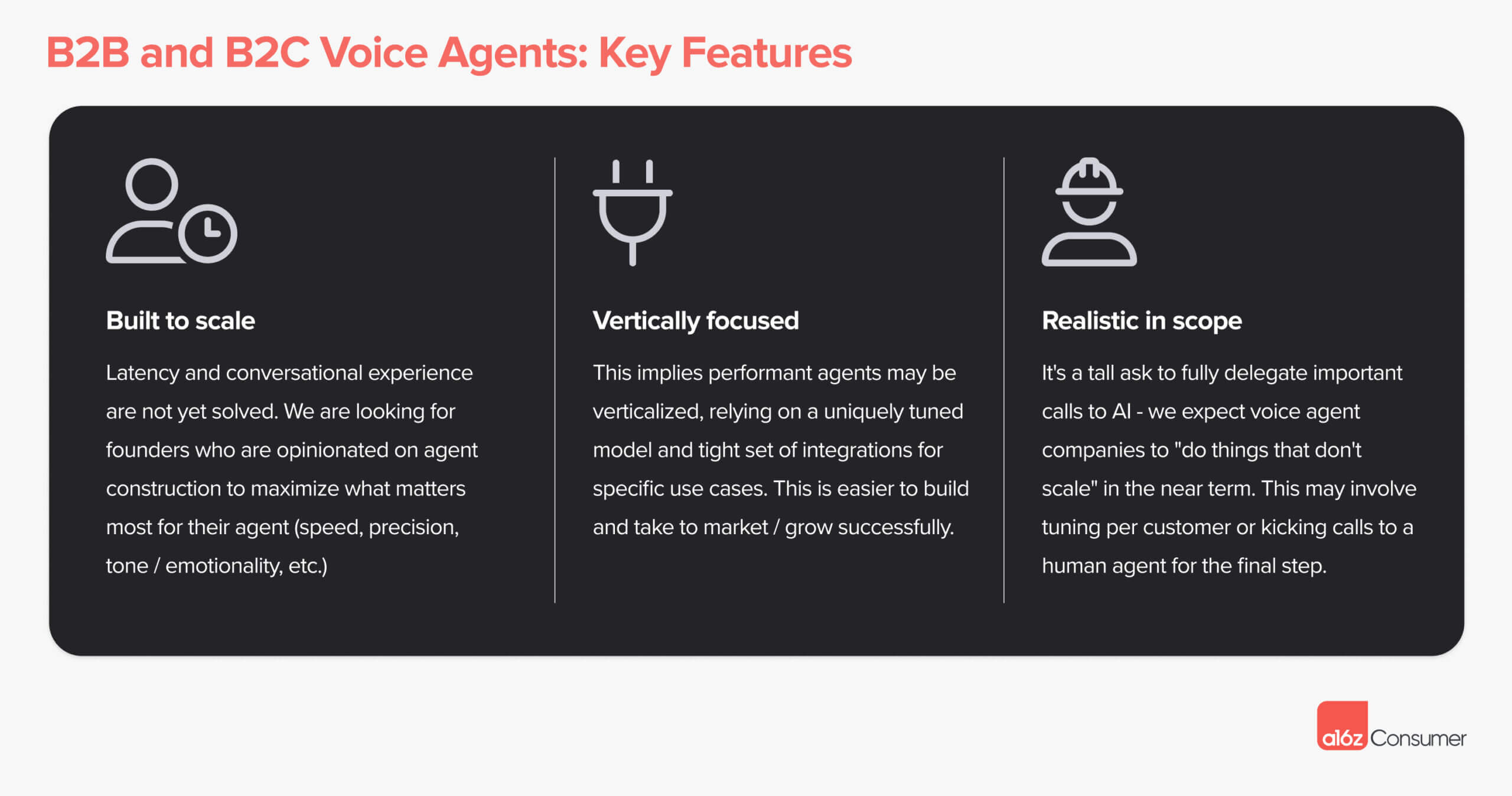

There is massive opportunity at each layer: infrastructure players, consumer interfaces, and enterprise agents. For B2C and B2B voice agents, we have a few hypotheses about the most exciting emerging products, which we lay out below.

If you are building here, reach out to omoore@a16z.com and anish@a16z.com.

The Stack: How Do You Build a Voice Agent?

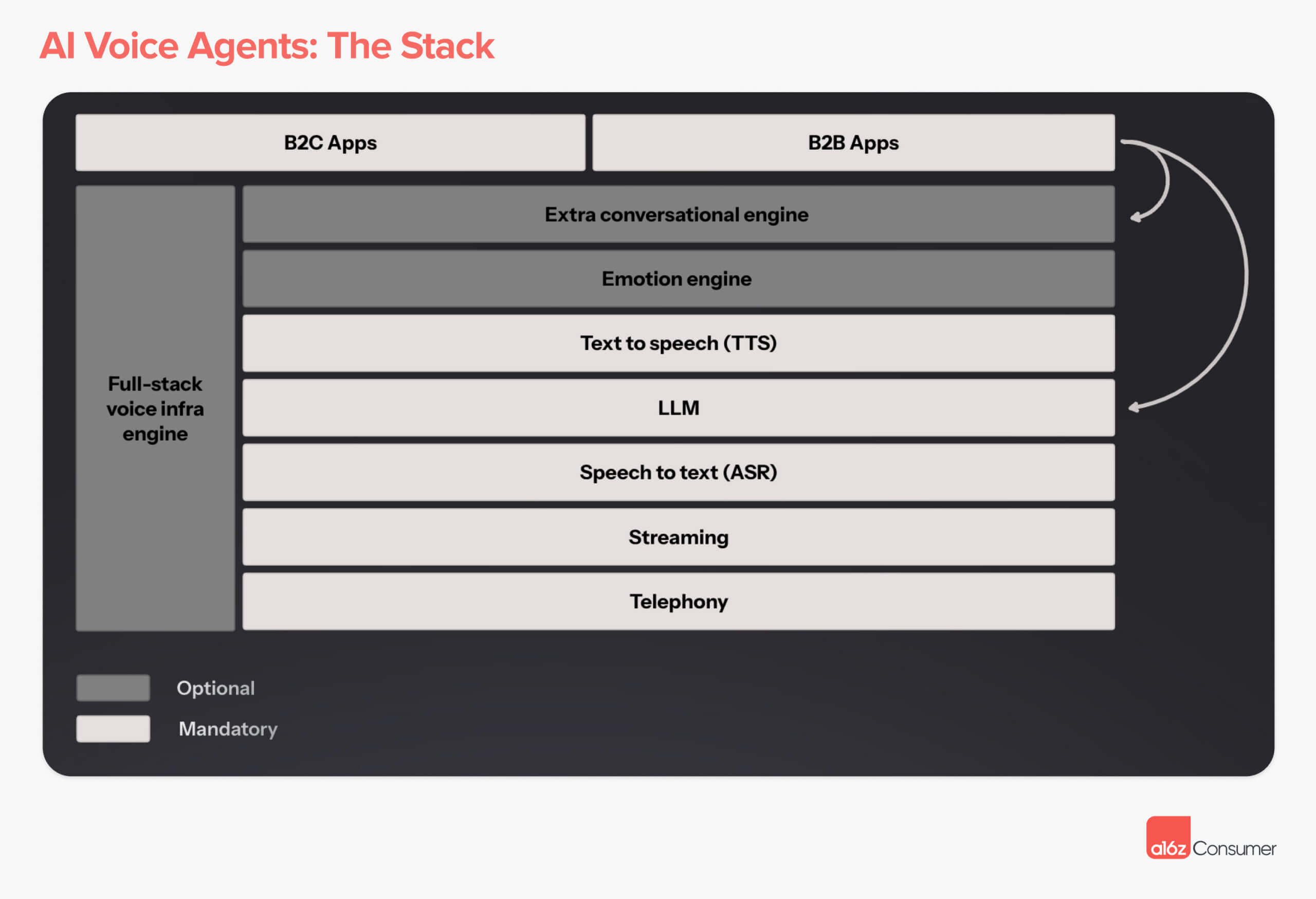

New multi-modal models like GPT-4o may change the structure of the stack by “running” several of these layers at once within a single model. This may reduce latency and cost and enable more natural conversational interfaces, which matters because many agents haven’t been able to reach truly human-like quality with the composed stack.

To function, a voice agent needs to ingest human speech (automatic speech recognition, or ASR), process that input with an LLM to generate a response, and then speak the response back to the human (text-to-speech, or TTS).
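The three-layer loop can be sketched as a single conversational turn. This is a minimal illustration under stated assumptions, not any provider's API: `SpeechToText`, `TextToSpeech`, `VoiceAgent`, and the fake components are hypothetical stand-ins for real ASR, LLM, and TTS services. The LLM is passed in as a plain function, reflecting how apps typically plug in their own model. Production agents stream audio and overlap these steps to cut latency; this version runs them one after another for clarity.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


# Hypothetical: an LLM modeled as a function from chat history to reply text.
LLM = Callable[[List[dict]], str]


@dataclass
class VoiceAgent:
    asr: SpeechToText
    llm: LLM
    tts: TextToSpeech
    history: List[dict] = field(default_factory=list)

    def handle_turn(self, caller_audio: bytes) -> bytes:
        # 1. ASR: caller speech -> text
        user_text = self.asr.transcribe(caller_audio)
        self.history.append({"role": "user", "content": user_text})
        # 2. LLM: conversation so far -> reply text
        reply_text = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply_text})
        # 3. TTS: reply text -> audio played back to the caller
        return self.tts.synthesize(reply_text)


# Fake components so the sketch runs without any external service.
class FakeASR:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


def echo_llm(history: List[dict]) -> str:
    return "You said: " + history[-1]["content"]


agent = VoiceAgent(FakeASR(), echo_llm, FakeTTS())
print(agent.handle_turn(b"book an oil change"))  # b'You said: book an oil change'
```

Swapping any one layer (a different ASR vendor, a custom-tuned LLM) only changes the object passed in, which is roughly the trade-off between self-assembling the stack and using a full stack platform.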

In some approaches, the LLM (or a series of LLMs) handles the conversational flow and emotional tone. In others, dedicated engines add emotion, manage interruptions, and so on. “Full stack” voice providers offer all of this in one place.
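One job such an engine takes on is interruption handling, often called “barge-in”: if the caller starts talking, the agent should stop speaking and listen. A minimal sketch, assuming a hypothetical `caller_is_speaking` callback backed by some voice activity detector on the inbound audio; the function and parameter names are illustrative, not taken from any specific provider.

```python
from typing import Callable, Iterable, List


def play_with_barge_in(
    chunks: Iterable[bytes],
    caller_is_speaking: Callable[[], bool],
    play: Callable[[bytes], None],
) -> bool:
    """Play agent audio chunk by chunk; stop if the caller starts talking.

    Returns True if playback finished, False if the caller interrupted.
    """
    for chunk in chunks:
        # Check for caller speech before each chunk. A real system would
        # read a voice activity detector on the live inbound stream.
        if caller_is_speaking():
            return False  # yield the floor: stop talking and listen
        play(chunk)
    return True


played: List[bytes] = []
vad_readings = iter([False, False, True])  # caller starts talking on the 3rd check
finished = play_with_barge_in(
    [b"Your ", b"appointment ", b"is ", b"confirmed."],
    lambda: next(vad_readings),
    played.append,
)
print(finished, played)  # False [b'Your ', b'appointment ']
```

Smaller chunks make the agent yield faster but mean more frequent checks; where that trade-off lands is one of the details that separates a natural-feeling agent from a stilted one.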

Consumer (B2C) and enterprise (B2B) apps sit on top of this stack. Even when using third-party providers, apps typically plug in their own custom LLM, which often also serves as the conversational engine.

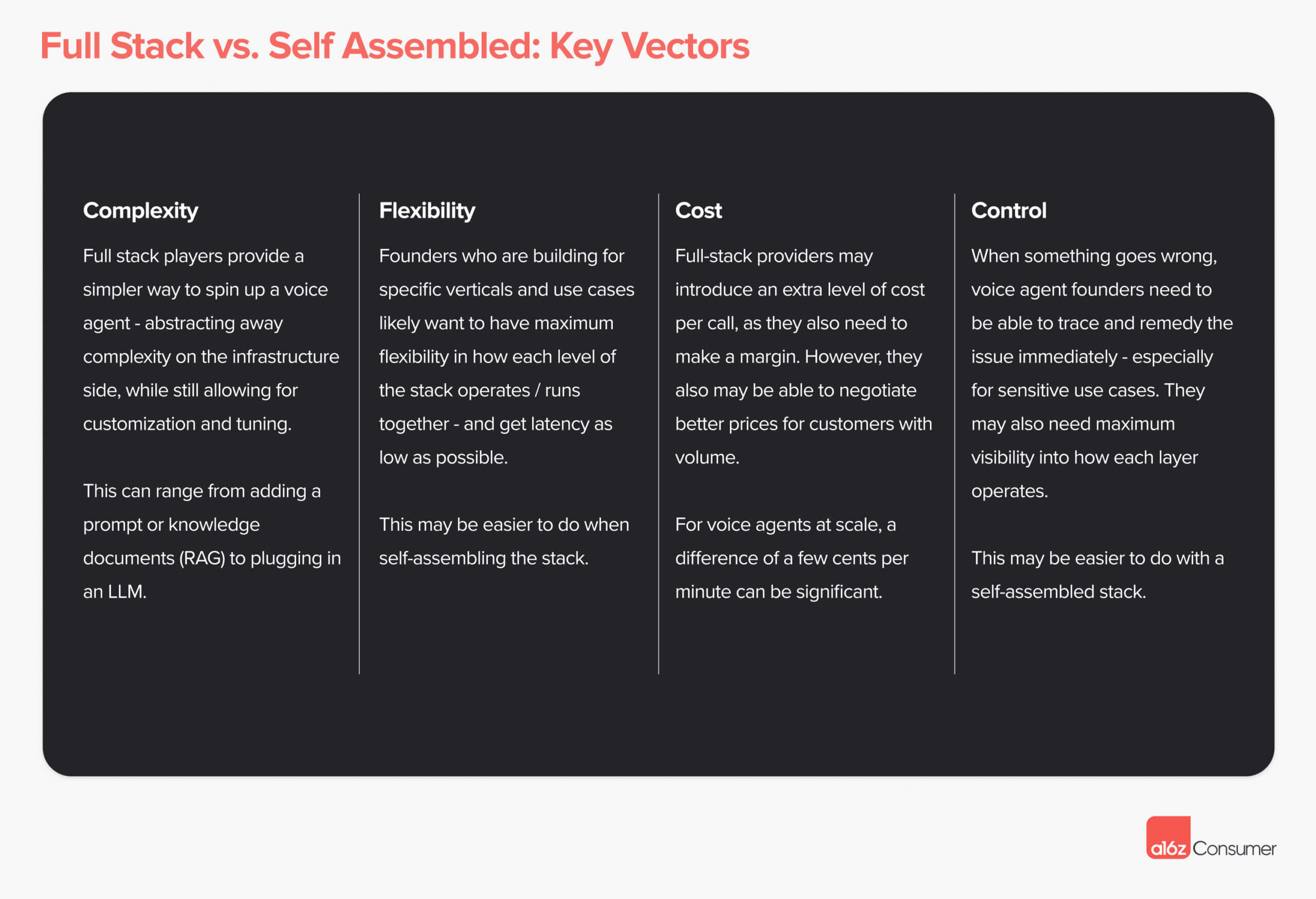

Full Stack vs. Self-Assembled

Voice agent founders can choose between spinning up an agent on a full stack platform (ex. Retell, Vapi, Bland) or assembling the stack themselves. A few key factors bear on this decision:

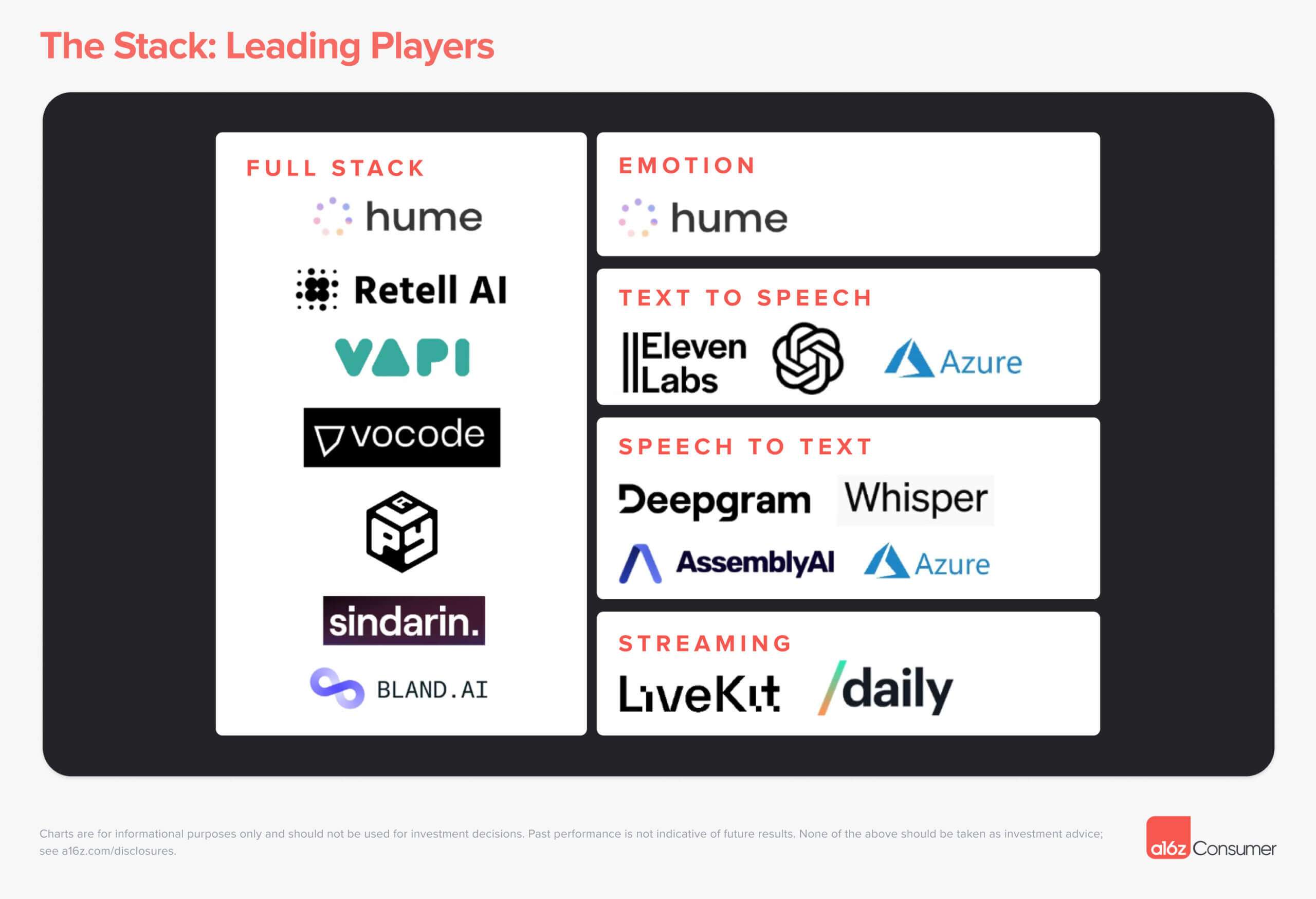

These are some of the leading players across each stack level now. This is not a comprehensive market map, but represents the names most commonly raised by voice agent founders. We expect this stack to change significantly as multi-modal models emerge.

If you’re working on a voice agent infrastructure company, reach out to Jennifer Li (jli@a16z.com) and Yoko Li (yli@a16z.com) on our team.

B2B Agents: Our Thesis

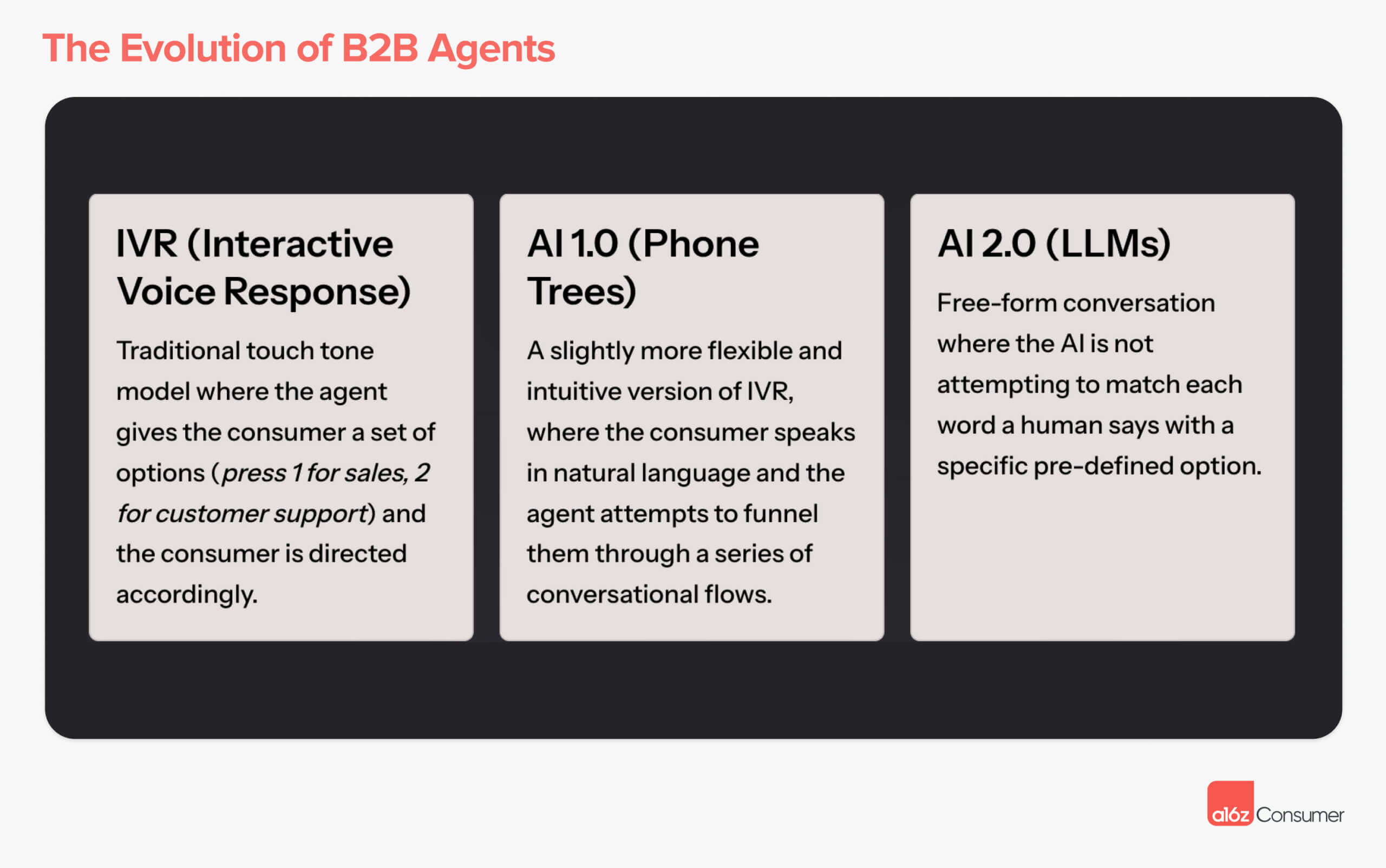

We are transitioning from the 1.0 wave of AI voice (phone trees) to the 2.0 wave (LLM-based). 2.0 companies have emerged over roughly the last 6 months. 1.0 companies may be more accurate today, but the 2.0 approach should prove far more scalable, and more accurate, in the long term.

There is unlikely to be one horizontal model or platform that works across all types of enterprise voice agents. Verticals differ in a few key ways: (1) call types, tones, and structures; (2) integrations and processes; and (3) go-to-market (GTM) motion and “killer features.”

This is likely to produce an explosion of vertical agents with highly opinionated UIs, which requires founding teams with deep domain expertise or interest. Because labor is the #1 cost center for many businesses, the total addressable market (TAM) is large for companies that “get it right.”

The most near-term opportunities may be in industries that live and die by phone appointments, have significant labor shortages, and have low call complexity. As agents become more sophisticated, they will be able to tackle more complex calls.

B2B Agents: Evolution

We’ve seen three primary waves of tech in the B2B voice agent space:

Many voice agent companies are focusing on a specific industry (ex. auto services) or a specific type of task (ex. appointment scheduling). There are a few reasons for this:

- Execution difficulty. The quality bar for entrusting calls to an AI is high, and the conversational flow (plus the backend workflow on the customer’s side) can quickly become complex and specific. Companies that build for the “edge cases” in these verticals (ex. unique vocabulary that a general model would misunderstand) have a better chance of success.

- Regulations and licenses. Some voice agent companies face special restrictions, required certifications, and similar hurdles. A classic example is healthcare (ex. HIPAA compliance), though this is also cropping up in categories like sales, where AI cold calling is regulated at the national level.

- Integrations. Nailing the user experience (both for the business and the consumer) in some categories may require a long tail of integrations — or specialized integrations that aren’t worthwhile to build unless you are attempting to serve that specific use case.

- Wedge into other software. Voice is a natural entry into core customer actions like bookings, renewals, quotes, etc. In some cases, this will be a wedge into a broader vertical SaaS platform for these businesses — especially if the customer set still largely operates offline.

B2B Agents: Where We See Opportunity

LLM-based, but not necessarily 100% automated from day one.

The “strong form” of an AI voice agent will be an entirely LLM-driven conversation, not an interactive voice response (IVR) or phone tree approach. However, because LLMs are not yet reliable end to end, there is likely to be some (temporary) “human in the loop” for more sensitive or larger transactions. This also makes vertical-specific workflows particularly important: with fewer edge cases, they can maximize the probability of success while minimizing human intervention.
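A human-in-the-loop gate can be as simple as a routing rule applied before the agent executes an action. This is a minimal sketch, assuming the agent proposes a structured action first; the action kinds, dollar threshold, and confidence score below are all hypothetical, and how a team estimates confidence (or whether it uses one at all) will vary.

```python
from dataclasses import dataclass


@dataclass
class Action:
    kind: str              # hypothetical, e.g. "book_appointment", "issue_refund"
    amount_usd: float      # transaction value, 0 if none
    llm_confidence: float  # 0.0-1.0, however the system estimates it


# Illustrative policy values only, not recommendations.
SENSITIVE_KINDS = {"issue_refund", "cancel_policy"}
MAX_AUTO_AMOUNT_USD = 500.0
MIN_CONFIDENCE = 0.85


def route(action: Action) -> str:
    """Return "auto" to let the agent proceed or "human" to escalate."""
    if action.kind in SENSITIVE_KINDS:
        return "human"
    if action.amount_usd > MAX_AUTO_AMOUNT_USD:
        return "human"
    if action.llm_confidence < MIN_CONFIDENCE:
        return "human"
    return "auto"


print(route(Action("book_appointment", 0.0, 0.95)))     # auto
print(route(Action("book_appointment", 1200.0, 0.95)))  # human
print(route(Action("issue_refund", 20.0, 0.99)))        # human
```

A narrow vertical keeps the set of action kinds small, which makes rules like these easier to write and lets the thresholds loosen as the agent proves itself.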

Tuning custom models vs. prompting an LLM.

B2B voice agents will need to navigate specialized (or vertical-specific) conversations for which a general LLM is likely insufficient. Many companies are tuning per-customer models (using a few hundred to a few thousand data points) and will likely generalize this work into a company-wide base model. Custom tuning may even continue for enterprise clients. Note: some companies may tune a “general” model (used across clients) for their specific use case(s) and then prompt on a per-customer basis.
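The second pattern (one tuned model shared across clients, with per-customer prompting) might look roughly like this. Everything here is hypothetical: `SHARED_MODEL_ID`, `CustomerConfig`, and the request shape are illustrative, and the role-based messages list only loosely mirrors the chat format common to LLM APIs rather than any specific vendor’s schema.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical ID of one model tuned for the use case and shared by all clients.
SHARED_MODEL_ID = "auto-service-scheduler-v1"

BASE_INSTRUCTIONS = (
    "You are a phone agent that books service appointments. "
    "Confirm the date, time, and service before ending the call."
)


@dataclass
class CustomerConfig:
    business_name: str
    hours: str
    services: List[str]


def build_request(cfg: CustomerConfig, history: List[Dict[str, str]]) -> Dict:
    """Combine the shared tuned model with a per-customer system prompt."""
    system_prompt = (
        f"{BASE_INSTRUCTIONS}\n"
        f"Business: {cfg.business_name}\n"
        f"Hours: {cfg.hours}\n"
        f"Services offered: {', '.join(cfg.services)}"
    )
    return {
        "model": SHARED_MODEL_ID,
        "messages": [{"role": "system", "content": system_prompt}, *history],
    }


shop = CustomerConfig("Main St Auto", "Mon-Fri 8am-6pm", ["oil change", "tire rotation"])
req = build_request(shop, [{"role": "user", "content": "Can I come in Friday?"}])
print(req["model"])  # auto-service-scheduler-v1
print(req["messages"][0]["content"].splitlines()[-1])
# Services offered: oil change, tire rotation
```

The design choice is where customer-specific knowledge lives: in the weights (per-customer tuning) or in the prompt (shared model plus configuration). The prompt route is cheaper to onboard each new customer but relies on the shared model already handling the vertical well.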

Technical teams with domain expertise.

Given their complexity, some prior AI background will be helpful — if not necessary — for spinning up and scaling high quality B2B voice agents. However, understanding how to package the product and wedge into the vertical is likely to be equally important — requiring either domain expertise or strong interest. You don’t need a PhD in AI to build and launch an enterprise voice agent!

Sharp POV on integrations + ecosystem.

Similar to the above, buyers in each vertical have a few specific features or integrations that they are typically looking to see before they will make a purchase. In fact, this may be the proof point that elevates the product from “useful” to “magic” in their assessment. This is another reason why it makes sense to start fairly verticalized.

Either “enterprise grade” or strong product-led growth (PLG) motion.

For verticals with significant revenue concentration in the top companies/providers, voice agent companies may start with enterprises and eventually “trickle down” to SMBs with a self-serve product. SMB customers are desperate for solutions here and are willing to test a variety of options — but may not provide the scale/quality of data that allows a startup to tune the model to enterprise caliber.

B2C Agents: Our Thesis

In B2B, voice agents largely replace existing phone calls to complete a specific task. With consumer agents, the user has to actively choose to keep engaging, which is challenging because voice is not always a convenient interface. This means the product bar is “higher.”

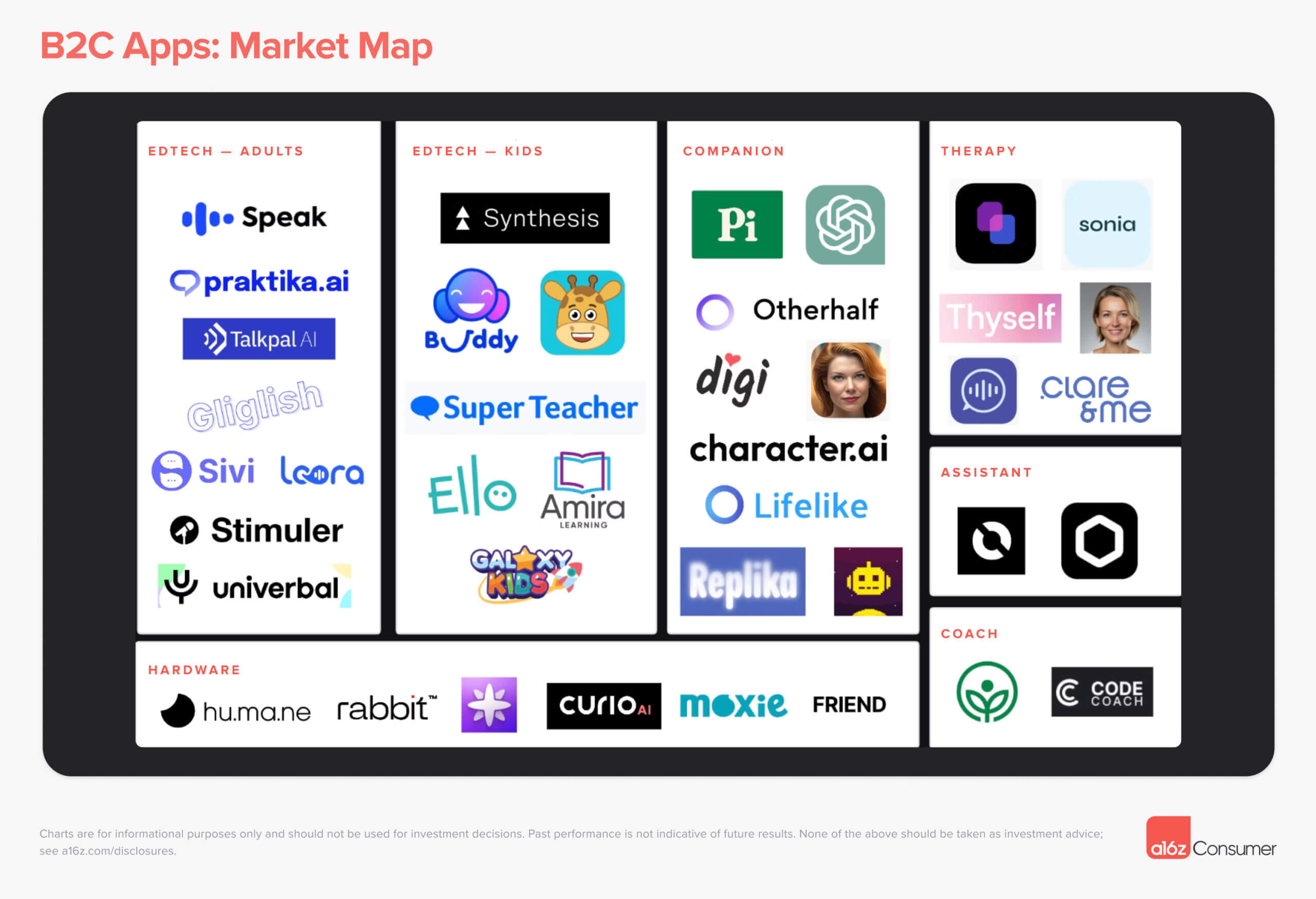

The first and most obvious application of consumer voice agents is taking expensive or inaccessible human services, and replacing the supplier with an AI. This includes therapy, coaching, tutoring, and more — anything dialogue-based that can be completed virtually.

However, we believe the true magic in B2C voice agents is likely yet to come! We’re looking for products that use the power of voice to enable new kinds of “conversations” that didn’t exist before. This may reinvent the form factor of existing services, or create entirely new ones.

For products that nail the UX, voice agents offer an opportunity to engage consumers at a level never before seen in software, truly mimicking human connection. This may take the form of the agent as the product itself, or of voice as one mode within a broader product.

B2C Agents: Evolution

So far, the dominant consumer AI voice agents are from large companies, like ChatGPT Voice and Inflection’s Pi app. There are a few reasons consumer voice has been slower to emerge:

- Voice is not easy to deliver at scale, and large companies already have consumer distribution and best-in-class models in terms of accuracy, latency, etc. This advantage is especially pronounced given the recent launch of GPT-4o.

- B2B voice agents “plug in” AI to an existing process, while B2C voice agents require users to adopt a new behavior. This can take longer and demands a more magical product.

- Consumers have been negatively conditioned toward voice AI by experiences with products like Siri, so they are not necessarily eager to try new apps.

- Broad-based products are generally able to deliver on the basic use cases of voice AI — tutoring, companionship, etc. B2C voice startups are just starting to tackle use cases or create experiences that ChatGPT, Pi, etc. would not handle.

B2C Agents: Where We See Opportunity

Strong POV on why voice is necessary.

We are excited about products and founders that are opinionated on how voice brings unique value to the product, not just “voice for the sake of voice.” In many cases, a voice interface is actually a net negative versus a text interface, as it is harder to consume and extract information from.

Strong POV on why real-time voice is necessary.

While voice is difficult to consume, real-time voice is even more so (vs. async voice messages). We are excited about founders who have a perspective on why their product needs to be built around live conversations, perhaps for human-like companionship, a practice environment, etc.

Non-skeuomorphic to pre-AI “product.”

We suspect that the strong-form products will not be a direct translation of a previously human-to-human conversation, where the AI voice agent simply plugs in for the human provider. First, it’s difficult to live up to that standard; more importantly, there is an opportunity to deliver the same value better (more efficiently, more joyfully) using AI.

Verticalized to the point where model quality alone doesn’t decide the winner.

The leading general consumer AI products (ChatGPT, Pi, Claude) have high-quality voice modes. They can meaningfully engage in many types of conversations and interactions, and they will likely win on latency and conversational flow in the near term because they host their own models and stack.

We are excited to see startups succeed either by tailoring or tuning for a specific type of conversation, or building a UI that provides more context and value to the voice agent experience — ex. tracking progress over time, or steering the conversation/experience in an opinionated way.

If you’re building an AI voice agent, reach out to omoore@a16z.com and anish@a16z.com — we’d love to hear from you.

-

Olivia Moore is a partner on the investing team at Andreessen Horowitz, where she focuses on AI.

-

Anish Acharya is an entrepreneur and general partner at Andreessen Horowitz. At a16z, he focuses on consumer investing, including AI-native products and companies that will help usher in a new era of abundance.