2025 was the year of video. AI-generated ads went mainstream. Launch videos from seed-stage startups got millions of views. Video podcasts and interviews exploded.

What you didn’t see was all the work behind the scenes. Cutting 90 minutes of footage into a three-minute short. Correcting lighting and audio in post-production because you couldn’t nail it in the shoot. Searching for the right music and sound effects.

A common rule of thumb in video production is that you’ll spend 80% of your time & energy on editing, and 20% on filming (or now, generating). Crafting compelling video is typically a long and tedious process – and few people have the “taste” to do it right. There’s a significant barrier to entry.

We now have the technology to hand over some of this work to AI agents, which can help us produce both filmed and generated content. Vision models can watch and comprehend massive amounts of video footage. Agents can analyze, plan, and use editing tools on your behalf. And we have enough training data to teach models what makes a video great.

Video agents will blow out the supply curve for quality video – the kind of content that requires days (or weeks) from professional video editors today. What Cursor did for coding, these agents will do for video production.

Why now?

There’s immense demand for agents that give anyone the skills (and taste) of a professional video editor. So why don’t these products already exist? A few recent developments have unlocked progress here:

- Vision models can now process large amounts of video. You have to understand video before you can edit it. This is a non-trivial challenge – there’s a lot of information to process, even in a short clip. We’ve seen a lot of progress with recent LLMs like Gemini 3, GPT-5.2, Molmo 2, and Vidi2, which are inherently multimodal and have longer context windows. Gemini 3 can now process up to an hour of video! You can upload it as an input and ask the model to generate timestamped labels, find a specific moment, or just summarize what’s happening.

- Models can now use tools. AI video editors need to be able to take action – not just describe what’s happening or suggest changes. We’re starting to see meaningful progress around LLMs as real agents that can use tools. One of my favorite examples of this is Claude using Blender (a notoriously tricky product that many humans haven’t mastered). You can imagine how this evolves as you give agents access to more tools.

- Image and video generation models have improved. I’m a big believer that many video production pipelines will be hybrid – a mix of AI and filmed content. Imagine filming interviews for a documentary, but generating B-roll or historical footage with AI. Or using a motion transfer model to take a reference animation and apply it to a real character. For any of these things to work, models needed to reach a level of quality & consistency high enough to be valuable. Now, that’s finally happening.
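To make the first point concrete, here is a minimal sketch of what you might do with timestamped labels once a vision model has produced them. The `[MM:SS] description` line format, the sample labels, and the helper names are all assumptions for illustration; real model output varies and would need its own parsing.

```python
import re
from dataclasses import dataclass


@dataclass
class Label:
    start_s: int  # offset from the start of the video, in seconds
    text: str     # the model's description of that moment


# Assumed line format: "[MM:SS] description" (hypothetical, not a model guarantee).
LINE_RE = re.compile(r"^\[(\d+):(\d{2})\]\s*(.+)$")


def parse_labels(raw: str) -> list[Label]:
    """Turn raw label text into structured labels, skipping lines that don't match."""
    labels = []
    for line in raw.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            minutes, seconds, text = match.groups()
            labels.append(Label(int(minutes) * 60 + int(seconds), text))
    return labels


def find_moments(labels: list[Label], query: str) -> list[Label]:
    """Case-insensitive keyword search over the label descriptions."""
    needle = query.lower()
    return [label for label in labels if needle in label.text.lower()]


# Made-up sample output for an hour-long interview.
sample = """[00:05] Host introduces the guest
[12:40] Guest demos the product
[47:15] Audience Q&A begins"""

labels = parse_labels(sample)
for hit in find_moments(labels, "demo"):
    print(hit.start_s, hit.text)
```

Once the labels are structured like this, an agent can jump to a moment by offset instead of scrubbing through the whole file.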

What will these agents do?

A few examples of the types of tasks they’ll be able to tackle for us:

- Process – whether you’re filming or generating a video, you’ll likely end up with a lot more footage than you need (sometimes by a factor of hundreds – imagine how many “takes” there are for each scene of a movie or TV show). It’s often a challenge to sort through all this footage, organize it, and decide what to use. Products like Eddie AI can take hours of uploaded video and do things like identifying A-roll vs. B-roll, processing multiple camera angles, and comparing takes.

- Orchestrate – if we assume that many videos are going to include some element of AI in the future, we’re going to need agents that orchestrate all of the models. For example – imagine you want to add an AI animation to an educational video. You’ll need an agent that can generate the images, send them to a video model, and stitch the outputs together. Products like Glif are launching agents that coordinate between multiple models on a user’s behalf.

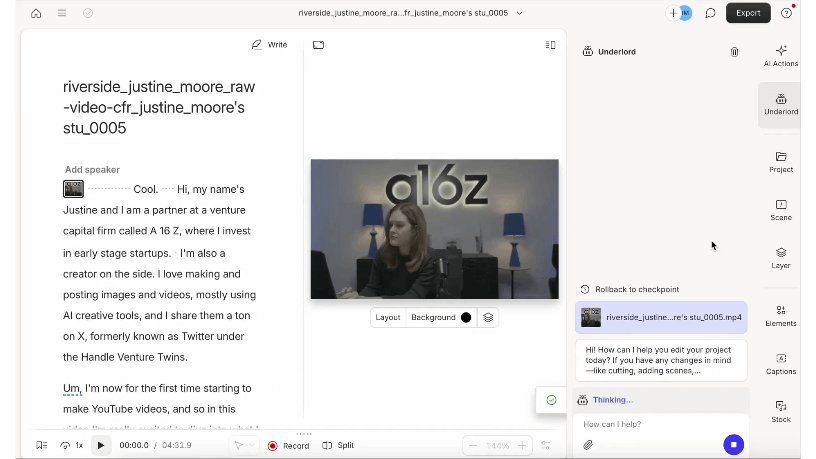

- Polish – fixing the small details often ends up taking a video from good to great. But if you’re not a professional video editor, you may be overwhelmed by the flood of little tasks needed to polish a video. For example – you may want to adjust lighting between clips, clean noise out of the audio track, or take out filler words (“ummms” and “uhhhs”) during an interview. Products like Descript’s Underlord agent can take a video, make all these changes for you, and deliver the final version.

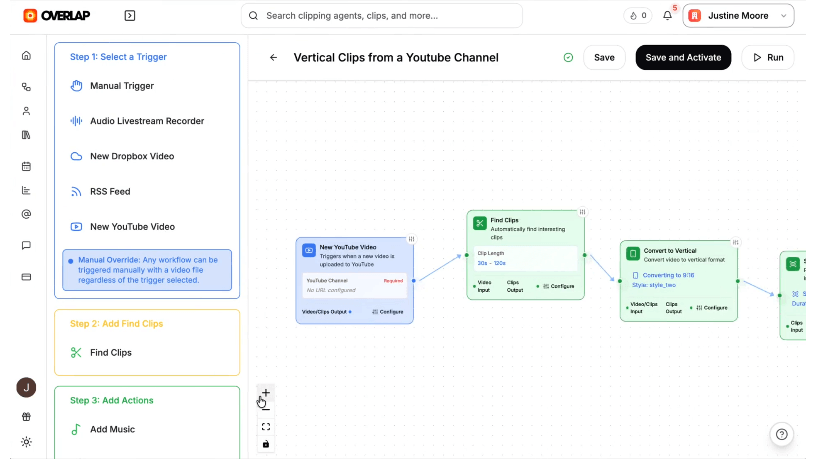

- Adapt – when you make a good video, it often makes sense to adapt it for more reach. For example, you may want to cut a YouTube podcast into short clips with different aspect ratios to post on your X, Instagram, and TikTok accounts. Or even translate a video into other languages (and re-dub the speakers) to reach an international audience. Platforms like Overlap allow you to set up node workflows for these adaptation tasks.

- Optimize – the ultimate goal isn’t just replacing manual tasks with AI. It’s building agents with taste that can make your videos better. There’s a reason people hire professional video editors: they make things look good. They spend years learning everything from how to hook viewers to pacing the storyline and using music to build an emotional reaction. There are thousands of micro-decisions there. YouTuber Emma Chamberlain famously said that she used to spend 30-40 hours editing a ~15-minute vlog. What if an AI agent could watch your footage, ask about your objectives, and then craft a few draft versions of a video for you to iterate on? You review and direct – “The opening is too slow.” “Cut the middle section.” “Make the ending hit harder” – and the agent executes.
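As one small illustration of the "Polish" tasks above, here is a rough sketch of how filler-word removal could work under the hood. It assumes you already have a word-level transcript with timestamps from some speech-to-text step; the `Word` type, the filler list, and the `max_gap` threshold are hypothetical choices, not how any product named here actually does it.

```python
from dataclasses import dataclass

# Assumed filler vocabulary; a real tool would likely use a larger, tuned list.
FILLERS = {"um", "umm", "uh", "uhh"}


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


def keep_segments(words, fillers=FILLERS, max_gap=0.3):
    """Return (start, end) spans to keep, dropping filler words.

    Neighboring kept words are merged into one span when the pause between
    them is at most max_gap seconds and no filler was cut in between.
    """
    segments = []
    cut_since_last = False  # True if a filler was skipped since the last kept word
    for word in words:
        if word.text.lower().strip(".,!?") in fillers:
            cut_since_last = True
            continue
        can_merge = (
            segments
            and not cut_since_last
            and word.start - segments[-1][1] <= max_gap
        )
        if can_merge:
            segments[-1] = (segments[-1][0], word.end)
        else:
            segments.append((word.start, word.end))
        cut_since_last = False
    return segments


# Made-up transcript: "So, um, we launched, uh, today."
transcript = [
    Word("So", 0.0, 0.3),
    Word("um", 0.4, 0.9),
    Word("we", 1.0, 1.2),
    Word("launched", 1.2, 1.8),
    Word("uh", 1.9, 2.3),
    Word("today", 2.4, 2.9),
]

segments = keep_segments(transcript)
print(segments)
```

The output is a cut list rather than an edited file. An agent would hand those spans to whatever editing tool it controls, which keeps the decision about what to cut separate from the rendering.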

Video has won. It’s how we learn, market, and connect. But the editing bottleneck keeps growing. More footage captured, more platforms to publish on, more formats required.

The good news is that the technology to solve this exists. Vision models, tool-using agents, and massive amounts of training data have all matured in the past year. The pieces are in place.

This means that AI editing agents will dramatically increase the quality of all video we see in the coming months and years, along with the rate at which it’s created.

2025 was the year of video. 2026 is the year we let agents edit it.

If you’re building anything in the AI video space, reach out on X at @venturetwins or email at jmoore@a16z.com. I especially want to talk if you’re building agents that can help me edit my companion video to this blog post (see below!).