Back in 1996, Wired ran a piece on the battle between different search providers during the early days of the internet. The big takeaway from that period (pre-Google, pre-PageRank) was that all the startups were approaching internet search in wildly different ways. Yahoo had a catalog-like approach, where sites had to be painstakingly classified by human workers. Inktomi, a long-forgotten search backend, used rudimentary crawlers and indexed results based on an in-house textual relevance algorithm. Excite was a user-facing search portal, which took an inverted index of the web and clustered sites with roughly similar linguistic profiles together. It was a simpler time: in some ways, working on search in the ’90s was more like being a librarian than a research engineer.

But all of those startups were left in the dust by Google, which was founded two years later in 1998. Google’s PageRank algorithm used the number and quality of backlinks to judge a website’s legitimacy, and Google quickly became the best way for humans to browse the internet, and for brands, publishers, and SEO masterminds to promote themselves on the web. Until the age of AI, the problem of search on the internet was largely thought to be solved.

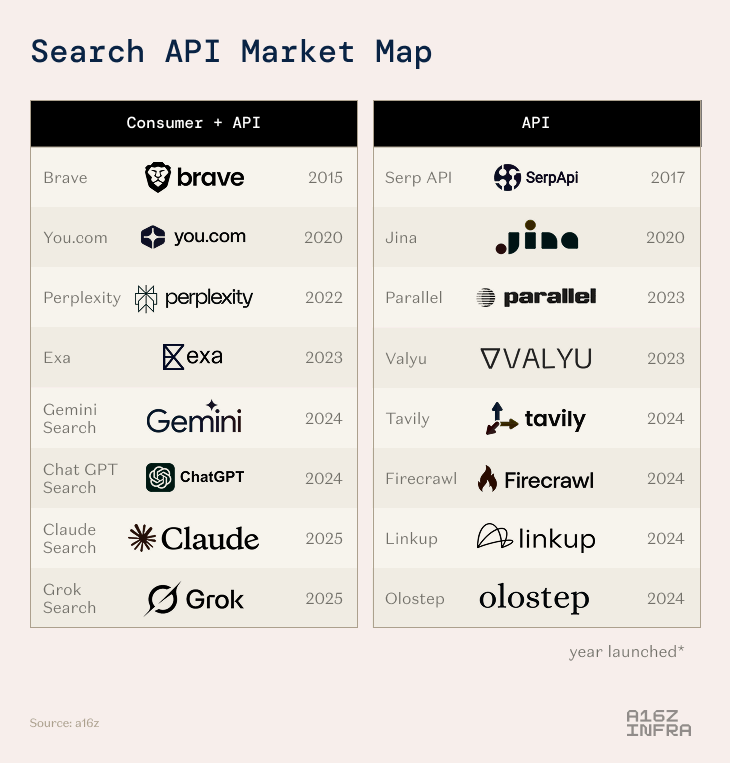

Things are changing again. We have a front row seat to a new search war fought amongst numerous startups. And with agents poised to crawl the web more than is physically possible for humans, the stakes are enormous:

- We need to rethink search for a world where agents are doing most of the browsing and searching instead of humans typing in the question, and dozens of startups are currently competing for the prize.

- Unlike 25 years ago, when each competitor was a search product (Yahoo, Excite, AltaVista…), this time around most of the competition is between API providers who can iterate very quickly and incorporate the rapidly changing state-of-the-art in AI search.

- Most consumer-facing companies with a web search use case (Deep Research, CRM, technical documentation…) are outsourcing to a specialist rather than building their own search stack. That said, a few of the larger labs and startups are building both a consumer-facing product and a developer-facing API service.

- Web search for the past 30+ years was built for humans. Now it’s being rearchitected for agents. But humans will be the ultimate beneficiary here, as information gets surfaced faster, time-intensive research gets accelerated, and new products get built.

Indexing the Web for AI

Web search as we know it today is primarily optimized for humans (or rather, it’s optimized for marketers). It surfaces SEO content, is littered with ads, and contains a lot of extraneous information. This forces developers to scrape large amounts of unnecessary data and summarize on top, which becomes costly and time-intensive. If you tried to add AI search on top of the web as it looks and exists today, you’d end up surfacing and synthesizing a lot of garbage: you’d get a terrible expression of LLMs’ potential, not a great one.

Search can (and should) be built from the ground up to be AI-native. An AI-native search layer should return the most information-rich spans of text, with fine-grained controls for recency and content length, so that results can slot directly into an LLM context window or an agentic workflow.
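
To make the idea of recency and length controls concrete, here is a minimal sketch of how a caller might trim candidate excerpts before handing them to a model. Everything in it is an assumption for illustration: the `Excerpt` fields, the `select_excerpts` helper, and the use of a character budget as a stand-in for a token budget. It does not describe how any particular provider works.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Excerpt:
    url: str
    text: str
    score: float           # relevance score from some upstream ranker (assumed)
    fetched_at: datetime   # when the page was last crawled


def select_excerpts(excerpts, now, max_age_days, max_chars):
    """Keep fresh excerpts, highest score first, until the character budget is spent."""
    cutoff = now - timedelta(days=max_age_days)
    fresh = [e for e in excerpts if e.fetched_at >= cutoff]
    fresh.sort(key=lambda e: e.score, reverse=True)

    selected, used = [], 0
    for e in fresh:
        if used + len(e.text) > max_chars:
            continue  # skip anything that would overflow the budget
        selected.append(e)
        used += len(e.text)
    return selected


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
candidates = [
    Excerpt("https://example.com/a", "Pricing changed on Jan 30.", 0.92, now - timedelta(days=1)),
    Excerpt("https://example.com/b", "An older overview of pricing tiers.", 0.95, now - timedelta(days=400)),
    Excerpt("https://example.com/c", "Short note.", 0.40, now - timedelta(days=3)),
]
picked = select_excerpts(candidates, now, max_age_days=30, max_chars=40)
print([e.url for e in picked])
```

In this toy run the highest-scoring excerpt is dropped because it is over a year old, which is the kind of tradeoff a freshness control exists to express.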

We’ve learned a few big things from our discussions with AI model providers, teams building AI search products, and teams building on top of AI search products. Overall: in contrast to the existing web, where there were a few search giants (one, really), this time around there could be many search providers, thriving on different dimensions and domains while embedded in user-facing products.

While a few leading AI companies have chosen to develop their own in-house search capabilities, the majority of organizations expect to rely on third-party search providers. The high cost and technical complexity of maintaining a web index and search infrastructure make outsourcing more attractive unless an organization operates at a massive scale. As one AI model builder put it to us, the decision comes down to whether it’s worth investing precious engineering time in search rather than other core product improvements. For that particular model team, it made more sense to go with an external provider.

This makes sense when you appreciate how much faster web engineering best practices are iterating and proliferating today versus in the ’90s. Many tools that get built and productized at AI research labs start out as open source repositories that capture the attention and imagination of developers on GitHub and X before moving upstream. Conventions and research simply spread faster today, so it’s logical that we’re seeing engineering decisions in AI search converge. As a likely consequence, the current set of players is much more similar in engineering decisions and architecture than the search players of 25 years ago were: they’ve found what works, and optimized for their choice of tradeoffs, much earlier in their lives.

Performance matters a lot, after all. Re-crawling and re-indexing the entire web at high quality, as Google has, is a non-trivial feat. It’s computationally expensive, requires robust infrastructure, and involves sorting through petabytes of data. Some companies like Exa have taken an infrastructure-intensive approach by deploying their own supply of 144 H200 GPUs and gathering massive URL queues from which web data can be crawled, stored, and ingested into a custom neural database. Parallel, meanwhile, maintains its own large-scale index optimized for AI agents, continuously adding millions of pages daily and offering a programmable search API that surfaces fresh, token-efficient excerpts designed for downstream AI reasoning.

Other search companies like Tavily and Valyu have opted to crawl the web in a more periodic, compute-saving fashion, but employ RL models that help indicate when a certain page should be re-crawled. For instance, a blog post probably won’t update and won’t need to be recrawled, but a dynamic eCommerce site may need to be updated hourly to reflect up-to-date pricing and availability. This is an intentional tradeoff to save on compute, operating under the assumption that the most relevant and frequently visited corners of the web will still be surfaced and refreshed with sufficient accuracy.
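
The recrawl tradeoff can be illustrated with a much simpler rule than the RL models these companies describe. The sketch below swaps in a plain heuristic: halve a page’s recrawl interval when its content hash changed since the last visit, and double it when it did not. The function names and the hourly and 30-day bounds are assumptions chosen for the example, not anyone’s production policy.

```python
import hashlib

MIN_INTERVAL_H = 1        # never recrawl more often than hourly (assumed floor)
MAX_INTERVAL_H = 24 * 30  # never wait longer than about 30 days (assumed ceiling)


def content_hash(body: str) -> str:
    """Fingerprint a page body so two crawls can be compared cheaply."""
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def next_interval(current_hours: float, old_hash: str, new_hash: str) -> float:
    """Halve the interval when the page changed; double it when it did not."""
    if old_hash != new_hash:
        proposed = current_hours / 2
    else:
        proposed = current_hours * 2
    return max(MIN_INTERVAL_H, min(MAX_INTERVAL_H, proposed))


# A static blog post drifts toward the ceiling: 24 -> 48 -> 96 -> 192 hours.
interval = 24.0
unchanged = content_hash("same post")
for _ in range(3):
    interval = next_interval(interval, unchanged, content_hash("same post"))
blog_interval = interval

# A product page whose price keeps changing drifts toward the floor:
# 24 -> 12 -> 6 -> 3 -> 1.5 -> 1 (clamped).
interval = 24.0
prev = content_hash("price: 10")
for price in (11, 12, 13, 14, 15):
    cur = content_hash(f"price: {price}")
    interval = next_interval(interval, prev, cur)
    prev = cur
shop_interval = interval

print(blog_interval, shop_interval)
```

A learned model would replace `next_interval` with a prediction of how likely a page is to have changed, but the compute-saving logic is the same: spend crawls where change is expected.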

The end goal is to balance cost, accuracy and performance while still indexing the web comprehensively. A good index means good coverage of content that humans (and agents) now find relevant.

A brief history of AI search

Think back to the distant days of 2023. When ChatGPT first launched, it didn’t have internet access, which led to frequent instances of outdated or incomplete responses. This resulted in some hilarious dialogues, like the chatbot inventing character names from The Matrix or hallucinating falsehoods about whether or not canned sardines are alive (these kinds of queries today get fact-checked by LLMs that can search). Amusement aside, it was also a massive bottleneck in terms of usefulness: programmers especially found that coding was almost impossible with early LLMs because they couldn’t access external documentation.

A team of engineers (who later became founding members of the AI search team Tavily) was among the first to address this problem through the open-source project GPT Researcher, a viral tool with over 20,000 GitHub stars that enabled agents to browse web sources and incorporate retrieved information into reasoning and execution loops.

GPT Researcher helped define a new paradigm of “retrieval for reasoning,” combining retrieval, summarization, and synthesis. This was a proto-form of the kind of deep research and reasoning tools we see today: an agent would browse the web, unearth sources, judge their relevance and accuracy, and use an LLM to curate useful takeaways. This kind of feature would be a first line of defense against incomplete LLM responses: it could search the web and verify, once and for all, that sardines are not actually alive (lest we have doubts). ChatGPT’s later integration of browsing capabilities in 2024 validated this approach and opened the door to a wave of innovation in search infrastructure and agentic research.

Two other major architectural shifts were vital in enabling AI search: retrieval-augmented generation (RAG) and test-time compute (TTC). RAG gives models live access to the world’s information by querying up-to-date, domain-specific, or proprietary data instead of relying solely on static training weights. TTC lets models allocate more reasoning power at inference, using iterative search, verification, or planning loops to improve answers. Together, they turn static models into dynamic reasoners: systems that can both find the right information and think harder about it. This enabled search to evolve from a static, blue-link utility into an interactive form of intelligence.
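
A toy version of that loop shows how the two ideas fit together. The sketch below is heavily simplified and entirely hypothetical: `retrieve` is a keyword-overlap search over three in-memory sentences rather than a real index, and the "verification" step is a plain substring check standing in for an LLM judging the evidence. The `max_steps` argument plays the role of the test-time compute budget.

```python
CORPUS = {
    "doc1": "The Matrix (1999) stars Keanu Reeves as Neo.",
    "doc2": "Canned sardines are cooked during canning and are not alive.",
    "doc3": "PageRank ranks pages using the link graph.",
}


def retrieve(query: str, k: int = 2) -> list[str]:
    """Toy retriever: rank documents by how many query words they contain."""
    words = set(query.lower().split())
    scored = []
    for text in CORPUS.values():
        overlap = len(words & set(text.lower().replace(".", "").split()))
        if overlap:
            scored.append((overlap, text))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def answer_with_budget(queries: list[str], must_mention: str, max_steps: int):
    """Try successive query reformulations until a retrieved passage mentions
    the target term or the step budget runs out. Returns (evidence, steps_used)."""
    for step, query in enumerate(queries[:max_steps], start=1):
        for passage in retrieve(query):
            if must_mention in passage.lower():
                return passage, step
    return None, min(len(queries), max_steps)


reformulations = ["is tinned fish living", "canned sardines alive"]
evidence, steps = answer_with_budget(reformulations, "sardines", max_steps=3)
print(steps, evidence)
```

The first phrasing retrieves nothing useful, the second one does, and a budget of a single step would have returned no evidence at all. That is the basic bargain of spending more compute at inference: more attempts, better grounding.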

This became an interesting juncture in time. Users started realizing that AI-powered search was becoming powerful, but the exact abstraction layer was still undetermined. Would the ideal end product be built on top of ChatGPT, or would a completely new search infrastructure layer emerge? It’s become increasingly clear that more foundational search infrastructure is required, and the market has started moving in that direction.

Earlier this year, Microsoft quietly killed off their public Bing Search API and steered developers toward a paid “agent builder” that wraps Bing search inside an LLM workflow. Microsoft consciously phased out their index-based search offering, in favor of owning the stack and funneling users to build on top of their own platform. The symbolism was hard to miss: Microsoft was essentially saying “you should view our agent builder as a superior successor to the Bing API.” By that point, there were about a dozen other AI search companies in the field. We explore them below.

The playing field today: platforms vs products

If the same 1996 Wired search story were published today, but about the new startups in AI search, it would take a different angle.

Most AI search products today are converging towards similar forms of API platform offerings. Through a single integration, user-facing products can leverage an API or SDK that provides access to various search capabilities, like returning ranked search results, crawling, extracting information from specific web pages, and performing deep research.

By offering a clean and easy-to-use developer interface, these search APIs can be hooked up directly to agent workflows to provide access to external data and synthesize information directly on top. As a result, customers, specifically developers, are building their own products today with external search integrated: CRMs with automatic enrichment from the web, coding tools that can access live docs, etc. (more on use cases below). There are nuances here: some teams prefer an all-in-one, end-to-end solution from a single provider, while others opt to assemble their own stack, using one solution for search, another for reasoning, and so on. In fact, there are nuances all the way down: some API providers use external indexes rather than building their own in-house.

There’s also a new class of search products emerging that are more consumer-facing. ChatGPT publicly released deep research capabilities in February 2025, and Seda is building more powerful consumer-facing research capabilities like branching and result specification. Exa launched Exa Websets to allow end users to access Exa’s search capabilities without having to integrate with their API.

These consumer-facing search products are typically built on existing AI search infrastructure and trade flexibility for ease of use. For instance, with Exa Websets, GTM teams can immediately start enriching leads without looping in an engineering team for a custom integration, but they can’t customize search workflows if they want to add custom logic.

Customers typically evaluate providers by benchmarking result quality, API performance, and cost. However, there’s no standardized methodology: testing can range from informal experiments to carefully crafted internal “exam-style” benchmarks. Companies often assess multiple providers side-by-side for specific use cases and then select whichever performs best. Some use multiple providers simultaneously to improve data completeness or coverage across domains, such as combining one provider for speed and another for complex or proprietary queries.
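
An informal side-by-side comparison of this kind might look like the sketch below. It is an illustration under stated assumptions: `provider_a` and `provider_b` are stand-ins backed by fixed dictionaries rather than real API clients, the per-query prices are made up, and "hit rate" (the expected URL appears in the top five results) is just one of many possible quality measures.

```python
import time


def evaluate(provider, cases, cost_per_query):
    """Run every (query, expected_url) case and report hit rate, latency and cost."""
    hits, elapsed = 0, 0.0
    for query, expected_url in cases:
        start = time.perf_counter()
        results = provider(query)
        elapsed += time.perf_counter() - start
        hits += expected_url in results[:5]  # a hit if found in the top 5
    n = len(cases)
    return {
        "hit_rate": hits / n,
        "avg_latency_s": elapsed / n,
        "total_cost": cost_per_query * n,
    }


# Hypothetical providers; real ones would call a search API over the network.
def provider_a(query):
    return {"q1": ["u1", "u9"], "q2": ["u7"]}.get(query, [])


def provider_b(query):
    return {"q1": ["u1"], "q2": ["u2", "u3"]}.get(query, [])


CASES = [("q1", "u1"), ("q2", "u2")]
report = {
    "provider_a": evaluate(provider_a, CASES, cost_per_query=0.005),
    "provider_b": evaluate(provider_b, CASES, cost_per_query=0.008),
}
best = max(report, key=lambda name: report[name]["hit_rate"])
print(best, report[best]["hit_rate"])
```

Here the more expensive provider wins on quality, which is exactly the kind of result that pushes some teams to route different query types to different providers.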

Use cases today

Deep Research

Deep research has emerged as one of the most compelling use cases for search APIs. It refers to an agent’s ability to conduct multi-step, open-ended research with both breadth and depth across the internet. These systems can execute in minutes what would take humans many hours, often surfacing information that would otherwise remain undiscovered.

OpenAI’s BrowseComp benchmark provides a strong illustration of the value deep research unlocks. Unlike simple fact retrieval, its 1,266 questions require multi-hop reasoning across scattered sources, creative query reformulation when initial searches fail, and synthesis of context across time periods.

Human experts solve only about 25% of these correctly within two hours. While contrived, this benchmark closely mirrors high-stakes real-world workflows: tracing regulatory filings across time periods, synthesizing competitive intelligence from fragmented data, mapping multi-layer corporate ownership, or conducting diligence where a single overlooked detail can change an outcome. Parallel offers a powerful deep research API that customers are already using to create research reports and conduct market research.

Our emerging view is that deep research will become the dominant and most monetizable form of agentic search. Customers already demonstrate willingness to pay for high-quality research results, and we expect this behavioral shift to accelerate as agents move from simple fact retrieval toward complex synthesis.

CRM Enrichment

A great early use case that’s emerged for AI-powered search is lead enrichment for CRMs. Lead enrichment is typically a time-consuming, manual process that involves stitching together people or company data from many disparate sources. AI-powered search can automatically find and collect relevant information, and even update it periodically to ensure freshness.

Technical documentation / code search

Coding agents need live, up-to-date access to code examples and documentation to generate accurate, high-quality code. Because frameworks, APIs, and syntax evolve rapidly, static datasets become outdated quickly. Search APIs bridge this gap by connecting agents directly to live web sources, ensuring they always reference the freshest and most relevant technical information.

We’re only beginning to see how these capabilities will be used. As search becomes a native layer in AI workflows, new and more compelling use cases are emerging—from agents that learn directly from community forums to systems that continuously adapt to new libraries and frameworks.

Proactive, personalized recommendations

Live web search unlocks new possibilities for delivering personalized, real-time recommendations. By tapping into continuously updated web data, applications and agents can proactively suggest relevant local events, trending activities, or emerging interests tailored to each user’s context and preferences.

Conclusion

Across the market, the 30+ search API customers we spoke with are seeing limited early product differentiation among top AI search providers. Most players compete primarily on speed, pricing, and ease of integration, and offer similar functionality: ranked search, web crawling, document extraction, and deep research. That said, the landscape is changing rapidly. We’re beginning to see some teams break out and offer points of distinction, especially in deep research. The line between “search API” and “LLM-as-search-API” is also blurring: some teams choose to take raw search results and apply an LLM as a filter, while others prefer to have an LLM provide already-filtered results.

As we noted above, teams have taken different architectural approaches to how they index. As one enterprise customer noted to us, the “stack rank” of providers is constantly shifting. This is one of the most exciting spaces we’re tracking, and we’re watching closely as the trade-offs various players have made evolve into bigger differences over time.

It’s worth reiterating that search was becoming bloated and unnavigable in the years leading up to AI: relevant results on Google were buried underneath sponsored links, and websites themselves were blighted with pop-ups and ads. So making search more accessible for agents is another way of saying it’s also getting more accessible for humans. It took thirty years, but search on the internet is finally starting to evolve again. We’re excited by the teams pushing the field forward.

If you’re building in the space, reach out over email at jcui@a16z.com or X at JasonSCui.

Jason Cui is a partner at Andreessen Horowitz, where he invests in infrastructure and AI.

Jennifer Li is a general partner at Andreessen Horowitz, where she leads infrastructure investments with an eye on data systems, developer tools and AI.

Sarah Wang is a general partner on the Growth team at Andreessen Horowitz, where she leads growth-stage investments across AI, enterprise applications, and infrastructure.

Stephenie Zhang is a partner on the Growth investing team, focused on enterprise technology companies.
