There is a unique window of opportunity today for a new 3D Creation Engine (aka game engine) to revolutionize the way we create games, film, virtual worlds, and simulations. Unity, Unreal, Roblox and Godot – the predominant 3D engines – are all 15+ year old technologies architected for a different era of computing. The emergence of generative AI, cloud computing, and new spatial platforms is poised to disrupt 3D creation end-to-end. And, in the case of Unity, the trust damaged by its poorly executed pricing change has left the community seeking alternatives.

Brief History Of Game Engines

Humans have always desired to construct and explore worlds of our own imagination. From the age-old realms created in myths to the digital domains we traverse today in video games, the fidelity of these worlds has never been more immersive nor culturally impactful. And with AI, we are only beginning to understand the possibilities.

Up to now, bringing transcendent virtual worlds to our screens has been the domain of only a few supremely talented creators – legends like Shigeru Miyamoto, James Cameron and George Lucas. Today, we’re entering an age where creative technology will allow anyone to create virtual worlds and bring their imaginations to life.

A game engine is the canvas on which these real-time 3D interactive worlds are constructed and rendered. It combines 3D assets (characters, props/objects, environments, etc.), animation (how they move), code (how they work), physics (how they interact), sound effects (how they sound), particle effects (smoke, fire, liquid, etc.) and narration/speech (what they say).

Before the 2010s, most studios developed their own internal engines to build their games. Now, almost all games are built using the third-party engines shown below, excluding some of the largest AAA-budget games, which are still built on in-house engines (e.g. EA’s Frostbite and the Infinity Ward Engine).

Today, “game engines” are used not just to author video games but any virtual simulation – thus we’re renaming the game engine to 3D Creation Engine.

In the first half century of computer graphics, the tech, tools, and rendering pipelines bifurcated between games and everything else that required high visual fidelity: animation/VFX, architectural visualization, advertising, etc. While games are rendered in real time on end-user devices based on input, VFX/animation shots are rendered offline in data centers and “pre-canned.”

Around the same time that Unreal Engine (“UE”) was being developed, Pixar released RenderMan, which was the state-of-the-art offline renderer for CGI/animation. The name RenderMan was coined in reference to the Sony Walkman for a futuristic rendering software that was so tiny it could fit inside a pocket. Of course, this vision came to reality through the games industry (which is often the tip of the spear for technological innovations). Now, as game engines’ graphics capabilities approach photorealism, the industries and toolsets are colliding.

What is a Next Generation 3D Creation Engine?

Below are some of the key technologies that will underpin the emergence of new 3D Creation Engines:

AI native engines unlock creativity at the speed of thought

We are moving towards a state where creation may start with a simple text box to generate a first draft of your project. And with that, the barriers to entry for new creators dramatically decrease. Given 3D experiences are an amalgamation of art, sound, animation, code, physics and more, a fully AI-native engine will not call underlying generative models in isolation but the models will be multi-modal, leveraging semantic scene or world understanding as input. You place a desk asset in an office setting. An AI copilot then suggests “auto-filling” the scene with appropriate office furniture, like a chair, computer, bookshelves, and office supplies. A character dropped into a room has a physics based understanding of the setting and inherently knows the animation it should enact to roll the chair out and sit on it without the developer having to choose and apply a specific animation file. The relevant sound effect is then generated in real-time.

With the help of AI, the creation experience moves towards auto-pilot, but the creator should always be able to intervene and exercise directorial control over any decision. Content creation will no longer be the project bottleneck; team collaboration and review will be. Creator roles within a studio will move from extreme specialization (e.g. riggers, environment artists, texture painters) towards creative generalists.
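To make the desk example concrete, here is a minimal sketch of a copilot that takes the whole scene as input and only proposes additions, leaving the decision to the creator. Everything in it is a hypothetical stand-in: the `Scene` type, the `COMPANIONS` lookup table (which takes the place of a real multi-modal model), and the `suggest_autofill` function are illustrative names, not part of any existing engine.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Scene:
    """A toy scene: a semantic setting plus the assets placed so far."""
    setting: str                          # e.g. "office"
    assets: List[str] = field(default_factory=list)


# Hypothetical stand-in for a multi-modal model. A real copilot would infer
# these from scene understanding rather than read them from a table.
COMPANIONS: Dict[str, List[str]] = {
    "office": ["chair", "computer", "bookshelf", "office supplies"],
}


def suggest_autofill(scene: Scene, placed_asset: str) -> List[str]:
    """Place an asset, then return suggestions based on the whole scene.

    Suggestions are returned rather than applied, so the creator keeps
    directorial control over what actually enters the scene.
    """
    scene.assets.append(placed_asset)
    candidates = COMPANIONS.get(scene.setting, [])
    # Only suggest what the scene does not already contain.
    return [item for item in candidates if item not in scene.assets]


office = Scene(setting="office")
suggestions = suggest_autofill(office, "desk")
```

The design point is that the suggestion depends on the scene context (an office), not only on the asset just placed (a desk), and that nothing is added until the creator accepts it.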

Cloud native engines maximize customization

Today’s engines are monolithic desktop applications originally designed before modern cloud architecture and the SaaS age. The engine should be highly modular, built on microservices and APIs allowing developers to assemble the right engine for the project at hand without the bloat that today’s engines can bring. Further, with the assets, data, and transformations co-located in the cloud vs locally, developers can scale compute elastically to complete a specific task. Also, rather than deliver a large static build of a game to the end player, assets can be streamed progressively from the cloud.
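As a rough sketch of what "assembling the right engine for the project" could look like, the snippet below resolves a requested set of modules plus their dependencies and leaves everything else out. The module names and the dependency table are assumptions made up for illustration; no existing engine exposes this registry.

```python
from typing import Dict, List, Tuple

# Hypothetical module catalog: each module lists the modules it depends on.
MODULE_DEPENDENCIES: Dict[str, List[str]] = {
    "renderer": [],
    "physics": [],
    "audio": [],
    "netcode": ["physics"],
    "asset_streaming": ["renderer"],
}


def assemble_engine(wanted: List[str]) -> List[str]:
    """Return the requested modules and their dependencies, dependencies first."""
    ordered: List[str] = []

    def visit(name: str, path: Tuple[str, ...]) -> None:
        if name in path:
            raise ValueError(f"dependency cycle through {name!r}")
        if name not in MODULE_DEPENDENCIES:
            raise KeyError(f"unknown module {name!r}")
        if name in ordered:
            return
        for dependency in MODULE_DEPENDENCIES[name]:
            visit(dependency, path + (name,))
        ordered.append(name)

    for module in wanted:
        visit(module, ())
    return ordered


# A small multiplayer project pulls in physics for netcode, but no renderer
# streaming or other modules it never asked for.
build = assemble_engine(["netcode", "audio"])
```

In a cloud-native design each entry could map to a separately deployed service rather than a linked library, but the selection logic would look much the same.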

Multiplayer co-creation for fast iteration by distributed teams

Other creation suites in our toolbelt have moved to cloud native applications (Google Suite, Figma, Canva, Replit, Sequence, etc.), but game development has lagged behind. A cloud native engine will enable creation of 3D experiences using any device from anywhere via a browser. It would allow creators to collaborate instantly in real time with a globally distributed team, increasing iteration speed. Version control would be built in natively, just as it is in Google Docs.

UX that adapts to creators

Part of the magic of cloud native applications is that the UX can be adaptable. There are many personas in a game studio, from artists to developers, with varying levels of technical know-how. Yet, despite different personas and many different use cases for 3D Creation Engines (gaming vs VFX, etc.), the editor UX remains constant. For a novice, it can be incredibly daunting. A cloud-based, modular application can offer many different UXs. The editor may gradually introduce more functionality and controllability to the user as they climb the learning curve. The engine may also have a marketplace of third-party editor UXs, each built to serve a particular use case.

Community modding enabled out of the box

A next gen engine should be designed to enable a first-class modding environment out of the box that developers can choose to extend to end user creators. Because the editor, assets, and data will live in the cloud, a developer will have the option to extend a secure and controlled cloud sandbox environment – much like the one they used to develop the original game – to creators outside the studio. Modders will be able to remix another’s creation and republish with ease.

How Will a Next Gen Creation Engine Arise?

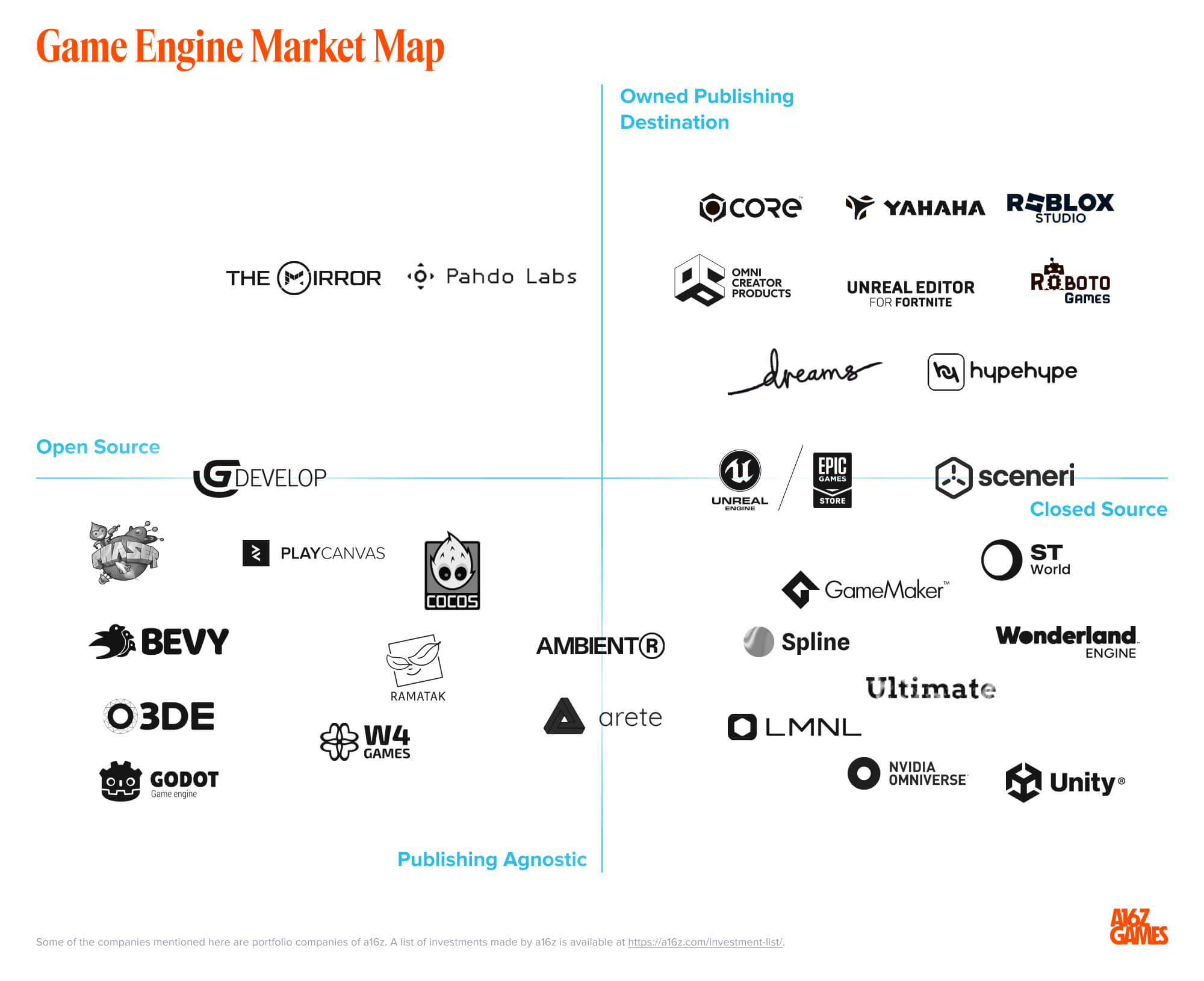

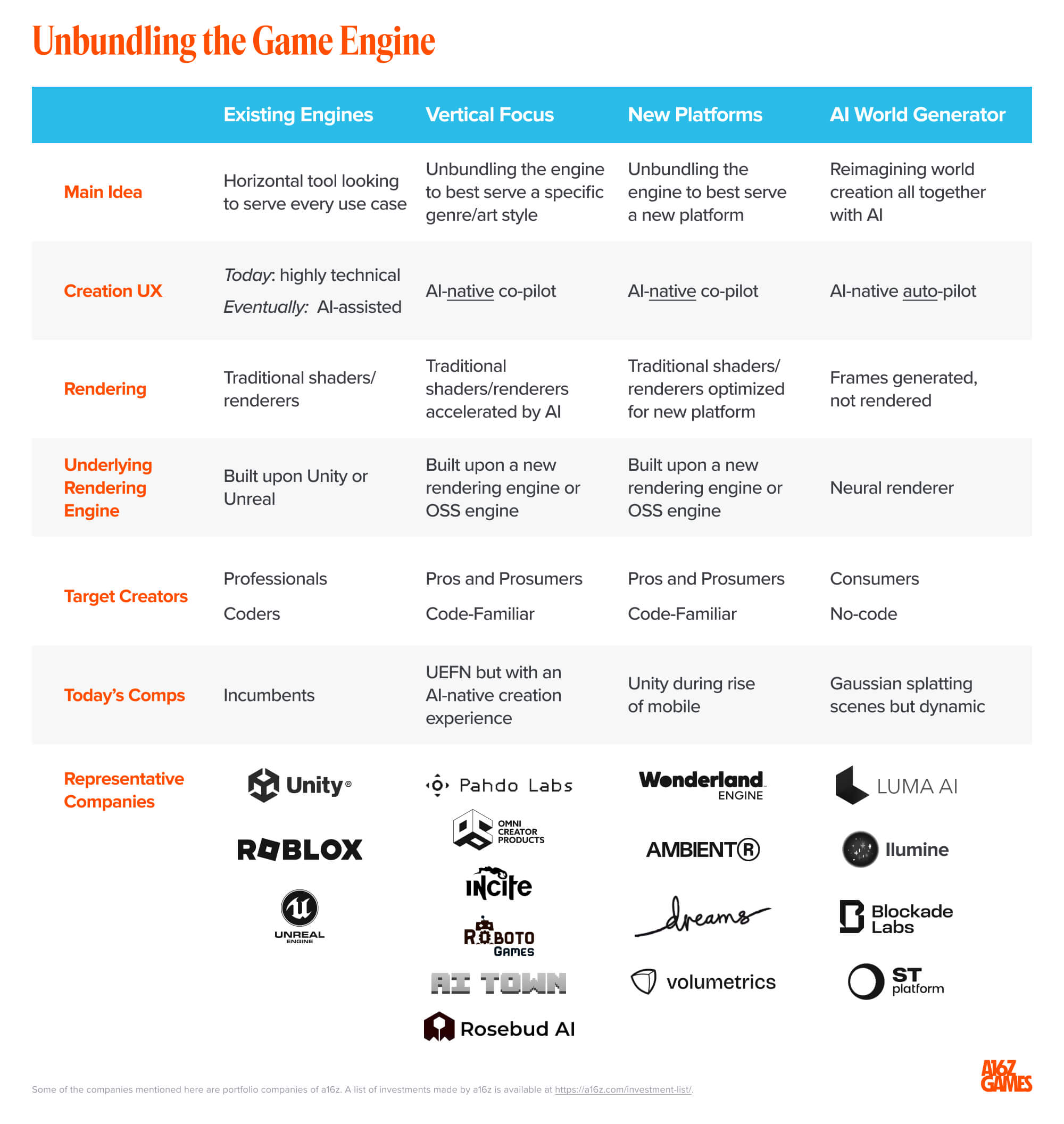

It is unlikely that a Next Gen Engine emerges that simultaneously tries to serve as many use cases on as many platforms as we see with Unity and Unreal today. It becomes an incredibly deep tech investment not just to get to an MVP but to then break through the developer cold start problem.

Rather than competing with the incumbent engines head on trying to serve every use case, a Next Gen Engine will likely arise through a few routes where a traditional game engine is unbundled:

- Vertical Focus: Best-in-class at serving a specific game genre or art style

- New Platforms: Optimizing for a specific platform

- AI World Generator: Building a consumer facing AI world building platform

The incumbent engines have the advantage of having a developer base of millions trained in their tools that studios can hire from. They have a vast marketplace of third party plug-ins, add-ons and integrations in addition to whatever proprietary plug-ins studios might have built themselves. There’s a wealth of community knowledge and learning materials documented in forums, textbooks and tutorial videos. Any professional studio choosing to build on a young untested engine does so at substantial risk to their production.

Unity, founded in 2004, took nearly 5 years of bootstrapping to launch the engine, cultivate a cult following of Mac hobbyist developers, raise venture funding and ascend the curve of relevancy such that studios with real budgets were willing to bet their projects on Unity. To boot, it likely would have failed – alongside a large graveyard of past engine failures – had it not been for the serendipitous luck that came with the launch of the iPhone and the fact that Unity was perfectly suited to support it as a build target.

For a new engine to break through, we’re more likely to see an unbundling with a focus on a few specific use cases to start.

Vertical Focus

The next widely used engine will likely start as a modding and vertical creation platform around a specific game genre or art style rather than a general purpose, horizontal engine. Think Unreal Editor for Fortnite, not Unreal Engine.

It’s difficult to convince developers to build on a new third-party engine that hasn’t been proven out by either the engine developer themselves or other successful game developers. Unreal Engine of course was born out of the development of Epic’s games Unreal and Unreal Tournament. Developers need to be inspired by the capabilities and art of the game and assured that the engine is battle tested. Even Unity grew out of a failed game called Gooball. Godot was used by the engine creators to ship games for nearly 10 years before the engine was open-sourced.

Therefore, as a GTM strategy and as a way to prove out the engine, we are likely to see the engine developer build the first game (or series of games) on top of the platform. Enabled by AI, doing so should be possible at a fraction of the time and cost it has taken to develop a game over the last decade. The first-party content does not necessarily need to be a smash hit. It just needs to seed the platform and kickstart the creator flywheel until a viral hit (e.g. the Fortnite Battle Royale mode) is found.

This year Epic launched Unreal Editor for Fortnite (“UEFN”) which is a vertical UGC platform around Fortnite. The creation tools abstract a lot of the complexity from the flagship UE. The platform today is mostly a collection of custom training modes or maps for Fortnite Battle Royale with visions to expand out in terms of game types/genres.

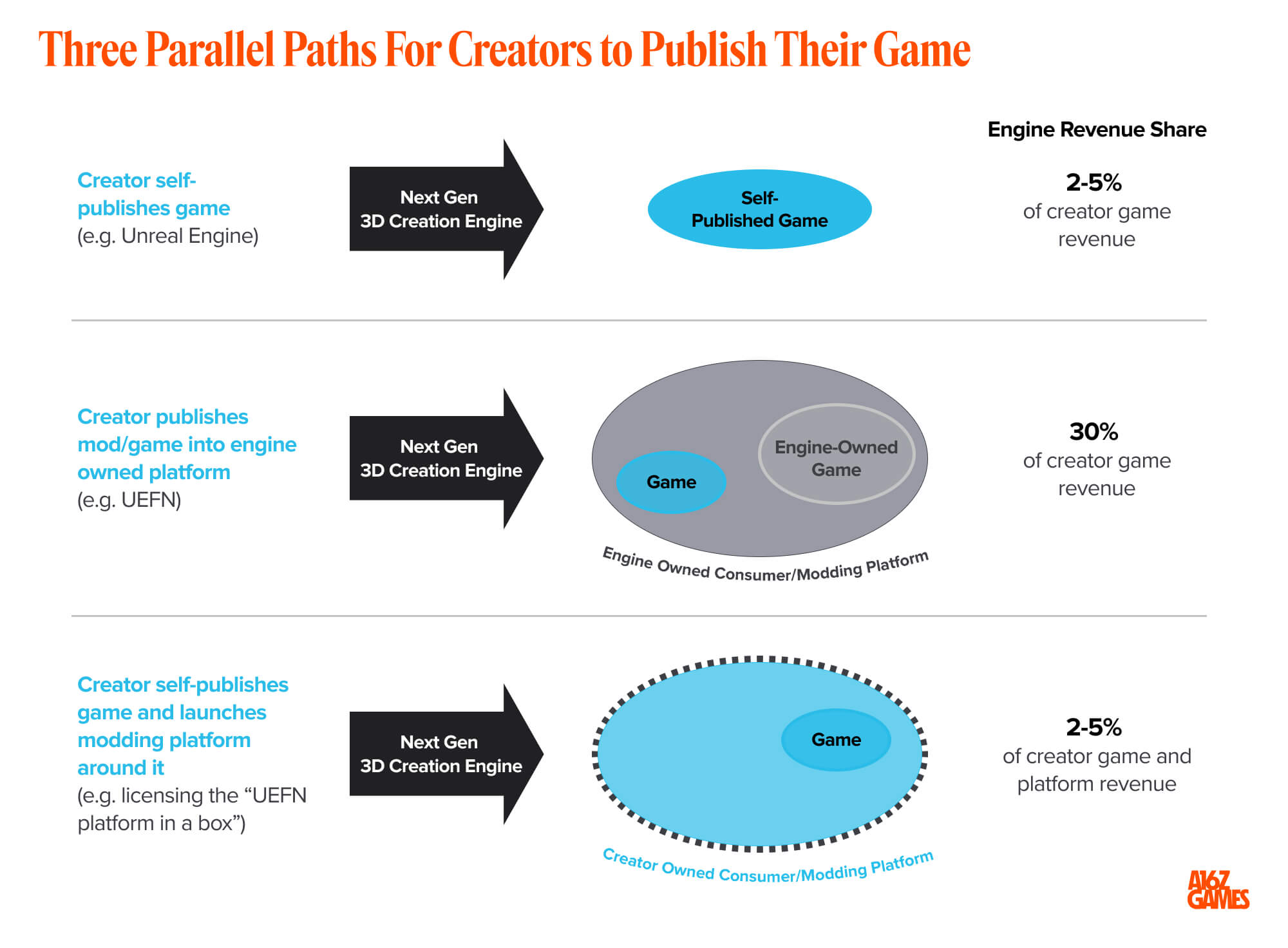

It’s worth noting that today the value of a consumer-facing UGC platform far outpaces that of a game engine. Roblox can safely demand a 30% revenue share whereas UE takes less than 5% (and only 12% in total including the Epic Games Store contribution). The attractiveness of the UGC business model is why Epic’s investment behind UEFN likely now surpasses that of UE alone.

Rather than having two separate creation tool sets (UEFN and UE), however, if technically architected properly from the get-go, a next gen engine could allow a creator to publish a mod/game into the UGC platform or self-publish externally from the same tool. The modular engine could adapt to the skill level and specs of the project. When a Roblox developer “levels up” and is ready to self-publish their own game outside of the platform, they leave the ecosystem and have to go learn Unity or Unreal. With a next gen UGC platform and engine that would not be necessary.

We may see a collection of vertical, genre-focused platforms emerge. Where UEFN best serves lovers of Battle Royale/Third Person Shooter games, you may see an equivalent for Real-Time Strategy, sports, party or 2D games. For example, Pahdo Labs is building an anime ARPG game and creation platform around it. Roboto Games is developing a survival crafting game plus modding platform. Omni Creator Products is building a UGC platform around its sandbox game.

One of the vertical creation platforms we’re likely to see will be around AI native games equipped with AI NPCs alongside asset, voice and sound generation at runtime. Having a flagship game pave the way for how the underlying systems and AI models should best work together will create a great starting place for other creators to design even better games on top. In fact, in some ways we’re already seeing early signs of this with AI Town which is an AI “simulated dollhouse” game with autonomous agents. Creations like Zaranova are building off of the base project framework, which already has an AI agent stack, to create their own game designs off the core experience. Incite Interactive is building a platform to build AI native narrative-based games.

Some of these creation platforms may build the engine from scratch or they may fork an open source engine similar to how The Mirror has built their platform off Godot. Each platform though will need to deeply embrace AI into the creation workflows to make it 10x easier for new creators and kickstart the player to creator flywheel. While it’s unlikely you will see the current crop of professional studios building off these new engines from the start, new studios will emerge who are native to the tools just as we saw on mobile then Roblox and now UEFN.

New Target Platforms

Unity and Unreal will also be unbundled by new engines that best serve new target platforms such as XR or underserved platforms like the 3D web. While Unreal and Unity try to support all platforms to enable cross-platform development, they make performance tradeoffs in the engine for the sake of a one-size-fits-all approach. Both Unity and Unreal (to a greater degree) have heavy runtimes that make it challenging to deliver high-performance 3D experiences to low-powered XR devices and the browser. A built-to-suit engine designed at a low level for these devices would maximize graphics performance. As examples, Ambient.Run looks to support browser games and Wonderland supports WebXR.

We will also see the emergence of immersive/spatial 3D creation applications. It can be painful to develop a spatial computing experience from a 2D screen. Having an authoring experience in XR that is native to the device can be transformative in terms of achieving the full design intent. Certain 3D artistry tools like mesh sculpting feel tailor-made for spatial computing, with gestural inputs more akin to sculpting in the physical world. Volumetrics is building a native 3D authoring platform and engine for spatial apps.

We’ve previously predicted a rise in browser-based games, driven in part by WebGPU, WebAssembly, WebXR, etc., and by the distribution advantages developers enjoy over the app stores, where they face the 30% fee. A more lightweight engine that can reach peak performance in the browser could earn market share.

In tech, there is a constant pendulum swing between unbundling and rebundling. Ultimately, these engine unbundling approaches are market wedges to find soft spots where a new creation tool can provide a 10x improvement over incumbents. Once it gains its edge, it’s likely that a next gen engine could expand its focus/potential over time, similar to how Roblox is now trying to level up to support photorealistic games. The modular engine that underpins a vertical creation platform could expand towards another genre (more easily than UE expanded from shooters to any genre). An XR-focused engine could expand to support cross-platform development.

AI World Generator

Just as 3D creation gets unbundled and streamlined, we will simultaneously see the emergence of new consumer-facing tools that reimagine 3D world building as we know it. These tools will seem so unorthodox that many game devs will initially think they’re “toys,” because that’s exactly what they will be. In fact, the “game” will likely not be in the output but in the creation experience itself.

Imagine taking a single picture or a text prompt and generating a 3D scene on the fly. You step into the scene, potentially with a VR headset, and explore endlessly. See something you don’t like? Reprompt the scene either in part or in whole. Under the hood, as you move, the frames are each generated in real time, not rendered in the traditional computer graphics paradigm.

Today, the closest representation we have of this experience would be static scenes captured using scanning apps like Luma or Polycam, which allow you to step into an enclosed environment you’ve observed in the real world, frozen in time. In recent months, we’ve seen massive technical leaps in generative AI towards this use case. 3D Gaussian Splatting, released in August, was a meaningful improvement over Neural Radiance Field (NeRF) 3D capture methods, offering much faster rendering speeds, more editability and better quality.

Soon, however, new applications will emerge allowing us to make the scenes dynamic, first by reanimating characters captured or dropping new objects into the scene with inpainting. Then, we will be able to expand beyond the captured scene, essentially out-painting and allowing the model to hallucinate an endless perimeter. The models may even begin to understand the laws of physics, which would allow you to actually interact with the environment.

In time, these scenes will be generated not by capturing several hundred images of a desired scene in the real world, but by prompting with a single image or text. Present the model with an image from your childhood and re-enter the world like a time machine.

It is hard to predict all the emergent use cases that are unlocked by this 3D worldbuilding potential. From the consumer side, the act of imaginatively building a world, recreating a memory or exploring a dream-like environment will be groundbreaking. As the tools mature and controllability is refined, professional use cases will develop. From creating a virtual stage for a film to architectural visualizations and walkthroughs, the tools will move from the toybox to the enterprise.

So What Does This All Mean?

The landscape of 3D Creation Engines will change dramatically over the next decade which will in turn alter the face of the entertainment industry at large.

Existing game engines will persist…but only in the near-term

Unity and Unreal will likely maintain their dominance within traditional professional studios early on. They will introduce AI-assisted tooling into their legacy UX. But as these new age creation platforms arise off the back of AI, cloud computing and new hardware, a new generation of professionals will be ushered in, just as we’ve seen in music and video. As the next gen engines mature, even legacy professional studios will adopt them.

Roblox, and now UEFN, have a stronger foothold because of the network effects of their UGC platforms, which speaks to the value of enabling UGC.

We’ll witness a Cambrian explosion in content diversity

As the training and cost to create approach zero, the only limit is one’s imagination, not the technical skills required. With that, we’ll see a Cambrian explosion of new games and interactive entertainment experiences. As we’ve seen in the past, modding and bringing new creators into the ecosystem creates genre-defining games. Blizzard’s StarCraft shipped with a map editor that a modder used to create “Aeon of Strife,” a MOBA-style custom map. That map later inspired “Eul” to create DotA in Warcraft III’s editor, which ultimately led to League of Legends, the iconic MOBA game.

3D Creation Engines should charge a revenue share vs SaaS pricing

We believe Unreal and (now) Unity have paved the way for a future engine to charge a revenue royalty rather than a fixed SaaS fee. A royalty of between 2% and 5% of revenue will likely remain the norm for using a commercial engine and all the services that come with it.

The next gen cloud engine equipped with native modding capabilities will enable the “UEFN platform in a box.” A developer building on the engine can build a game and instantly unlock a modding marketplace where they can benefit from a share of the end creator’s revenue. The engine developer themselves may also see a share of the revenue from the modding platform they power. This would be as if Epic licensed its entire tech platform (i.e. engine, multiplayer stack, economy/payments stack, etc) for UEFN to another developer.

Here’s an illustration of the monetization potential from the engine developer’s perspective:
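As a rough worked version of that arithmetic, the sketch below splits revenue between the engine developer, the game developer, and mod creators. Every number in it is a hypothetical assumption chosen for illustration, not data from this article: the 5% royalty sits at the top of the 2-5% range discussed above, the 30% platform take on mod revenue mirrors the Roblox figure cited earlier, and the 20% share of that take flowing to the engine developer is invented. The `split_revenue` function and `RevenueSplit` type are likewise illustrative names.

```python
from dataclasses import dataclass


@dataclass
class RevenueSplit:
    engine_royalty: float        # royalty on the developer's own game revenue
    engine_mod_share: float      # engine developer's slice of the modding take
    developer_mod_share: float   # game developer's slice of the modding take
    creator_payout: float        # what mod creators keep

    @property
    def engine_total(self) -> float:
        return self.engine_royalty + self.engine_mod_share


def split_revenue(
    game_revenue: float,
    mod_revenue: float,
    royalty_rate: float = 0.05,           # assumption: top of the 2-5% range
    platform_take: float = 0.30,          # assumption: take rate on mod revenue
    engine_share_of_take: float = 0.20,   # assumption: engine's cut of that take
) -> RevenueSplit:
    for rate in (royalty_rate, platform_take, engine_share_of_take):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rates must be between 0 and 1")
    platform_cut = mod_revenue * platform_take
    engine_mod_share = platform_cut * engine_share_of_take
    return RevenueSplit(
        engine_royalty=game_revenue * royalty_rate,
        engine_mod_share=engine_mod_share,
        developer_mod_share=platform_cut - engine_mod_share,
        creator_payout=mod_revenue - platform_cut,
    )


# Hypothetical game: $10M in first-party revenue and $4M in mod revenue.
split = split_revenue(game_revenue=10_000_000, mod_revenue=4_000_000)
print(f"Engine developer total: ${split.engine_total:,.0f}")
```

Under these assumed inputs, the engine developer earns a royalty on the game itself plus a smaller, recurring slice of every mod sold on top of it, which is the "UEFN platform in a box" upside described above.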

Games and film become indistinguishable

Culturally, Hollywood and the games industry have already been colliding, with one of the year’s most popular TV shows (The Last of Us) and movies (The Super Mario Bros. Movie) originating from gaming, while one of the most popular new games of the year was Hogwarts Legacy.

The tech stacks have been merging too with 3D Creation Engines being used for VFX/animation pre visualization, then virtual production and soon final renders – per the original RenderMan vision.

From a production standpoint, soon The Last of Us or Harry Potter may not need separate 3D assets for their movies vs games. Film crews will be able to capture a scene with a camera then use AI and a “virtual camera” to generate the scene from a different vantage point. If they don’t like the cut – how the actor read a line, how the extras moved, etc – the director can fix it in post-production with a text prompt.

From a consumer perspective, Netflix (or a disruptor) will likely ship with a runtime player where we can hit pause at any moment and step into the world of Hogwarts and explore the virtual world. In time, we will even be able to go on our own quests and interact with the AI NPCs we encounter, which will be as lifelike as Hollywood actors.

Endless games and finite attention reshape the landscape

First, known IP will matter more than ever. We can imagine known IP like Harry Potter becoming vertical creator sandboxes for these interactive worlds. Some experiences will employ intentional game design from creators. Creators who want to tell linear stories will also be able to do so within these “virtual sets.” Or, if the consumer wants to explore the wizarding world in an unstructured way to create their own adventures, that option will be available to them too.

Second, content recommendation algorithms will improve. As consumers interact with more content within each platform, the platform will be able to recommend better content based on their interests. While it’s possible 3D Creation Engines will eventually be able to automatically create personalized content, we think it is unlikely this content wins out with players. Human creative innovation will always reign supreme; AI will help bring it to life.

The future is interactive

Everything in the world of gaming and entertainment will change. Any world our imagination takes us to can be depicted on screens.

This will not happen overnight as there are many challenges to overcome: research problems remain unsolved, the compute requirements are tremendous, new business models will need to be invented, and likely most importantly, founders need to discover the right AI native creation UX to balance ease of use with power and depth.

Despite these challenges, this new future of entertainment that only recently seemed incomprehensible is just a few years away, and the future is interactive.


Troy Kirwin is a partner at Andreessen Horowitz and Cohort Lead for a16z speedrun.


Jonathan Lai is a general partner at Andreessen Horowitz, focused on a16z speedrun. He invests in early-stage teams building tomorrow’s AI x creative landscape—from developer tools to 3D simulations, vertical agents, and novel storytelling formats across video, games, audio, and more.