The early discourse on the Generative AI Revolution in Games has largely focused on how AI tools can make game creators more efficient – enabling games to be built faster and at greater scale than before. While this is true, we believe the largest long-term opportunity lies in using AI to change not just how we create games, but the nature of the games themselves.

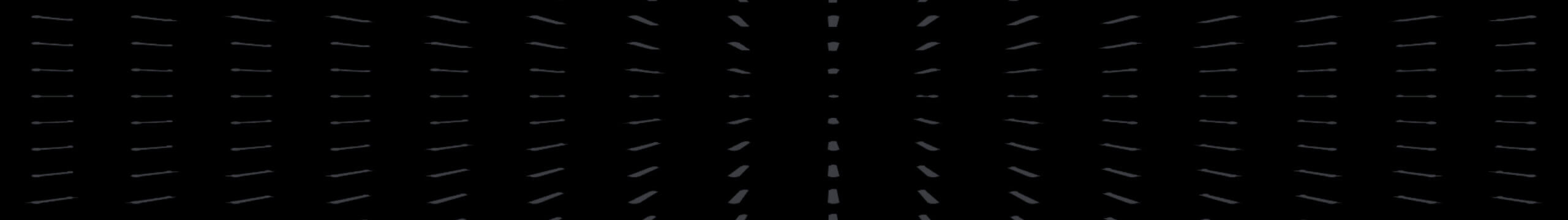

We’re excited about the opportunity for generative AI to help create new AI-first game categories and dramatically expand existing genres. AI has long played a role in enabling new forms of gameplay – from Rogue’s procedurally generated dungeons (1980) to Half-Life’s finite-state machines (1998) to Left 4 Dead’s AI game director (2008). Recent advances in deep learning enabling computers to generate new content based on user prompts and large data sets have further changed the game.

It’s still early, but a few AI-powered gameplay areas we find intriguing are generative agents, personalization, AI storytelling, dynamic worlds, and AI copilots. If successful, these systems could combine to create new categories of AI-first games that entertain, engage, and retain players for a very long time.

Generative Agents

The simulation genre was kicked off in 1989 by Maxis’ SimCity, in which players build and manage a virtual city. Today, the most popular sim game is The Sims, where over 70M players worldwide manage virtual humans called “sims” as they go about their daily lives. Designer Will Wright once described The Sims as an “interactive doll house.”

Generative AI could dramatically advance the simulation genre by making agents more lifelike with emergent social behavior powered by large language models (LLMs).

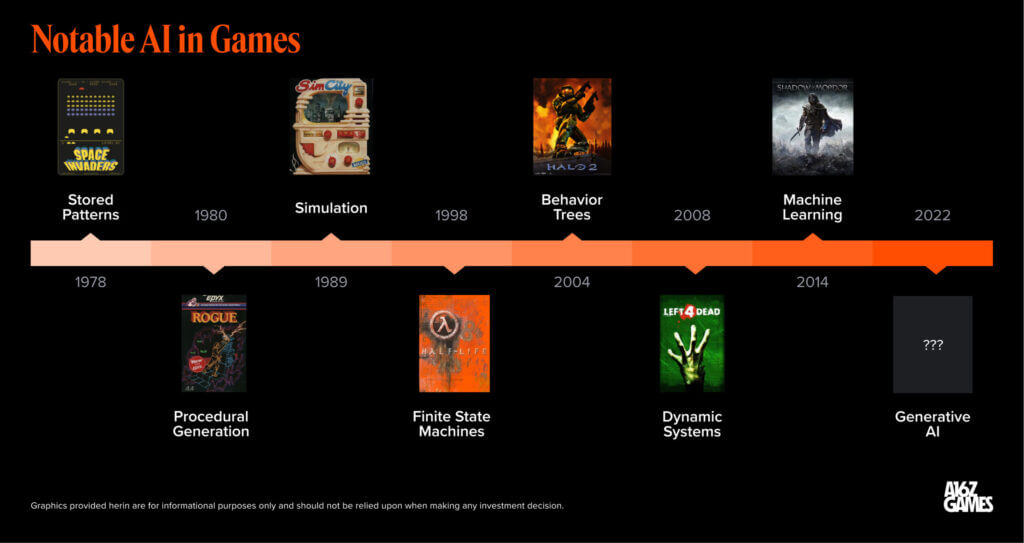

Earlier this year, a team of researchers from Stanford and Google published a paper on how LLMs can be applied to agents inside a game. Led by PhD student Joon Sung Park, the research team populated a pixel art sandbox world with 25 Sims-like agents whose actions were guided by ChatGPT and “an architecture that extends a LLM to store a complete record of the agent’s experience using natural language, synthesize those memories… into higher-level reflections, and retrieve them dynamically to plan behavior.”

The results were a fascinating preview of the potential future of sim games. Starting with only a single user-specified suggestion that one agent wanted to throw a Valentine’s Day party, the agents independently spread party invitations, struck up new friendships, asked each other out on dates, and coordinated to show up for the party together on time two days later.
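The memory architecture quoted above is what lets an agent still "know" about a party days after hearing of it. One way to picture its retrieval step: score every stored memory on recency, importance, and relevance to the current situation, then hand the top few back to the LLM. The sketch below is a simplified, hypothetical version under our own assumptions, not the research team's code: the `Memory` class, the three-number toy embeddings, the hand-assigned importance scores, equal weighting of the three signals, and the 0.995 hourly decay are all illustrative choices. A real system would use an embedding model and ask the LLM itself to rate importance.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    embedding: list[float]   # toy vector; a real system would use a text-embedding model
    importance: float        # 1-10, hand-assigned here (assumption: an LLM would rate it)
    last_access_hour: float  # simulation time (hours) when this memory was last retrieved


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def min_max(values: list[float]) -> list[float]:
    """Rescale values to [0, 1] so the three signals are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # a signal that doesn't vary can't rank anything
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(memories: list[Memory], query: list[float], now_hour: float,
             k: int = 3, decay: float = 0.995) -> list[Memory]:
    """Return the k memories with the highest recency + importance + relevance score."""
    recency = min_max([decay ** (now_hour - m.last_access_hour) for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query) for m in memories])
    scores = [r + i + v for r, i, v in zip(recency, importance, relevance)]
    ranked = sorted(range(len(memories)), key=lambda j: scores[j], reverse=True)
    top = [memories[j] for j in ranked[:k]]
    for m in top:
        m.last_access_hour = now_hour  # retrieving a memory makes it "recent" again
    return top


memories = [
    Memory("Isabella is planning a Valentine's Day party at the cafe", [0.9, 0.1, 0.0], 8, 10.0),
    Memory("Ate breakfast at home", [0.0, 0.2, 0.9], 2, 47.0),
    Memory("Klaus said he would come to the party", [0.8, 0.3, 0.1], 6, 30.0),
]
# Toy query vector standing in for "what's happening at the party?"
top = retrieve(memories, query=[1.0, 0.2, 0.0], now_hour=48.0, k=2)
print([m.text for m in top])
```

Even though breakfast is the most recent memory, the two party-related memories win because they score high on importance and relevance. That trade-off between signals is what keeps agents on-plan rather than fixated on whatever just happened.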

What makes this behavior possible is that LLMs are trained on data from the social web, and thus have in their models the building blocks for how humans talk to one another and behave in various social contexts. And within an interactive digital environment like a sim game, these responses can be triggered to create incredibly lifelike emergent behavior.

The net result from a player perspective: more immersive gameplay. Much of the joy of playing The Sims or the colony sim RimWorld comes from unexpected things happening and weathering the emotional highs and lows. With agent behavior powered by the corpus of the social web, we might see sim games that reflect not just the game designer’s imagination but also the unpredictability of human society. Watching these sim games could serve as a next-generation Truman Show, endlessly entertaining in a way not possible today with pre-scripted TV or movies.

Tapping into our desire for imaginative “doll house” play, the agents themselves could also be personalized. Players could craft an ideal agent based on themselves or fictional characters. Ready Player Me enables users to generate a 3D avatar of themselves with a selfie and import their avatar into over 9k games/apps. AI character platforms Character.AI, Inworld, and Convai enable the creation of custom NPCs with their own backstory, personality, and behavior controls. Want to create a Hogwarts simulation where you are roommates with Harry Potter? Well, now you can.

With their natural language capabilities, the ways we interact with agents have also expanded. Today developers can generate realistic-sounding voices for their agents using text-to-speech models from ElevenLabs. Convai recently partnered with NVIDIA on a viral demo where a player has a natural voice conversation with an AI ramen chef NPC, with dialogue and matching facial expressions generated in real time. AI companion app Replika already enables users to converse with their companions via voice, video, and AR/VR. Down the road, one could envision a sim game where players keep in touch with their agents via phone call or video chat while on the go, and then click into more immersive gameplay when back at their computers.

Note – there are still many challenges to solve before we see a fully generative version of The Sims. LLMs have inherent biases in their training data that could be reflected in agent behavior. Running scaled simulations in the cloud for a 24/7 live service game may not be financially feasible – operating 25 agents over 2 days cost the research team thousands of dollars in compute. Efforts to move model workloads on-device are promising but still relatively early. We’ll likely also need to establish new norms around parasocial relationships with agents.

Yet one thing is clear – there is huge demand today for generative agents. 61% of game studios in our recent survey plan to experiment with AI NPCs. Our view is that AI companions will soon become commonplace, with agents entering our everyday social sphere. Simulation games provide a digital sandbox where we can interact with our favorite AI companions in fun and unpredictable ways. Long-term, the nature of sim games will likely change to reflect that these agents are not just toys, but potential friends, family, coworkers, advisors, and even lovers.

Personalization

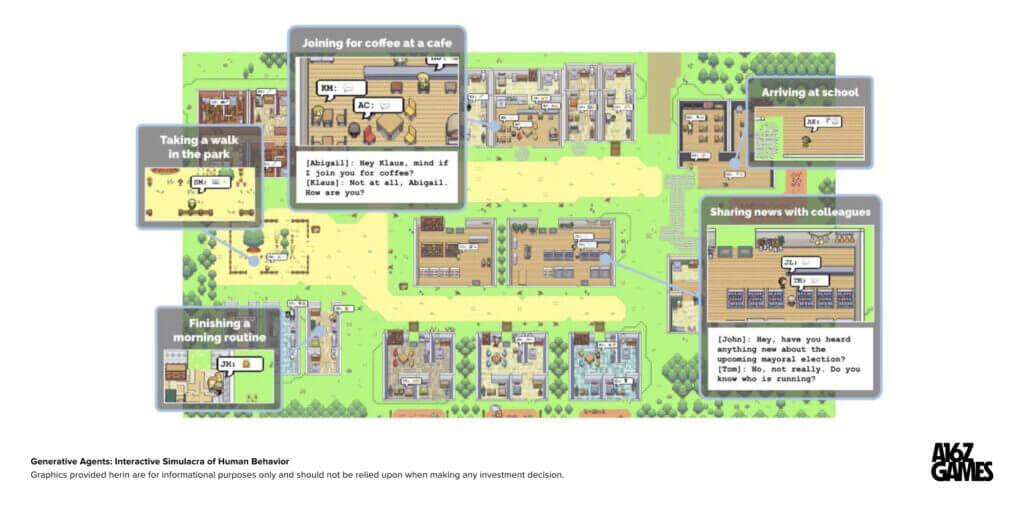

The end goal of personalized gaming is to offer a unique gameplay experience for every player. Take character creation, a pillar of nearly all role-playing games (RPGs), from the original Dungeons & Dragons (D&D) tabletop game to miHoYo’s Genshin Impact. Most RPGs let players choose from preset options to customize appearance, gender, class, and so on. But what if you could go beyond presets and generate a unique character for each player and playthrough? Personalized character builders combining LLMs with text-to-image diffusion models like Stable Diffusion or Midjourney could make this possible.

Spellbrush’s Arrowmancer is an RPG powered by the company’s custom GAN-based anime model. Players in Arrowmancer can generate an entire party of unique anime characters down to the art and combat abilities. This personalization is also part of its monetization system – players import their AI-created characters into custom gacha banners where they can pull for duplicate characters to strengthen their party.

Personalization could also extend toward items in a game. For example, an AI could help generate unique weapons and armor available only to players who complete a specific quest. Azra Games has built an AI-powered asset pipeline to quickly concept and generate a vast library of in-game items and world objects, paving the way for more varied playthroughs. Storied AAA developer Activision Blizzard has built Blizzard Diffusion, a riff on image-generator Stable Diffusion, to help generate varied concept art for characters and outfits.

Text and dialogue in games is also ripe for personalization. Signs in the world could reflect the player achieving a certain title or status (“wanted for murder!”). NPCs could be set up as LLM-powered agents with distinct personalities that adapt to your behavior – dialogue could change based upon a player’s past actions with the agent, for example. We’ve seen this concept executed successfully in a AAA game already – Monolith’s Shadow of Mordor has a nemesis system that dynamically creates interesting backstories for villains based on a player’s actions. These personalization elements make each playthrough unique.
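To make the idea of villains that remember the player concrete, here is a minimal rule-based sketch of a nemesis-style character. It is not Monolith's implementation: the `Nemesis` class, the event names, the rank rule, and the dialogue templates are all hypothetical. In an LLM-powered version, the stored history and traits would be passed into the NPC's prompt instead of filling a fixed template.

```python
from dataclasses import dataclass, field


@dataclass
class Nemesis:
    """A hypothetical villain that remembers its encounters with the player."""
    name: str
    rank: int = 1
    history: list[str] = field(default_factory=list)
    traits: set[str] = field(default_factory=set)

    def record(self, event: str) -> None:
        """Update rank, traits, and history from a player-driven event."""
        if event == "killed_player":
            self.rank += 1  # villains who beat the player get promoted
            self.history.append("struck you down")
        elif event == "fled":
            self.traits.add("cowardly")
            self.history.append("ran from you")
        elif event == "burned":
            self.traits.add("scarred")
            self.history.append("was burned by you")

    def taunt(self) -> str:
        """Build a line of dialogue from the most recent shared history."""
        if not self.history:
            return f"{self.name}: Who are you?"
        line = f"{self.name} (rank {self.rank}): Remember me? I {self.history[-1]}."
        if "scarred" in self.traits:
            line += " I still carry the scars."
        return line


grak = Nemesis("Grak")
grak.record("burned")
grak.record("killed_player")
print(grak.taunt())
# Grak (rank 2): Remember me? I struck you down. I still carry the scars.
```

The same two encounters in a different order, or a different encounter entirely, would produce a different villain and a different line, which is the source of the per-playthrough uniqueness described above.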

Game publisher Ubisoft recently revealed Ghostwriter, a dialogue tool powered by LLMs. The publisher’s writers use the tool today to generate first drafts of background chatter and barks (dialogue snippets during triggered events) that help simulate a living world around the player. With fine-tuning, a tool like Ghostwriter could potentially be used for personalized barking.

From a player perspective, the net impact of all this personalization is two-fold: it increases a game’s immersion and replayability. The enduring popularity of role-playing mods for immersive open world games like Skyrim and Grand Theft Auto 5 is an indicator of latent demand for personalized stories. Even today, GTA consistently sees higher player counts in role-playing servers than in the original game. We see a future where personalization systems are an integral live ops tool for engaging and retaining players over the long run across all games.

AI Narrative Storytelling

Of course, there is more to a good game than just characters and dialogue. Another exciting opportunity is leveraging generative AI to tell better, more personalized stories.

The granddaddy of personalized storytelling in games is Dungeons & Dragons, where one person, dubbed the dungeon master, prepares and narrates a story to a group of friends who each role-play characters in the story. The resulting narrative is part improv theater and part RPG, which means that each playthrough is unique. As a signal of the demand for personalized storytelling, D&D today has never been more popular, with digital and analog products hitting record sales numbers.

Today, many companies are applying LLMs to the D&D storytelling model. The opportunity lies in enabling players to spend as much time as they want in player-crafted or IP universes they love, guided by an infinitely patient AI storyteller. Latitude’s AI Dungeon launched in 2019 as an open-ended, text-based adventure game where the AI plays the dungeon master. Users have also fine-tuned a version of OpenAI’s GPT-4 to play D&D with promising results. Character.AI’s text adventure game is one of the app’s most popular modes.

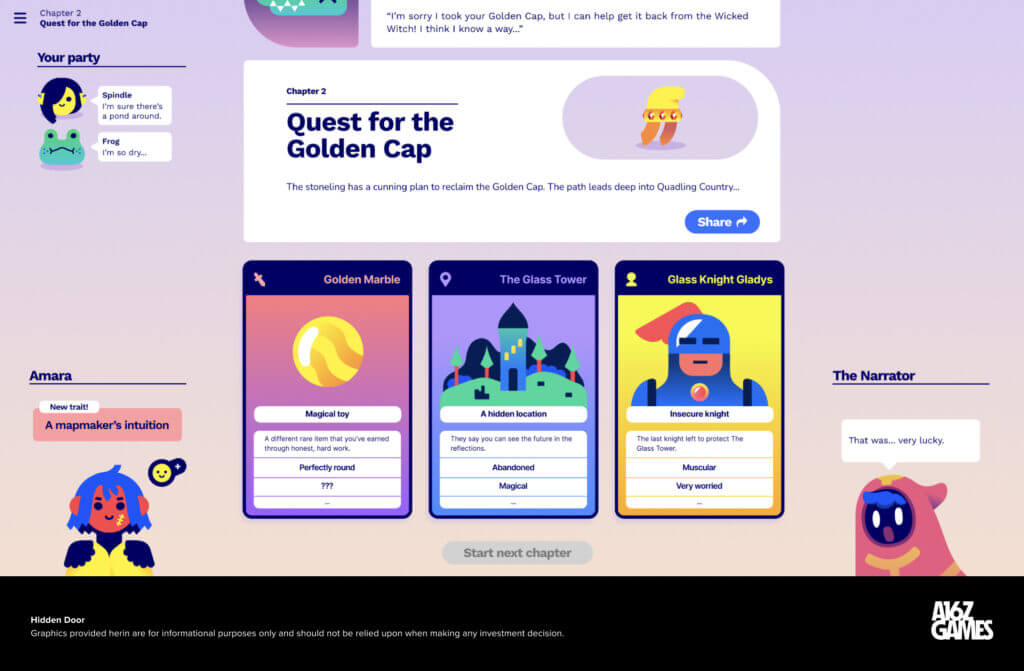

Hidden Door goes a step further, training its machine learning models on a specific set of source materials (for example, The Wizard of Oz) so that players can have adventures in established IP universes. In this way, Hidden Door works with IP owners to enable a new, interactive form of brand extension. As soon as fans finish a movie or book, they can continue adventuring in their favorite worlds with a custom D&D-like campaign. And demand for fan experiences is booming – two of the largest online fanfic repositories, Archive of Our Own (archiveofourown.org) and Wattpad, saw over 354M and 146M website visits, respectively, in May alone.

NovelAI has developed its own LLM, Clio, which it uses to tell stories in a sandbox mode and to help human writers overcome writer’s block. And for the most discerning writers, NovelAI lets users fine-tune Clio on their own body of work or even on the works of famous writers like H.P. Lovecraft or Jules Verne.

It’s worth noting that many hurdles remain before AI storytelling is fully production-ready. An open-ended AI can easily go off the rails, which makes it funny but unwieldy for game design. Building a good AI storyteller today requires a lot of human rule-setting to create the narrative arcs that define a good story. Memory and coherence are important – a storyteller needs to remember what happened earlier in a story and stay consistent, both factually and stylistically. Interpretability remains a challenge for many closed-source LLMs, which operate as black boxes, whereas game designers need to understand why a system behaved the way it did in order to improve the experience.

Yet while these hurdles are being worked through, AI as a copilot for human storytellers is very much here already. Millions of writers today use ChatGPT to provide inspiration for their own stories. Entertainment studio Scriptic uses a blend of DALL-E, ChatGPT, Midjourney, ElevenLabs, and Runway alongside a team of human editors to build interactive, choose-your-own-adventure shows, available today on Netflix.

Dynamic World Building

While text-based stories are popular, many gamers also aspire to see their stories come to life visually. One of the largest opportunities for generative AI in games may be in helping to create the living worlds that players spend countless hours in.

While not feasible today, an oft-stated vision is the generation of levels and content in real time as a player progresses through a game. The canonical example of what such a game could look like is the Mind Game from the sci-fi novel Ender’s Game. The Mind Game is an AI-directed game that adapts in real time to the interests of each student, with the world evolving based on the student’s behavior and any other psychographic information the AI can infer.

Today, the closest analogue to the Mind Game may be Valve’s Left 4 Dead franchise, which uses an AI Director for dynamic pacing and difficulty. Instead of fixed spawn points for enemies (zombies), the AI Director places zombies in varying positions and numbers based on each player’s status, skill, and location, creating a unique experience in each playthrough. The Director also sets the game’s mood with dynamic visual effects and music (translation: it’s super scary!). Valve founder Gabe Newell has described this system as “procedural narrative.” EA’s critically acclaimed Dead Space remake uses a variation of the AI Director system to maximize horror.
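Valve hasn't published its Director code, but the core idea of adapting pressure to each player's state can be shown with a toy pacing loop. In this sketch, which rests entirely on our own assumptions, each player carries a hypothetical "intensity" (stress) score that rises when they take damage and fades over time. The director cycles through build-up, peak, and relax phases, and scales enemy spawns by the team's average health. The `ToyDirector` class, thresholds, and formulas are illustrative, not Valve's.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    BUILD_UP = "build_up"  # ramp up pressure
    PEAK = "peak"          # players are stressed: hold steady
    RELAX = "relax"        # give players a breather


@dataclass
class PlayerState:
    health: float           # 0-100
    intensity: float = 0.0  # hypothetical stress estimate, 0-100


class ToyDirector:
    PEAK_THRESHOLD = 70.0   # intensity that flips the director into PEAK
    RELAX_SECONDS = 30.0    # length of the breather after a peak
    DECAY_PER_SECOND = 2.0  # how fast stress fades when nothing happens
    BASE_SPAWNS = {Phase.BUILD_UP: 8, Phase.PEAK: 3, Phase.RELAX: 0}

    def __init__(self) -> None:
        self.phase = Phase.BUILD_UP
        self.relax_timer = 0.0

    def on_damage(self, player: PlayerState, amount: float) -> None:
        player.health = max(0.0, player.health - amount)
        player.intensity = min(100.0, player.intensity + 1.5 * amount)

    def update(self, players: list[PlayerState], dt: float) -> int:
        """Advance the pacing state machine and return how many enemies to spawn."""
        for p in players:
            p.intensity = max(0.0, p.intensity - self.DECAY_PER_SECOND * dt)
        peak = max(p.intensity for p in players)
        if self.phase is Phase.BUILD_UP and peak >= self.PEAK_THRESHOLD:
            self.phase = Phase.PEAK
        elif self.phase is Phase.PEAK and peak < self.PEAK_THRESHOLD:
            self.phase = Phase.RELAX
            self.relax_timer = self.RELAX_SECONDS
        elif self.phase is Phase.RELAX:
            self.relax_timer -= dt
            if self.relax_timer <= 0:
                self.phase = Phase.BUILD_UP
        # Weaker teams get fewer enemies: scale the phase's base count by average health.
        avg_health = sum(p.health for p in players) / len(players)
        return int(self.BASE_SPAWNS[self.phase] * avg_health / 100)


director = ToyDirector()
team = [PlayerState(health=100.0), PlayerState(health=60.0)]
spawns = [director.update(team, dt=1.0)]       # calm start: BUILD_UP
director.on_damage(team[0], 50.0)              # a big hit spikes player 1's stress
spawns.append(director.update(team, dt=1.0))   # stress over threshold: PEAK
spawns.append(director.update(team, dt=5.0))   # stress fades: RELAX
spawns.append(director.update(team, dt=30.0))  # breather over: back to BUILD_UP
print(spawns)
```

Because the spawn count depends on live player state rather than fixed spawn points, two groups playing the same map get different fights. A production director would also consider location, line of sight, and skill, which this sketch omits.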

While it may appear to be in the realm of science fiction today, it’s possible that one day with improved generative models and access to enough compute and data, we could build an AI Director capable of generating not just jump scares, but the world itself.

Notably, the concept of machine-generated levels in games is not new. Many of the most popular games today, from Supergiant’s Hades to Blizzard’s Diablo to Mojang’s Minecraft, use procedural generation – a technique in which levels are generated algorithmically from equations and rulesets defined by a human designer, with randomness making each playthrough different. An entire library of software has been built to assist with procedural generation. Unity’s SpeedTree helps developers generate virtual foliage, which you might have seen in the forests of Pandora in Avatar or the landscapes of Elden Ring.
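A small example shows the division of labor in procedural generation: the designer writes the rules, and a random seed supplies the variety. The sketch below uses one classic technique, a seeded random walk (often called a "drunkard's walk") that carves floor tiles out of solid rock. It is a generic illustration, not how Hades, Diablo, or Minecraft build their worlds; `generate_level` and its parameters are hypothetical.

```python
import random


def generate_level(width: int, height: int, seed: int, floor_fraction: float = 0.4) -> list[str]:
    """Carve a cave-like level with a seeded random walk ("drunkard's walk").

    '#' is wall, '.' is floor. The designer controls the rules (size, how much
    floor to carve, an enclosing border); the seed supplies the randomness.
    """
    rng = random.Random(seed)  # private generator: same seed -> same level
    grid = [["#"] * width for _ in range(height)]
    interior = (width - 2) * (height - 2)
    target = min(int(width * height * floor_fraction), interior)
    x, y = width // 2, height // 2
    carved = 0
    while carved < target:
        if grid[y][x] == "#":
            grid[y][x] = "."
            carved += 1
        dx, dy = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1)])
        # Clamp to the interior so a solid one-tile border always encloses the level.
        x = min(max(x + dx, 1), width - 2)
        y = min(max(y + dy, 1), height - 2)
    return ["".join(row) for row in grid]


level = generate_level(20, 10, seed=42)
print("\n".join(level))
```

Passing the same seed reproduces the same level exactly (handy for sharing or debugging a run), while a new seed yields a new layout under identical rules.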

A game could combine a procedural asset generator with an LLM in the user interface. The game Townscaper uses a procedural system to take just two player inputs (block placement and color) and turn them into gorgeous town landscapes on the fly. Imagine Townscaper with an LLM added to the user interface, helping players iterate their way to even more nuanced and beautiful creations via natural language prompts.

Many developers are also excited about the potential to augment procedural generation with machine learning. A designer could one day iteratively generate a workable first draft of a level using a model trained on existing levels of a similar style. Earlier this year, Shyam Sudhakaran led a team from the IT University of Copenhagen to create MarioGPT – a GPT-2-based tool that can generate Super Mario levels using a model trained on the original levels of Super Mario 1 and 2. There has been academic research in this area for a while, including a 2018 project to design levels for the first-person shooter DOOM using Generative Adversarial Networks (GANs).
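MarioGPT fine-tunes a GPT-2 language model on level data, but the underlying idea of learning "what tends to come next" from existing levels can be shown with a far simpler stand-in. The sketch below swaps in an order-2 Markov chain over vertical level columns: it records which column followed each pair of columns in the training levels, then samples new levels one column at a time. The tile legend and the tiny training level are made-up toy data, and `train` and `generate` are hypothetical helpers, not MarioGPT's API.

```python
import random
from collections import defaultdict

Level = list[str]  # rows of equal length; one character per tile


def to_columns(rows: Level) -> list[str]:
    """Slice a level into vertical columns (read left to right)."""
    return ["".join(row[i] for row in rows) for i in range(len(rows[0]))]


def train(levels: list[Level], order: int = 2) -> dict[tuple[str, ...], list[str]]:
    """Record which column follows each run of `order` columns in the examples."""
    model: dict[tuple[str, ...], list[str]] = defaultdict(list)
    for rows in levels:
        cols = to_columns(rows)
        for i in range(order, len(cols)):
            model[tuple(cols[i - order:i])].append(cols[i])
    return model


def generate(model, start: list[str], length: int, rng: random.Random) -> Level:
    """Extend `start` one column at a time by sampling from the learned continuations."""
    order = len(start)
    cols = list(start)
    while len(cols) < length:
        options = model.get(tuple(cols[-order:]))
        if not options:
            break  # context never seen in training: stop rather than invent columns
        cols.append(rng.choice(options))  # duplicates in the list act as frequency weights
    return ["".join(col[r] for col in cols) for r in range(len(cols[0]))]


# Toy training level: '-' sky, '?' question block, 'E' enemy, 'X' ground.
example = [
    "----------?-----",
    "----------------",
    "-----E-------E--",
    "XXXXXXXX--XXXXXX",
]
model = train([example], order=2)
new_level = generate(model, to_columns(example)[:2], length=30, rng=random.Random(7))
print("\n".join(new_level))
```

A Markov chain only ever recombines columns it has seen, so it can't follow a prompt like "many pipes, few enemies"; conditioning on text is part of what a language model such as MarioGPT adds.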

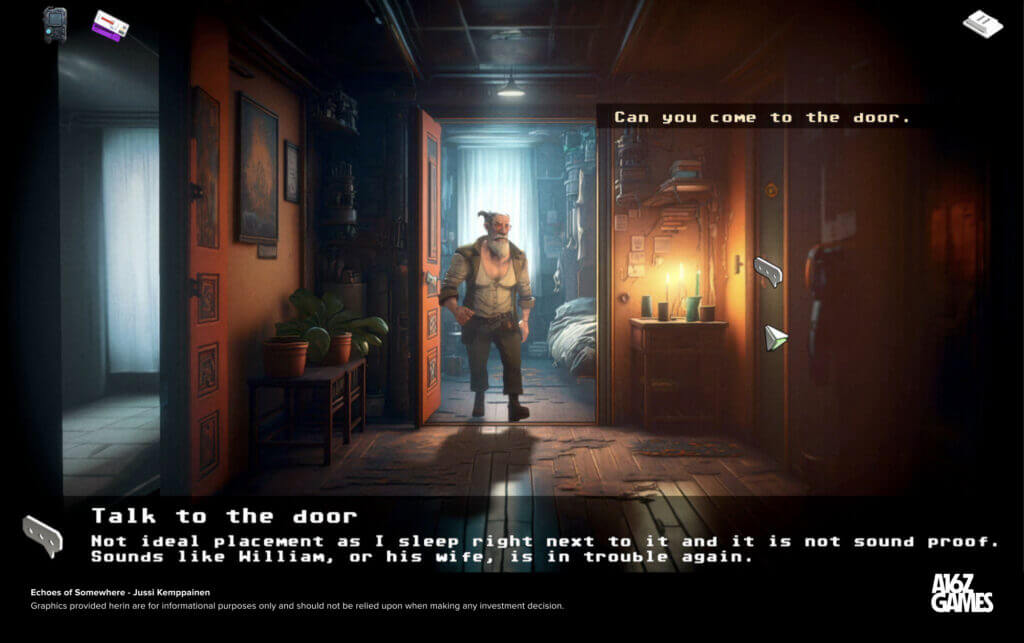

Working in tandem with procedural systems, generative models could significantly speed up asset creation. Artists are already using text-to-image diffusion models for AI-assisted concept art and storyboarding. Mainframe VFX lead Jussi Kemppainen describes in a blog post how he built the world and characters for a 2.5D adventure game with the help of Midjourney and Adobe Firefly.

3D generation is also seeing a great deal of research. Luma uses neural radiance fields (NeRFs) to allow consumers to construct photorealistic 3D assets from 2D images captured on an iPhone. Kaedim uses a blend of AI and human-in-the-loop quality control to create production-ready 3D meshes that are already used by over 225 game developers today. And CSM recently released a proprietary model that can generate 3D models from both video and images.

In the long run, the holy grail is to use AI models for real-time world building. We see a potential future where entire games are no longer rendered, but generated at run-time using neural networks. NVIDIA’s DLSS technology can already generate new higher-resolution game frames on the fly using consumer-grade GPUs. One day, you may be able to click “interact” on a Netflix movie, then step into the world with every scene generated on the fly and uniquely personalized for the player. In this future, games will become indistinguishable from film.

It’s worth noting that dynamically generated worlds on their own are not enough to make a good game, as evidenced by the critical reviews of No Man’s Sky, which launched with over 18 quintillion procedurally generated planets. The promise of dynamic worlds lies in their combination with other game systems – personalization, generative agents, etc. – to unlock novel forms of storytelling. After all, the most striking part of the Mind Game was how it shaped itself to Ender, not the world itself.

An AI Copilot for Every Game

While we previously covered the use of generative agents in simulation games, there’s another emerging use case where the AI serves as a gaming copilot – coaching us on our play and in some cases even playing alongside us.

An AI copilot could be invaluable for onboarding players to complex games. For example, UGC sandboxes like Minecraft, Roblox, or Rec Room are rich environments in which players can build nearly anything they can imagine if they have the right materials and skill. But there’s a steep learning curve and it’s not easy for most players to figure out how to get started.

An AI copilot could enable any player to be a Master Builder in a UGC game – providing step by step instructions in response to a text prompt or image, and coaching players through mistakes. An apt reference point is the LEGO universe’s concept of Master Builders – rare folks gifted with the ability to see the blueprint for any creation they can imagine at time of need.

Microsoft is already working on an AI copilot for Minecraft, which uses DALL-E and GitHub Copilot to enable players to inject assets and logic into a Minecraft session via natural language prompts. Roblox is actively integrating generative AI tools into the Roblox platform, with the mission of enabling “every user to be a creator.” The effectiveness of AI copilots for co-creation has already been proven in many fields, from coding with GitHub Copilot to writing with ChatGPT.

Moving beyond co-creation, an LLM trained on human gameplay data should be able to develop an understanding of how to behave across a variety of games. With proper integration, an agent could fill in as a co-op partner when a player’s friends are unavailable, or take the other side of the field in head-to-head games like FIFA or NBA 2K. Such an agent would always be available to play, gracious in both victory and defeat, and never judgmental. And fine-tuned on our personal gaming history, the agent could be vastly superior to existing bots, playing exactly how we would ourselves or in ways that are complementary.

Similar projects have been run successfully in constrained environments. The popular racing game Forza uses a Drivatar system that applies machine learning to build an AI driver for each human player, mimicking their driving behavior. The Drivatars are uploaded to the cloud and can be called upon to race other players when their human counterparts are offline, even earning credits for victories. Google DeepMind’s AlphaStar learned from human StarCraft II replays and then trained through self-play, with each agent experiencing “up to 200 years” of real-time play, to create agents that could beat professional esports players at the game.

AI copilots as a game mechanic could even create entirely new gameplay modes. Picture Fortnite but every player has a Master Builder wand that can instantly build sniper towers or flaming boulders via prompts. In this game mode, victory would likely be determined more by wand work (prompting) than the ability to aim a gun.

The dream of the perfect in-game AI “buddy” has been a memorable part of many popular game franchises – just look at Cortana from the Halo universe, Ellie from The Last of Us, or Elizabeth in BioShock Infinite. And for competitive games, beating up on computer bots never gets old – from frying aliens in Space Invaders to the comp stomp in StarCraft, which eventually became its own game mode, Co-op Commanders.

As games evolve into next-generation social networks, we expect AI copilots to play an increasingly prominent social role as coach and/or co-op buddy. It’s well-established that adding social features increases a game’s stickiness – players with friends can have up to 5x better retention. We see a future where every game has an AI copilot – following a mantra of “good alone, great with AI, best with friends.”

Conclusion

We’re still early in applying generative AI to games, and many legal, ethical, and technical hurdles need to be solved before most of these ideas can be put into production. Legal ownership and copyright protection of games with AI-generated assets is largely unclear today unless a developer can prove ownership of all the data used to train a model. This makes it difficult for owners of existing IP franchises to use third-party AI models in their production pipelines.

There are also significant concerns over how to compensate the original writers, artists, and creators behind training data. The challenge is that most AI models today have been trained on public data from the Internet, much of which is copyrighted work. In some cases, users have even been able to recreate an artist’s exact style using generative models. It’s still early and compensation for content creators needs to be properly worked out.

Finally, most generative models today would be cost prohibitive to run in the cloud at the 24/7, global scale that modern games operations require. To scale cost-effectively, application developers will likely need to figure out ways to shift model workloads to end-user devices, but this will take time.

Yet what is clear at the moment is that there is tremendous developer activity and player interest in generative AI for games. And while there is also a lot of hype, we are excited by the many talented teams we see in this space, working overtime to build innovative products and experiences.

The opportunity isn’t just in making existing games faster and cheaper, but in unlocking a new category of AI-first games that weren’t possible before. We don’t know exactly what shape these games will take, but we do know that the history of the games industry has been one of technology driving new forms of play. The potential prize is enormous – with systems like generative agents, personalization, AI storytelling, dynamic world building, and AI copilots, we may be on the cusp of seeing the first NeverEnding games created by AI-first developers.