There are many competing visions for how we’ll build the Metaverse: a persistent, infinitely scaling virtual space with its own economy and identity system. Facebook Horizon is an ambitious bet that it will be realized in VR. Epic Games is doubling down on a game-centric approach with Fortnite. But the most exciting part about the Metaverse is not its scope or infrastructure, but its potential to reinvent the way we interact with our friends and loved ones.

New social modalities will emerge in the Metaverse. Advances in cloud streaming and AI will enable new forms of engagement with friends—for example, the ability to pop into a persistent virtual world and discover new people and experiences together, entirely unplanned. This represents a subtle but important shift in how we socialize and play: from purposeful interactions centered around activities, to spontaneous interactions focused on people.

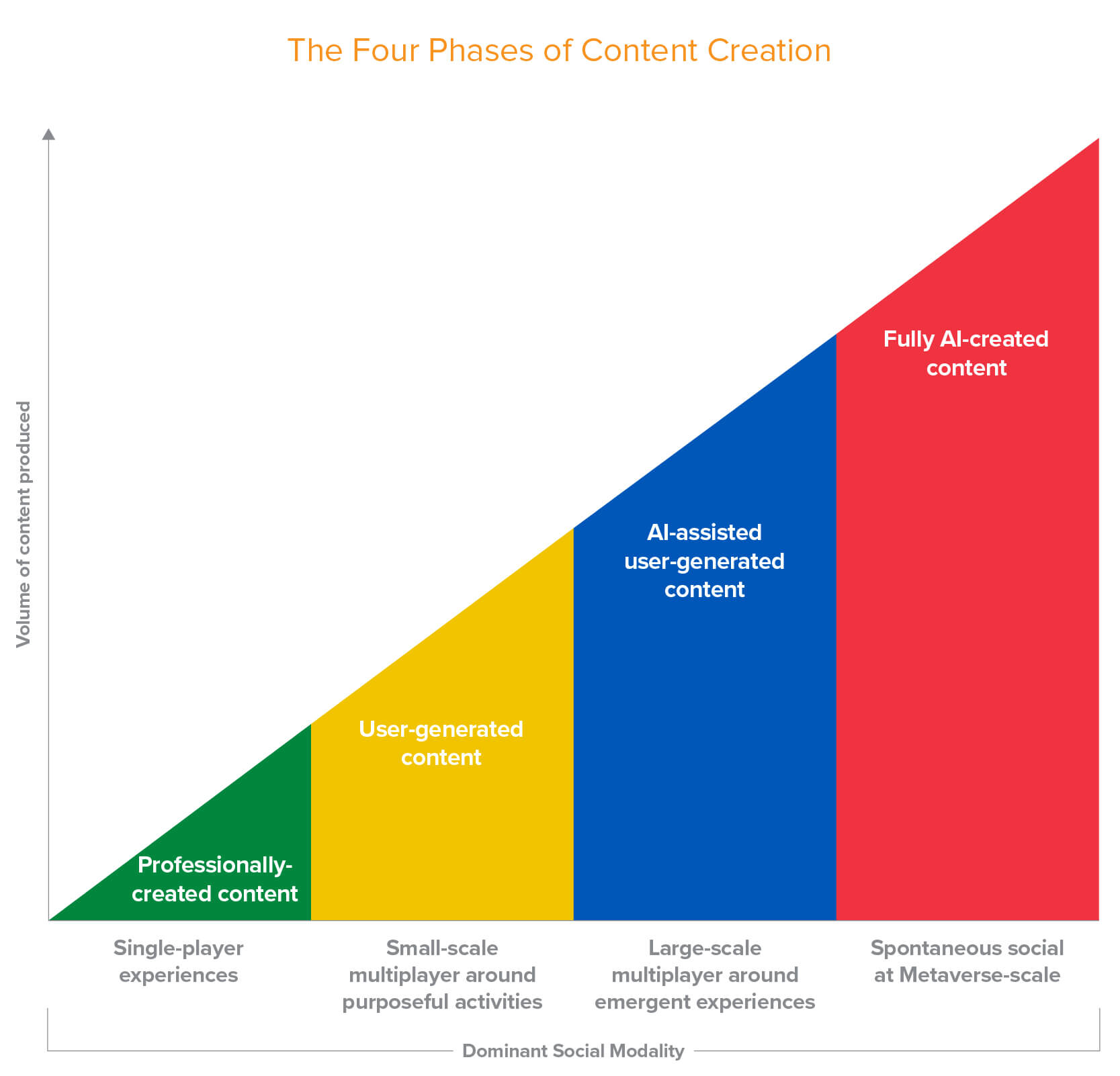

We’ve seen glimmers of these spontaneous interactions already in open-world, sandbox video games like Grand Theft Auto, yet these experiences have been limited by a finite supply of professionally created content. Fully emergent social experiences that model the serendipity of the real world will become the norm through two key trends: the proliferation of user-generated content (UGC) and the evolution of AI into both creator tool and companion. As we adopt new ways of creating content, we will also unlock new types of social experiences.

It takes a world to raise a virtual universe

Today, we’re in phases 1 and 2 of the pyramid above. The majority of popular entertainment across TV, film, music, and games is created by large teams of creative professionals. Most of these products are crafted for a solo audience, meaning the experience changes little when friends join in.

Multiplayer games have done the most to push the boundaries of social entertainment. Games like World of Warcraft and League of Legends have brought millions of players together in shared worlds. Yet even these games have fundamental social limitations. Multiplayer sessions today are limited in scale: even a modern title like Fortnite supports at most 100 concurrent players per server instance. In-game socializing also tends to center on specific activities, such as Fortnite’s battle royale mode or its in-game concerts.

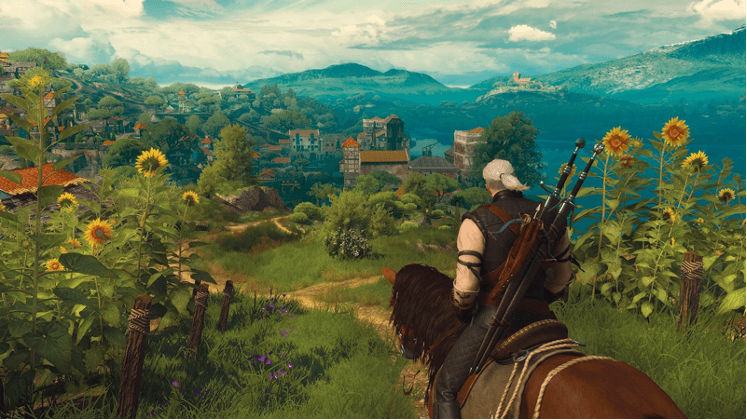

Open-world games such as Grand Theft Auto and The Witcher 3 have introduced unstructured play where players freely explore a virtual world and pursue objectives in any order they choose. Though this is a step toward spontaneous experiences, the scope of these worlds has also been limited by the amount of content a professional team can create.

The Witcher 3 places players in the shoes of a monster hunter in an open world. Source: WCCFtech

In this respect, one of the biggest challenges in building the Metaverse is figuring out how to create enough high-quality content to sustain it. Populating the intricate worlds shown in Ready Player One’s OASIS would take a tremendous amount of content, and producing it through professional development would be staggeringly expensive. The MMO Star Wars: The Old Republic famously cost EA more than $200 million to make and required a team of over 800 people working for six years to simulate just a few worlds within the Star Wars universe. By comparison, a true Metaverse would likely comprise several galaxies of Star Wars-sized virtual worlds.

User-generated content offers a promising solution for cost-effectively scaling content production. Platforms such as YouTube and Twitch have assembled vast content libraries faster and more efficiently than any professional studio. YouTube serves over 1 billion hours of video daily via a community of 31 million channel creators. Twitch’s 6 million creators live-streamed over 10 billion hours of video in 2019. These platforms harness the collective creative energy of their communities to create an endless flywheel of content.

Yet UGC platforms have their own challenges. Maintaining a high quality bar can be difficult, given the sheer volume of content being created. And because users must learn new tools and programming skills, content creators tend to be vastly outnumbered by consumers. YouTube’s 31 million creators represent only about 1.5 percent of its 2 billion monthly users. 3D game engines such as Unity and Unreal are powerful but can be difficult to learn. Unity, for example, is used by only 1.5 million monthly creators today, a fraction of the 2.7 billion gamers worldwide.
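
To make the creator-to-consumer imbalance concrete, the short Python sketch below divides each creator count quoted in the paragraph above by its audience. It uses only the paragraph's own figures and treats all 2.7 billion gamers as Unity's potential creator pool, which is a simplifying assumption rather than a measured statistic.

```python
# Figures as quoted in the text; the Unity comparison assumes every gamer
# is a potential creator (a simplification, not a measured statistic).
youtube_creators = 31_000_000
youtube_monthly_users = 2_000_000_000
unity_monthly_creators = 1_500_000
gamers_worldwide = 2_700_000_000


def creator_share(creators: int, audience: int) -> float:
    """Return creators as a percentage of the total audience."""
    return 100 * creators / audience


print(f"YouTube: {creator_share(youtube_creators, youtube_monthly_users):.2f}%")
# YouTube: 1.55%
print(f"Unity:   {creator_share(unity_monthly_creators, gamers_worldwide):.3f}%")
# Unity:   0.056%
```

By these figures, creators make up roughly one in 65 of YouTube's monthly users, and Unity's monthly creators amount to well under a tenth of a percent of gamers.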

UGC made by a small segment of human creators is a meaningful step forward from professionally created studio content, but it is likely only one side of the coin we need to build the Metaverse and its new social systems.

AI as the co-creator

To enable emergent social experiences inside a Metaverse, similar to how we discover experiences in the real world today, there needs to be a vast increase in both the quantity and quality of user-generated content.

To this end, the next major evolution in content creation will be a shift toward AI-assisted human creation (phase 3). Whereas only a small number of people can be creators today, this AI-supplemented model will fully democratize content creation. Everyone can be a creator with the help of AI tools that can translate high-level instructions into production-ready assets, doing the proverbial heavy lifting of coding, drawing, animation, and more.

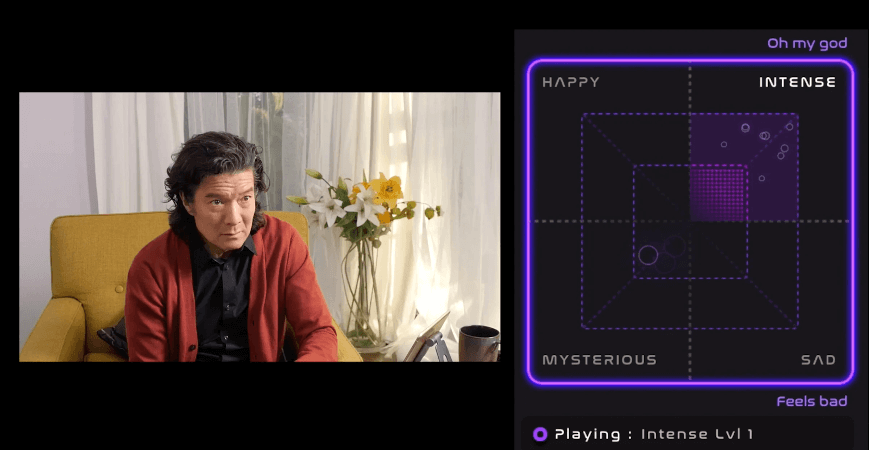

We’re already seeing early glimpses of this in action. Led by Siri co-creator Tom Gruber, LifeScore has built an adaptive music platform that composes music dynamically in real time. After human composers feed a set of musical “source material” into LifeScore, an AI maestro changes, improves, and remixes the music on the fly to lead a performance. LifeScore debuted in May as an adaptive soundtrack for the Twitch interactive TV series Artificial, where viewers could influence the music based on how they felt about plot developments.

Viewers of the Twitch series Artificial can influence the soundtrack in real-time by indicating their emotional state. Source: WIRED

AI creation tools for text are already very powerful. Roblox uses machine learning to automatically translate games developed in English into eight other languages, including Chinese, French, and German. AI Dungeon uses GPT-3 natural language models to understand a script and generate the next few paragraphs, unblocking aspiring writers and D&D dungeon masters alike. And Nara uses AI to create natural-sounding audio stories from text, letting content creators write a story once and publish an audio version to any connected device.

Roblox uses machine learning to automatically translate games developed in English into other languages. Source: Roblox

After text and audio, graphics is the final frontier. AI-driven graphics creation has proven harder than text and audio because graphical assets are far larger and far more computationally demanding. Yet we’re already seeing tantalizing signs of a future where creators will be able to use AI to fully render 3D characters and worlds. Nvidia recently unveiled Maxine, an AI video conferencing platform that uses generative adversarial networks (GANs) to analyze a person’s face and then algorithmically animate it in video calls. This model can enable dynamic effects such as simulated eye contact and real-time speech translation. In an even more ambitious approach, RCT’s Morpheus Engine uses deep reinforcement learning to render text into 3D assets and animations from a library of natural world objects. The video below shows a rendering of “there is a man walking”:

While we’re still in the early stages, AI tools have the potential to democratize content creation and empower human creators to focus on higher-level design and storytelling. Both the quantity and quality of UGC will improve as these tools are adopted. And over time, as AI models improve, the quality of emergent, AI-created content may one day even surpass the caliber of human-created content, paving the way for a shift in how we socialize and spend time in virtual worlds.

Spontaneous social experiences will become the new norm

In an AI-powered Metaverse filled with a near-infinite supply of rich content, the way we socialize will also evolve. With worlds at our fingertips, socializing will shift from the purposeful what to the people-centric who. Instead of making plans around specific entertainment activities, as is frequently the case today, we’ll be free to explore the virtual realm as a shared experience with friends—and trust the AI to fill in the rest.

One analogy for this behavioral model is YouTube. Today you can visit YouTube without knowing exactly which videos you plan to watch. You can take a random “walk” through a nearly endless catalog of user-generated content. In a similar fashion, the Metaverse will be a YouTube for social experiences: a place where we can meet and discover new experiences without an agenda. Seem far-fetched? Teens in the US already spend more time watching YouTube than Netflix.

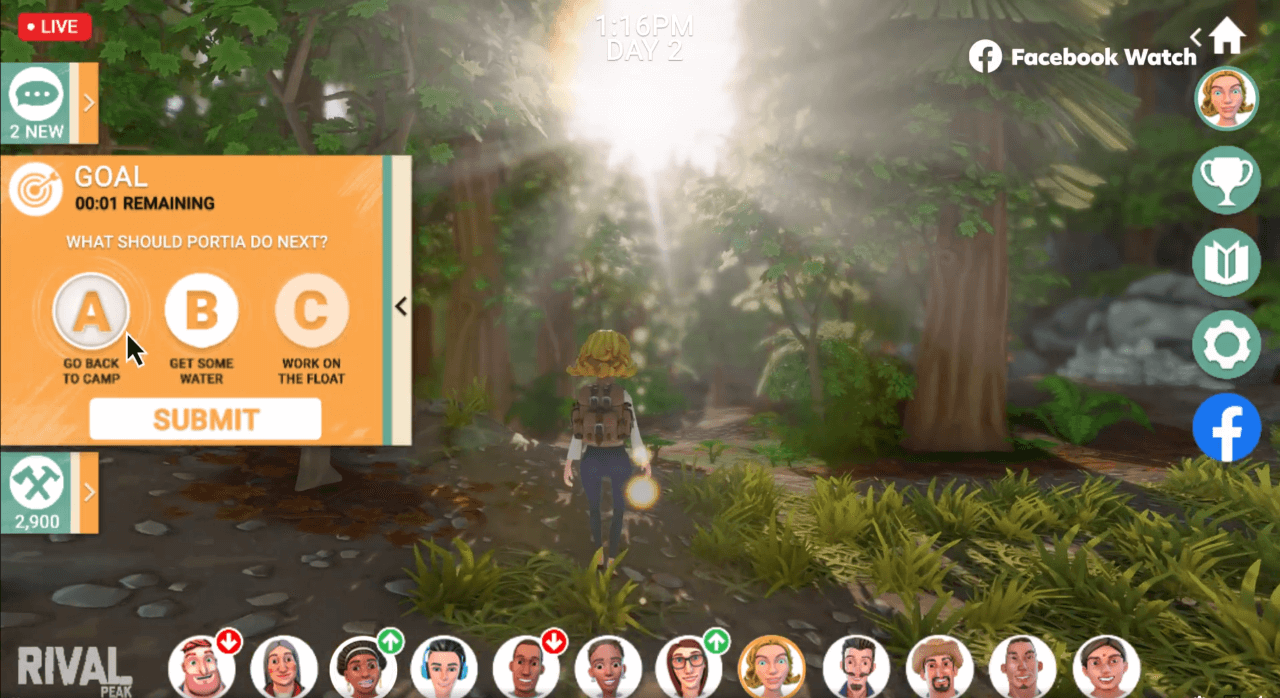

Cloud streaming combined with AI-powered characters will also create new formats of social experiences that are both spontaneous and interactive. Facebook and Genvid recently revealed Rival Peak, an experimental reality TV show featuring AI-powered digital contestants, in which the viewing audience can vote to determine each contestant’s actions in real time.

Rival Peak viewers on Facebook will be able to vote to direct the characters in real time. Source: Facebook
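
As a rough illustration of how audience voting could steer AI-driven contestants, the Python sketch below tallies one round of viewer votes per contestant and picks each contestant's most popular action. This is a hypothetical plurality-vote scheme, not how Facebook or Genvid built Rival Peak; the function name `resolve_round`, the contestant names, the action labels, and the `idle` fallback are all invented for the example.

```python
from collections import Counter


def resolve_round(votes_by_contestant: dict[str, list[str]],
                  default_action: str = "idle") -> dict[str, str]:
    """Hypothetical plurality vote: map each contestant to its winning action.

    Ties go to whichever tied action appeared first in that contestant's
    vote list (Counter preserves first-encountered order for equal counts).
    Contestants with no votes fall back to default_action.
    """
    decisions: dict[str, str] = {}
    for contestant, votes in votes_by_contestant.items():
        if votes:
            decisions[contestant] = Counter(votes).most_common(1)[0][0]
        else:
            decisions[contestant] = default_action
    return decisions


# One hypothetical voting window with invented contestants and actions.
round_votes = {
    "contestant_a": ["explore", "build", "explore"],
    "contestant_b": [],
}
print(resolve_round(round_votes))
# {'contestant_a': 'explore', 'contestant_b': 'idle'}
```

A live show might run something like this once per voting window and pass each winning action to the character's AI, which would then decide how to carry it out. Plurality voting is used here only because it is the simplest rule to follow.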

Westworld is another (albeit considerably more dystopian) example of spontaneous social experiences, where users can drop into a world with friends and experience a unique, personal journey that is different with every visit. In the HBO show, human guests at the park interact with hosts: artificially created humanoid beings programmed with personalities and backstories. The hosts react dynamically to guest behavior, resulting in emergent narratives and a world that evolves in real time based on the cumulative choices of guests.

Our friends themselves will evolve in the Metaverse, as well. Most games today clearly separate human players from non-player characters (NPCs), who act out pre-scripted dialogue. The next evolution of NPCs in the Metaverse will likely be bots powered by AI models with natural language processing and cloud-connected data. Similar to the lifelike hosts in Westworld, a new friend in the future may very well be an AI.

We’re already seeing early previews of AI friendships. Companies like Pandorabots and Replika have created emotionally intelligent AI bots that can serve as a friend or romantic companion. Powered by neural networks, these bots use text messages to chat with human friends, play games, and even write songs and poetry. And in an approach combining art with GPT-3, Fable Studio is developing Lucy, a fully animated AI virtual character that humans will be able to converse with live on a Zoom video call.

Lucy is a fully animated AI virtual character that you can converse with over Zoom, Facebook, and Google Hangouts. Source: Fable

In a world where people feel increasingly lonely, the promise of these AI relationships is in their ability to provide always-available, nonjudgmental emotional support. While such relationships can be ethically complicated, the latent demand is real: over 7 million people use Replika today, for example.

Over time, virtual worlds populated with emotionally intelligent AI characters will be able to create truly lifelike social experiences. Ironically, the final evolution of virtual worlds may actually bring human social behavior back to its IRL roots—enabling serendipitous encounters and ad hoc friendships between strangers (or bots).

* * *

While many challenges remain as we pursue the Metaverse, user-generated content and AI are likely to be fundamental building blocks in constructing this galaxy of interconnected virtual worlds.

Yet the most exciting part about the Metaverse is not how we build it technically, but rather its potential to change the way we socialize with one another. Long-term, as AI takes a greater role in worldbuilding, social interactions in virtual worlds will evolve to more closely resemble the randomness and serendipity of the real world. In the not-too-distant future, our invitation to friends and family may simply be, “Meet me in the Metaverse!”

Everything you thought you knew about social networks is getting reinvented.

Our new series, Social Strikes Back, explores the hyper-social future of consumer tech.