Editor’s note: It’s Summit week at a16z, so each day we’re sharing some of our favorite talks from the last few years. a16z Summit is an annual, invite-only event bringing together thinkers, builders, and innovators to explore and examine the future of tech. Our 2019 theme is “The Future Is Inevitable,” and you can get notified when this year’s talks go live by signing up here.

In this talk from Summit 2017, General Partner Peter Levine explains how cloud computing is shifting toward an edge cloud model. You can watch a video of Peter’s talk or read the transcript with slides below.

I’d like to show you a little bit of what I think the future is going to look like. I’m going to take you out to the edge, no pun intended. I know what you’re all thinking. How can I actually say that cloud computing is coming to an end when it hasn’t really started yet? I think you all think that I’m crazy.

If I had stood up here 20 years ago and told you that Microsoft Windows might go away in the future, or 30 years ago that Digital Equipment Corporation might be out of business, or 15 years ago that Sun Microsystems might not be here, you’d probably put the same slide up here and say, “Boy, that guy, he’s gone crazy. These companies or these technologies will be around forever.”

The Forrest Gump Rule of Investing

Everything that’s popular in technology always gets replaced by something else. The beauty of the business is that things actually go away, and part of our job as investors is to look not at where the puck is today, but where the puck is going to be in the future, typically five or 10 years out. It takes a long time for companies to build up and you want to hit that puck where it intends to be.

It’s actually a very simple exercise that I would encourage you all to do if you ever want to predict the future. Subtract something that’s important today and fill it with something else, and you’ll start to think out of the box, as opposed to sequentially. I believe that if you subtract something, you can actually fill it with other dimensions that you might not think about otherwise.

I call it my Forrest Gump Rule of Investing. It’s so simple: you just subtract something and replace it with something else. About six months ago, I started to think, “Well, what happens when cloud computing goes away? It’s so popular now, how could it go away?” Yet, I think it’s actually happening right under our noses, and let me explain why I think that’s occurring.

Changes in the Cloud

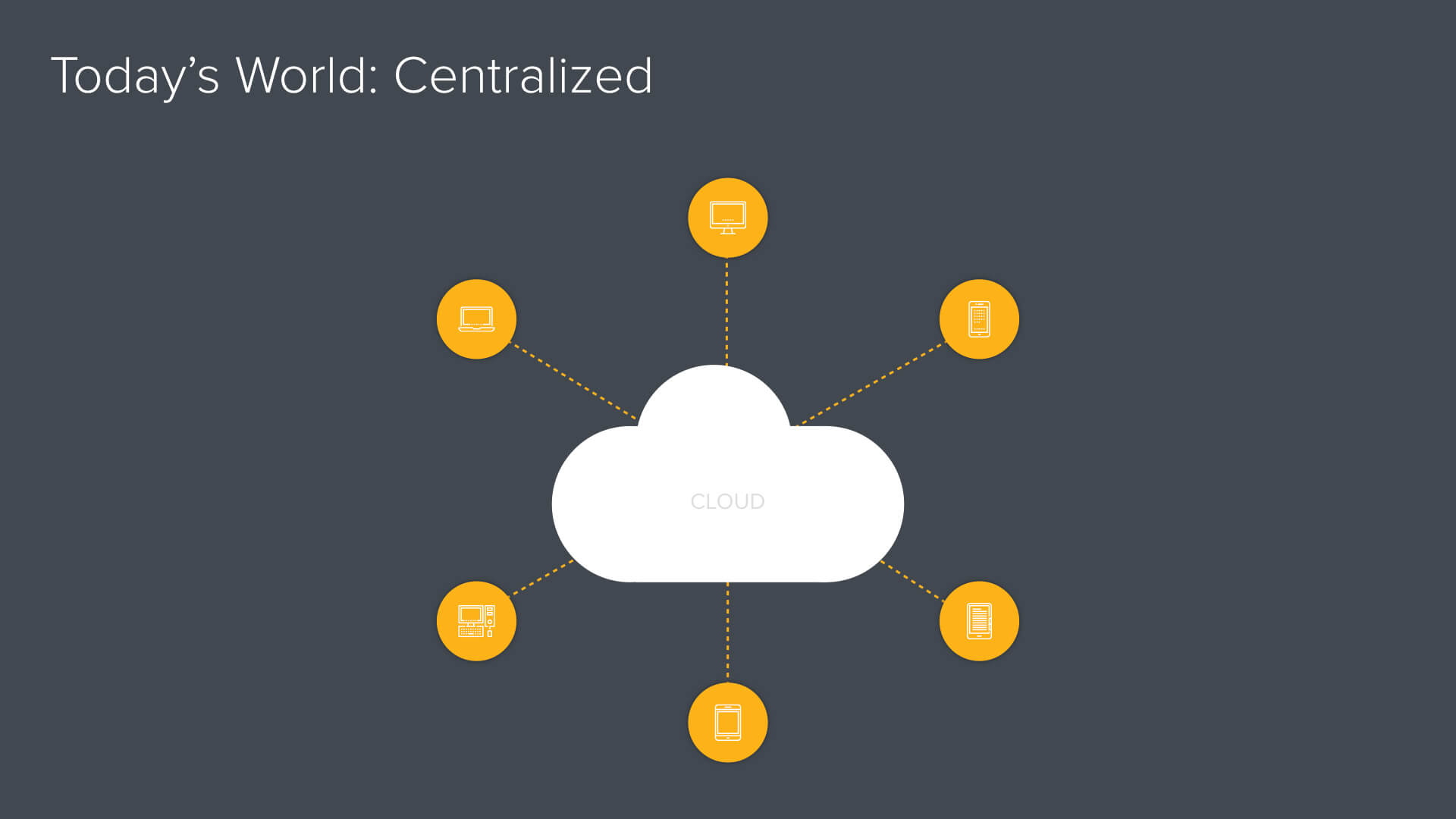

Today’s world is a centralized world, and the central cloud is where all processing gets done. When you do a Google search or type something into your phone, that little stream of information goes back to the cloud, gets processed, and then comes back to your phone. All of that is done centrally. To some degree, your mobile device is a terminal, a vehicle that displays what’s happening in the cloud itself. We’re living in a very centralized model of the world.

When I started thinking about the end of cloud computing, my first thought was, “Well, maybe our mobile devices get more sophisticated.” When I text anyone in this room, my text doesn’t go directly to you. It goes to some data center in Norway and then comes back here.

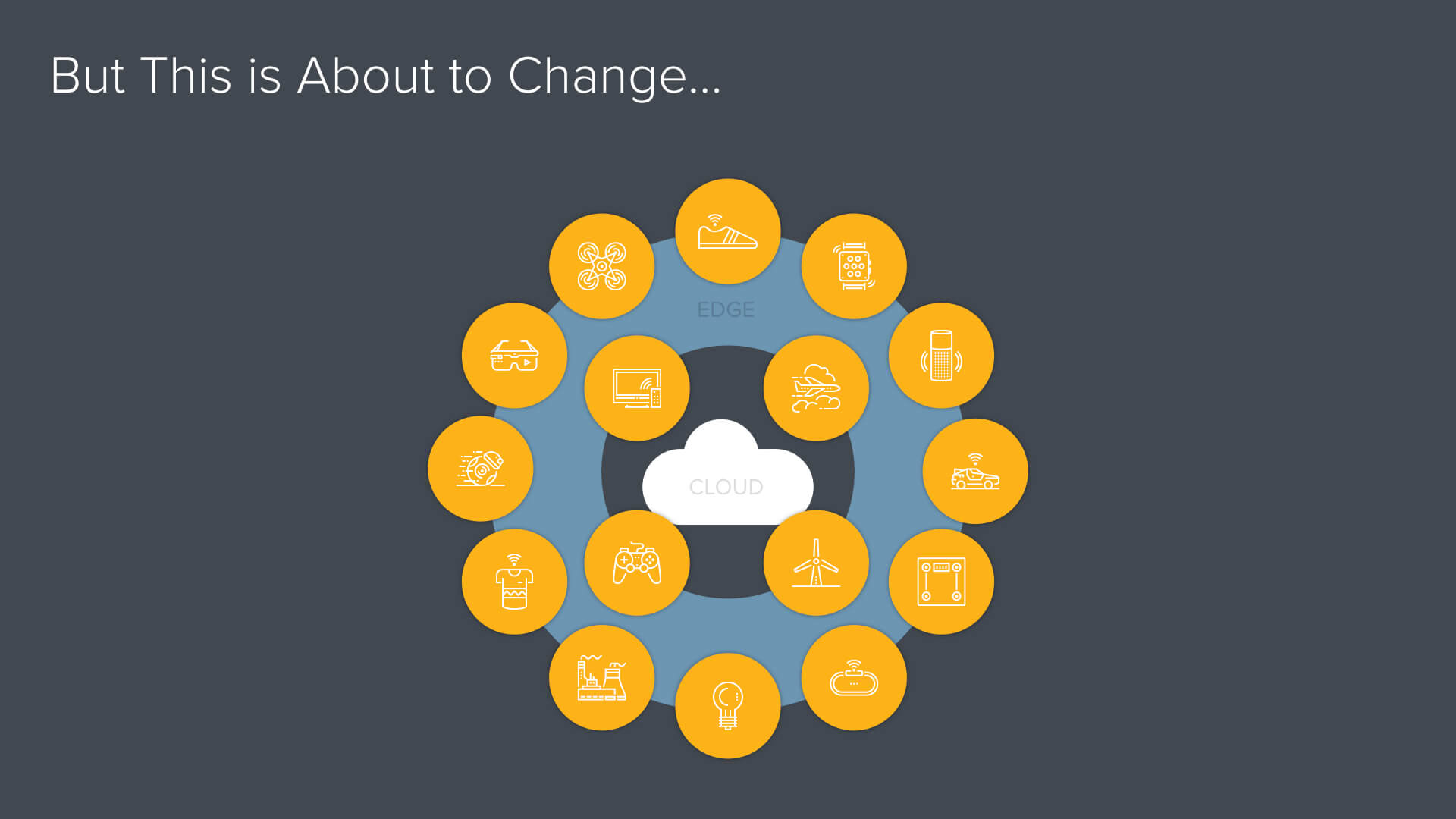

I started to think, “Maybe mobile devices become this next generation and obviate the need for the cloud.” Intellectually, however, this became a cul-de-sac, and I thought, “Well, it isn’t mobile devices but all the other things out at the edge that will truly transform cloud computing and put an end to the cloud as we know it.”

The change is that the edge is going to become a lot more sophisticated. Not with mobile devices but broadly speaking — with the Internet of Things.

I know we hear a lot about drones, robots, and all the Internet of Things objects that will be created over the next 10 years. A self-driving car is effectively a data center on wheels, a drone is a data center with wings, a robot is a data center with arms and legs, a boat is a floating data center, and it goes on and on.

These devices are collecting vast amounts of information, and that information needs to be processed in real time. There isn’t the time for that information to go back to the central cloud and get processed in the same way that a Google search gets processed in the cloud right now. This shift is going to obviate cloud computing as we know it.

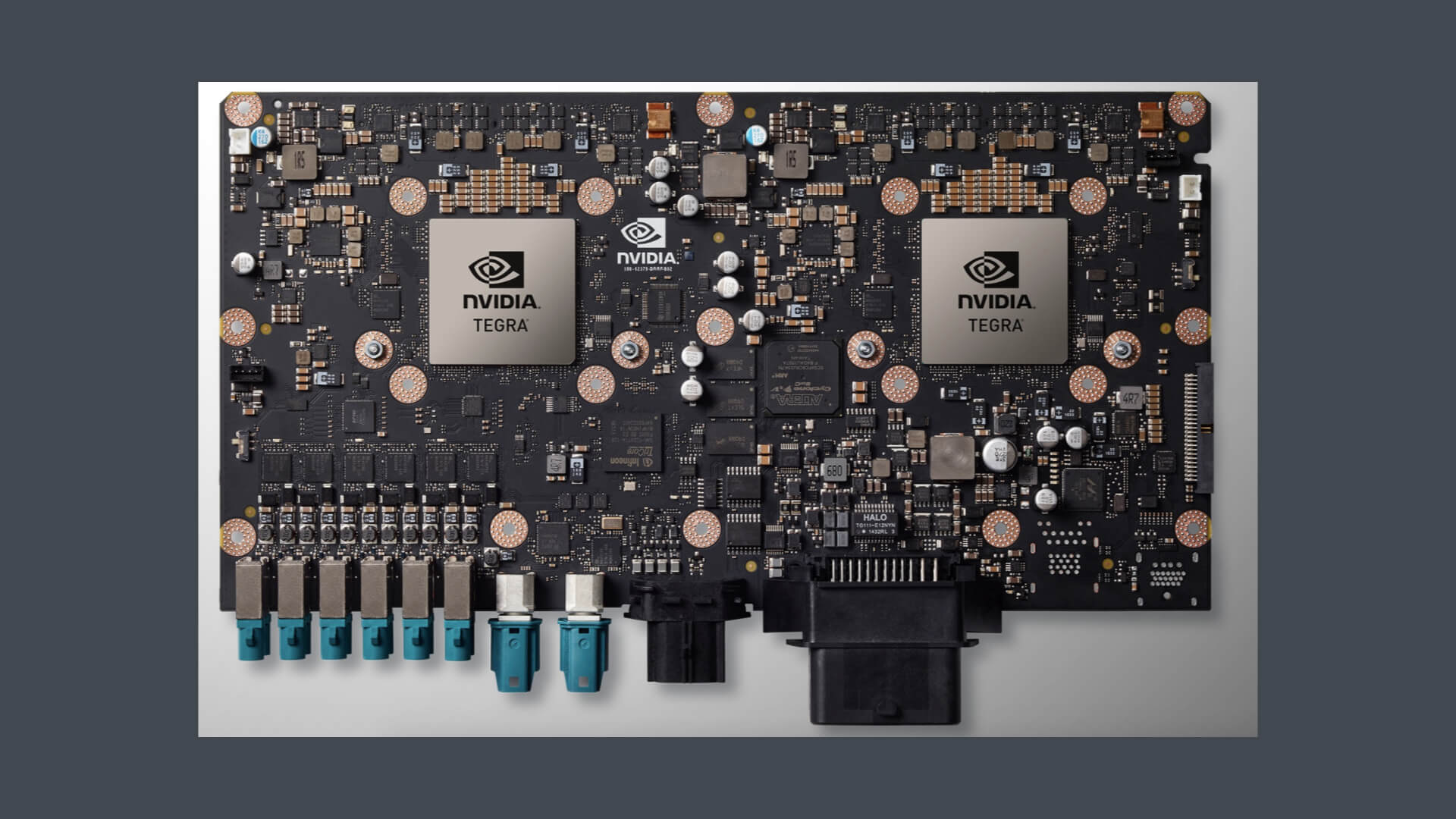

This is a circuit board from the inside of a luxury automobile today, not of a self-driving car. It has about 100 CPUs in it. A self-driving car in the not-too-distant future may have 100 to 200 of these cards. These hundreds of connected computers in a car become a data center. When you then think about connecting thousands of cars together, it becomes a massive distributed computing system at the edge of the network. We are entering the next world of distributed computing, just like we saw in the past. It’s literally “Back to the Future,” with processing done out at these very sophisticated endpoint devices.

“Back to the Future”: Data-Driven Change

It’s interesting to note that it’s really all about the data. For the first time in computing history, we’re collecting real-world data about our environment, whether that’s vision, location, acceleration, temperature, gravity information, etc. We’re collecting the world around us visually through very sophisticated sensors.

Up until now, computing has fundamentally been humans typing things via keyboard, or a computer generating log files or information from a database; but this is the first time we’re collecting the world’s information. The data is massive.

Real-time data processing will need to occur at the edge where real-world information is being collected. Let’s say we’re collecting real-world information on a self-driving car. It’s collecting images, and as part of an image, there’s a stop sign. We take that data and send it off to the cloud to decide whether there was a stop sign or a person crossing the road. The car would have blown through the stop sign and run over 10 people before the cloud came back and said, “Hey, you need to stop.” The notion of real-time becomes a very important ingredient given the massive amounts of real-world information.
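
To put rough numbers on the stop-sign example, the sketch below computes how far a car travels while it waits for a decision. The 30 mph speed and both latency figures (a hypothetical 200 ms cloud round trip versus a hypothetical 20 ms on-board inference) are illustrative assumptions, not measurements from the talk.

```python
# Back-of-the-envelope: how far does a car travel while waiting for a decision?
# All speeds and latencies below are illustrative assumptions, not measurements.

MPH_TO_METERS_PER_SECOND = 1609.344 / 3600  # 1 mile = 1609.344 m


def distance_traveled_m(speed_mph: float, latency_ms: float) -> float:
    """Meters covered at a constant speed during a given decision latency."""
    speed_mps = speed_mph * MPH_TO_METERS_PER_SECOND
    return speed_mps * (latency_ms / 1000.0)


if __name__ == "__main__":
    speed = 30.0  # assumed city driving speed, mph
    # Hypothetical latencies: a cloud round trip (network + queueing + processing)
    # versus inference on the car's own hardware.
    for label, latency in [("cloud round trip", 200.0), ("on-board inference", 20.0)]:
        meters = distance_traveled_m(speed, latency)
        print(f"{label:>20}: {latency:6.0f} ms -> {meters:5.2f} m traveled")
```

At 30 mph the car covers about 2.7 meters during the assumed cloud round trip versus about 0.27 meters with on-board inference; the gap grows linearly with both speed and latency.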

We’re not talking about text. This is about collecting video and other continuous streams of information. Real-time processing and massive amounts of real-world data have to work together, and data will absolutely drive this change.

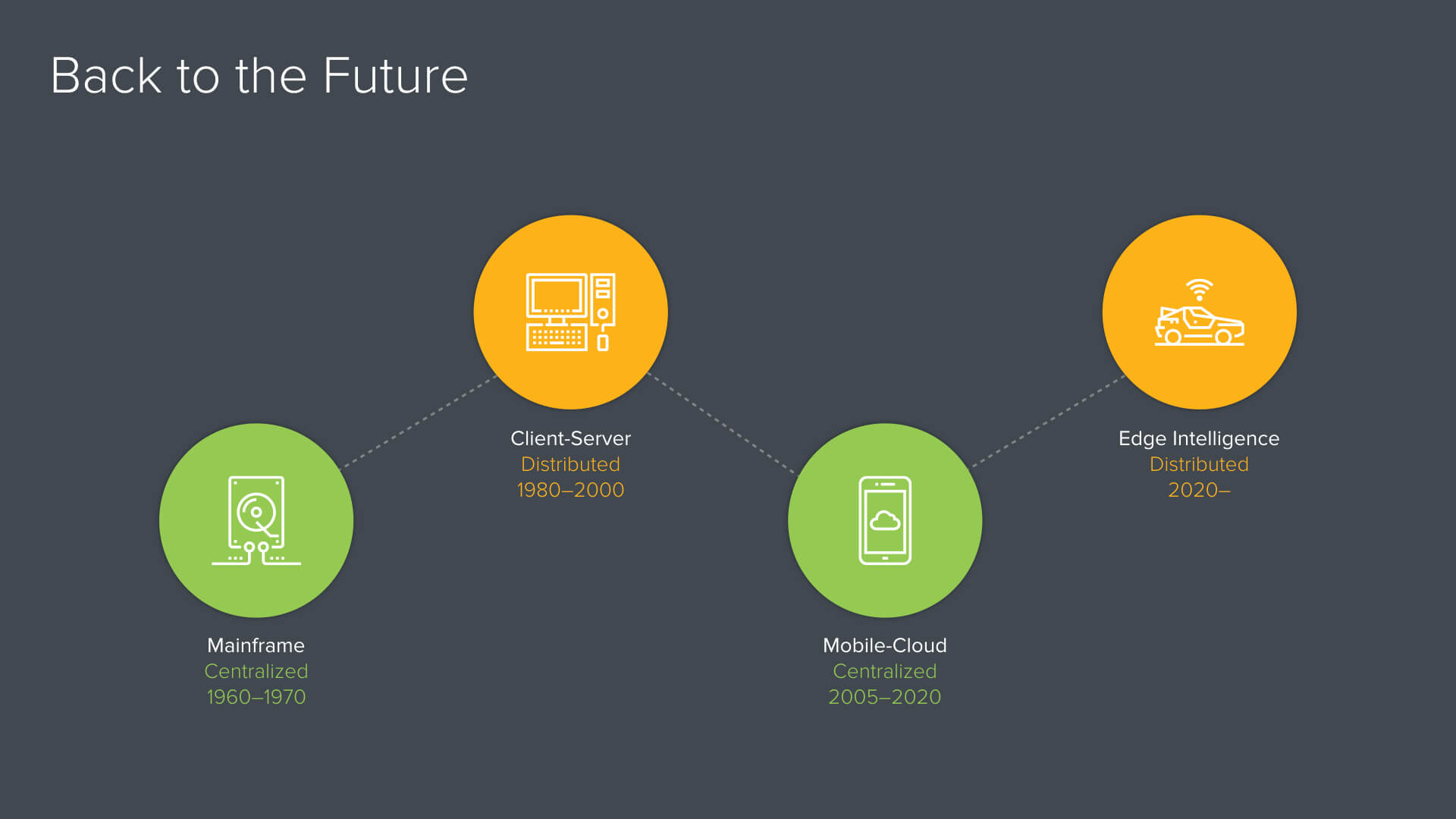

I mentioned distributed computing. We are now returning to “Back to the Future.” When you think about the trends in computing, we started with Mainframes as the beginning of the modern computer era. That was a centralized model. We then moved to a Client-Server model in the ’80s and ’90s and that was a distributed model. Mobile-Cloud brought us back to a centralized model, and believe it or not, we are returning to an Edge Intelligence Distributed computing model. This absolutely follows the trends in computing from centralized to distributed.

Within each of these trends, we have a massive uptake in the number of users and devices that are out there. In the best-case scenario, there were 10,000 mainframes and 1,000 people per mainframe, so that’s about 10 million people max on mainframes. When we moved to PCs, we had about two billion people using them. With each era, the numbers grow by orders of magnitude.

I’ve always thought a total addressable market for computing is the number of humans on the planet. After all, once every human has a mobile device, why do we need more computers?

Well, the Internet of Things actually drives us to an entirely new dimension because computers are no longer attached to humans. Let’s say everyone on the planet has a mobile phone. That makes nearly seven billion phones. I can imagine a world where there will be trillions of devices out there. We’ll soon face the opportunities and challenges of management, security, data, and all of the issues of distributed computing. It’s happening right now with cars and drones, and it will proliferate to other devices in the not-too-distant future.
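
The jump in scale is easier to see in orders of magnitude. This sketch uses the counts quoted above (10,000 mainframes with 1,000 users each, two billion PC users, seven billion phones) plus the talk’s speculative figure of a trillion IoT devices, and compares each to the mainframe era on a log10 scale. The trillion is a projection, not a count.

```python
import math

# Counts quoted in the talk; the IoT figure is a speculative projection.
ERAS = [
    ("mainframe users", 10_000 * 1_000),  # 10,000 mainframes x 1,000 people each
    ("PC users", 2_000_000_000),
    ("mobile phones", 7_000_000_000),
    ("IoT devices (projected)", 1_000_000_000_000),
]


def orders_of_magnitude(count: int, baseline: int) -> float:
    """How many powers of ten separate count from baseline."""
    return math.log10(count / baseline)


if __name__ == "__main__":
    baseline = ERAS[0][1]
    for name, count in ERAS:
        gap = orders_of_magnitude(count, baseline)
        print(f"{name:>24}: {count:>17,} ({gap:.1f} orders of magnitude above mainframes)")
    # Devices per phone once computers are no longer tied to humans.
    print(f"IoT devices per phone: {ERAS[3][1] / ERAS[2][1]:.0f}")
```

Mainframes to PCs is roughly 2.3 orders of magnitude; a trillion devices would sit five orders above the mainframe era, about 143 devices for every phone.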

Machine Learning at the Edge

The other ingredient is the intersection of machine learning and endpoint data that we’re collecting.

I believe that machine learning catalyzes edge adoption. There are massive amounts of real-world information, and it takes a machine learning approach to decipher the nuances of the real world. The only way we can look into an image, or into massive amounts of data, is through machine learning. The algorithms and applications that employ machine learning will run at the endpoint, not in the cloud. So, what does the cloud become in this case?

The cloud becomes the last point of information storage and a place where learning occurs. Important information will still get stored in a centralized cloud but much of the processing in this new world will move out to the edge, and that’s where the most important decisions will get made.

This matters because it turns out that humans are notoriously poor at making decisions. I have a Tesla, and I think that Autopilot, which is a very simple step toward a world of automation, is hugely beneficial. My Autopilot is a better driver than I am, even with all its flaws. I’m a terrible driver because I text and yell at my kids, but Autopilot has none of those issues; it just goes down the road. We can see how data and machine learning can help us more than we can help ourselves.

Within the Edge

Let me take you inside the edge and talk about what’s happening at the edge, and then centrally in the cloud.

At the edge, three things are happening: Sensing, Inference, and Action. There’s a very interesting parallel here with fighter pilots. Colonel John Boyd, a top dogfighter, invented a feedback loop called the OODA loop to train pilots. OODA stands for Observe, Orient, Decide, and Act. The idea was that if he could train a fighter pilot to run that loop in his or her head faster than the opponent, the pilot could win every battle against the enemy. The faster the pilot cycles through the loop, the better the chances of winning.

The OODA loop prioritized agility over power, and this new edge computing paradigm is also about agility over power. The endpoint device is nowhere near as powerful as the cloud, but it can be more agile because the information loop runs locally at the edge, processing just the information it needs. Over time, that loop, which I’ll call the Sense, Infer, and Act loop, will get faster and tighter against new information as processing and machine learning get more powerful. There’s a big parallel between this new world and the frameworks for fighter pilots.
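
As a rough illustration of the Sense, Infer, and Act loop running entirely on the device, here is a minimal sketch. The `Reading` type, the `infer` function, and the 10-meter braking threshold are hypothetical; a simple threshold stands in for the trained machine learning model a real system would run at the Infer step.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Reading:
    """A hypothetical sensor sample: distance to the nearest obstacle, in meters."""
    obstacle_distance_m: float


def infer(reading: Reading, brake_threshold_m: float = 10.0) -> str:
    """Stand-in for an on-device model that turns raw sensor data into a decision."""
    return "brake" if reading.obstacle_distance_m < brake_threshold_m else "cruise"


def sense_infer_act(readings: Iterable[Reading], act: Callable[[str], None]) -> List[float]:
    """Run the loop locally and return each iteration's latency in seconds."""
    latencies: List[float] = []
    for reading in readings:          # Sense: take the next sample from the sensor
        start = time.perf_counter()
        decision = infer(reading)     # Infer: extract what matters from the raw data
        act(decision)                 # Act: respond immediately, with no cloud round trip
        latencies.append(time.perf_counter() - start)
    return latencies


if __name__ == "__main__":
    actions: List[str] = []
    samples = [Reading(50.0), Reading(25.0), Reading(8.0)]
    latencies = sense_infer_act(samples, actions.append)
    print(actions)  # ['cruise', 'cruise', 'brake']
    print(f"slowest iteration: {max(latencies) * 1e6:.1f} microseconds")
```

Nothing in the loop waits on the network, so tightening it in practice means making the Infer step faster and more accurate.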

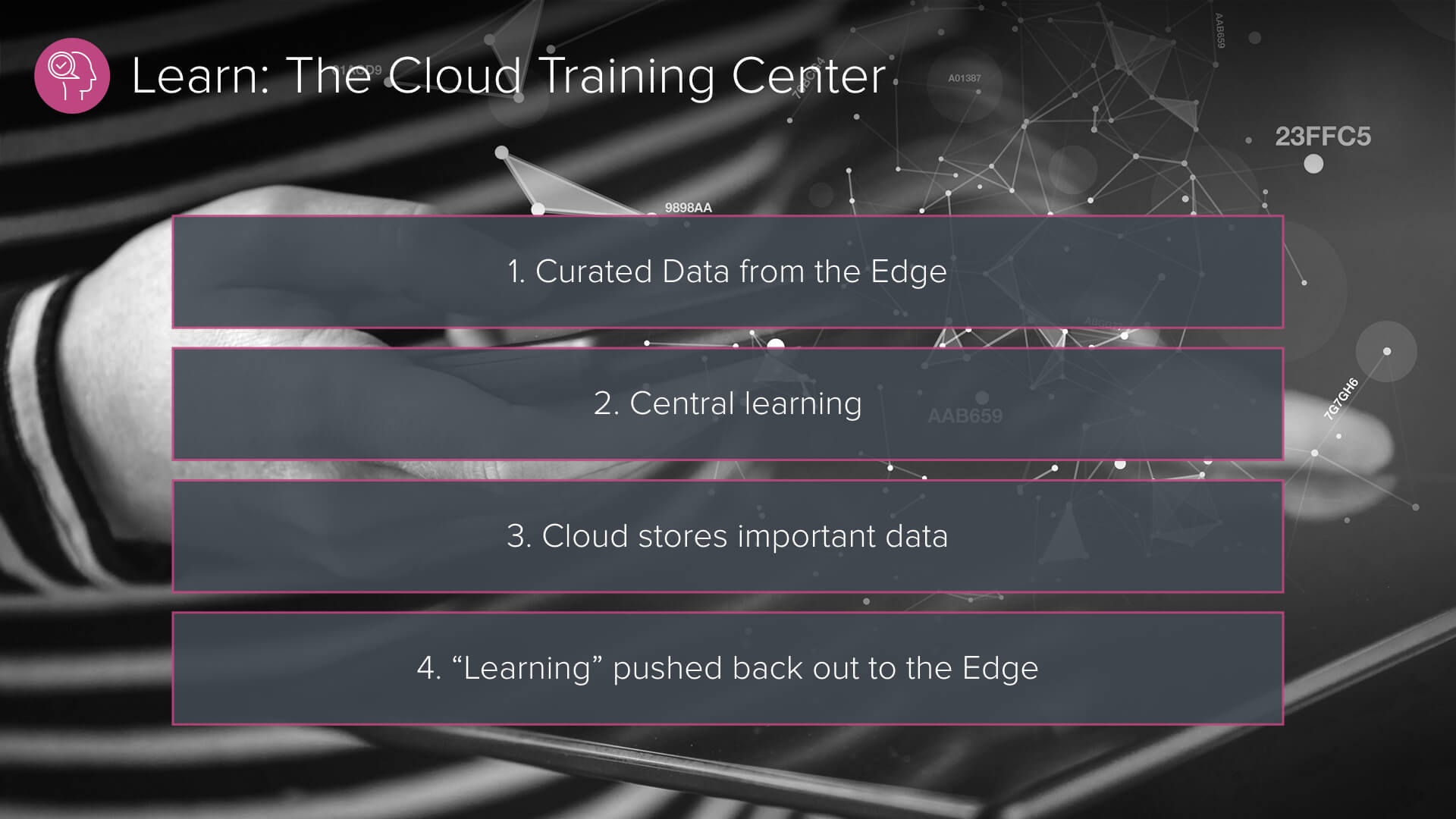

Meanwhile, the cloud is going to be all about central learning. I’m going to take all of this information that I have out at the edge and connect these devices. I’ll curate the information, while learning occurs centrally, and then propagate that information back to each endpoint device, creating an ever tighter, more agile loop that happens at the edge.

Within the Edge: Sense

The first is Sense. Sensors, including cameras, depth sensors, radar, and accelerometers, will be everywhere, generating massive amounts of information. A self-driving car generates about 10 gigabytes of data per mile. That’s a lot of data. A Lytro camera, which is a data center in a camera, generates 300 gigabytes of data a second. Sensors will be in just about everything, not just complex devices like a car or a camera.

I put a picture of a running shoe here because in the not-too-distant future, we will have sensors in running shoes. The sensor will collect information and a machine learning algorithm with a very fast loop will run so that when you’re running, your shoe will indicate, “Hey, you’re going up a hill. You should shorten your stride,” or “You’re not doing as well as yesterday,” or “You should pack it up for today and go home and rest.” Simple devices like a running shoe can generate a lot of information on the world around it.

The data collected is extremely valuable, but there is also far too much of it to push back to the cloud every time. Imagine if a car had to push 10 gigabytes of data back to the centralized cloud for every mile it drove. That processing won’t happen in the cloud; it has to be done at the edge.
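
A quick calculation shows why. Taking the talk’s figure of 10 gigabytes per mile and assuming, for illustration, a steady 60 mph highway speed, this sketch computes the sustained uplink a single car would need to ship everything to the cloud.

```python
GB = 10**9  # decimal gigabytes, as network and storage figures are usually quoted


def required_uplink_gbps(gb_per_mile: float, speed_mph: float) -> float:
    """Sustained bandwidth (gigabits per second) needed to upload all sensor data."""
    bytes_per_hour = gb_per_mile * GB * speed_mph
    bits_per_second = bytes_per_hour * 8 / 3600
    return bits_per_second / 1e9


if __name__ == "__main__":
    # 10 GB/mile is from the talk; 60 mph is an assumed highway speed.
    print(f"{required_uplink_gbps(10, 60):.2f} Gbit/s per car, sustained")
    print(f"{10 * 60} GB uploaded per hour of driving")
```

That is roughly 1.33 Gbit/s of continuous upload per car, or 600 GB for every hour of driving, before multiplying by thousands of cars.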

Within the Edge: Infer

The second is Inference. The data being collected at the edge is highly unstructured; it’s the first time we’re seeing such massive amounts of unstructured and highly variable data. Inference occurs when machine learning extracts relevance from the data itself, whether it’s a stop sign, a person, a tree, or your running cadence. This gives us powerful task-specific recognition, which requires training and data to keep the loop getting ever tighter.

Within the Edge: Act

Action is the final element after we Sense and Infer. Safety-critical edge systems will need to make decisions on their data in real time; waiting for a response from the cloud will simply be too slow.

I was around when the world entered the first wave of distributed computing, and it was exactly the same argument. If I had a workstation on my desk and could do processing at the edge, I didn’t have to wait for information to go back to the centralized server. The data accumulates at the edge, and the processing stays near the data. As IoT devices get more sophisticated and intelligent, their sensors and computing power will increase. We’re witnessing the beginning of it right now. In the future, there will be more sensors and more machine learning algorithms, and the data being collected will keep growing in volume and complexity.

Within the Cloud: Learning

So what happens to the cloud? The cloud becomes a training center for all of this information. One of the fundamental aspects of machine learning is that it needs lots of data in order to learn. In this new Edge Cloud model, large amounts of data flow into a centralized repository, and the models get smarter on that information. We may have hundreds of thousands of automobiles collecting information, and that information will be curated at the edge. Not all information comes back. The cloud will store only the important information, and then the learning will propagate back to the edge devices.
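
The curate, learn centrally, and propagate cycle can be sketched in a few lines. Everything here is an illustrative assumption rather than how any real fleet works: the “model” is a single decision threshold, an edge device uploads only the samples that fall near that threshold (the ones it is least sure about), and central training is a brute-force search for the threshold with the fewest errors. `EdgeDevice`, `central_learning`, and `training_round` are hypothetical names; a production system would train a neural network on far richer data.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# A hypothetical labeled sample: (sensor score, label), where label 1 means "obstacle".
Sample = Tuple[float, int]


@dataclass
class EdgeDevice:
    threshold: float  # current model from the cloud: score >= threshold -> obstacle
    margin: float = 1.0  # how close to the threshold counts as "uncertain"
    buffer: List[Sample] = field(default_factory=list)

    def observe(self, sample: Sample) -> None:
        score, _ = sample
        # Curate at the edge: keep only the samples the current model is unsure about.
        if abs(score - self.threshold) <= self.margin:
            self.buffer.append(sample)

    def upload(self) -> List[Sample]:
        batch, self.buffer = self.buffer, []
        return batch


def central_learning(samples: List[Sample], current: float) -> float:
    """Choose the candidate threshold that misclassifies the fewest stored samples."""
    if not samples:
        return current

    def errors(t: float) -> int:
        return sum((score >= t) != bool(label) for score, label in samples)

    return min((score for score, _ in samples), key=errors)


def training_round(cloud_store: List[Sample], devices: List[EdgeDevice], current: float) -> float:
    for device in devices:
        cloud_store.extend(device.upload())  # only curated data reaches the cloud
    new_threshold = central_learning(cloud_store, current)
    for device in devices:
        device.threshold = new_threshold  # propagate the learning back to every edge device
    return new_threshold


if __name__ == "__main__":
    cars = [EdgeDevice(threshold=5.0), EdgeDevice(threshold=5.0)]
    for s in [(4.2, 0), (4.5, 1), (9.0, 1)]:
        cars[0].observe(s)
    for s in [(5.5, 1), (1.0, 0), (4.0, 0)]:
        cars[1].observe(s)
    store: List[Sample] = []
    print(training_round(store, cars, current=5.0))  # 4.5
    print(len(store))  # 4
```

In the example run, the cars discard the clear-cut readings (9.0 and 1.0), so only four of six samples reach the cloud, and the retrained threshold of 4.5 is pushed back to both cars.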

That’s how things are going to get smarter. The cloud does have a purpose and won’t completely go away. SaaS applications will continue to run in the cloud, but this new model will take computing to a whole new level.

Opportunities and Challenges in the New Edge Cloud

Here are some predictions of what happens from this point forward.

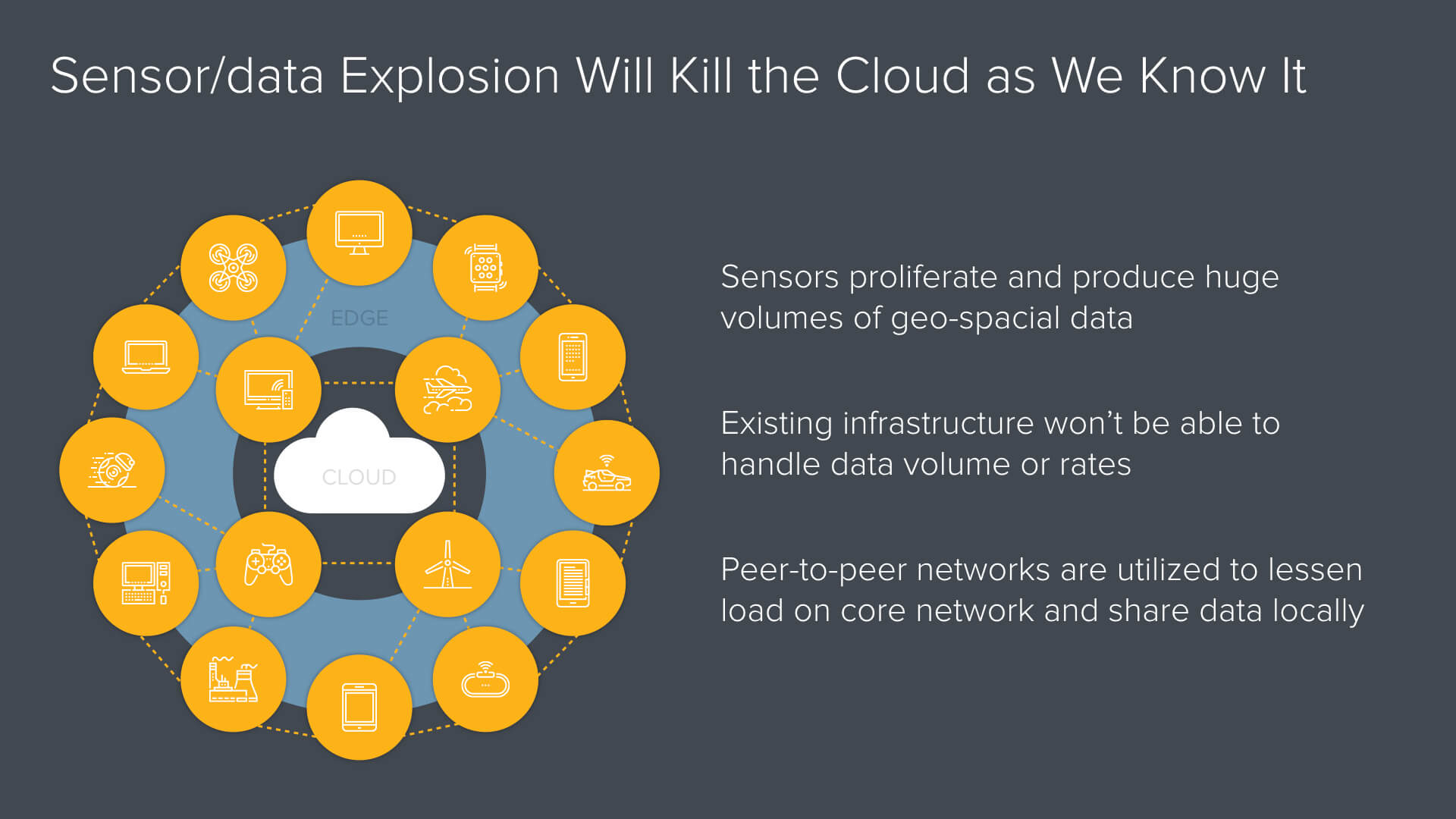

Sensor data explosion will kill the cloud. Sensors will produce massive amounts of data, and the existing infrastructure will not be able to handle the volumes or the rates. The data will be stuck at the edge, and computing will move out to the edge along with it. We are absolutely going to return to a peer-to-peer computing model, where edge devices connect to one another, creating a network of endpoint devices not unlike the distributed computing model.

This will impact a variety of things, including networking and security. The security challenges of data collection, and the networking challenges of connecting trillions of peer-to-peer devices that communicate and process information together without a centralized information pool, will be both the opportunity and the challenge of this next era of computing.

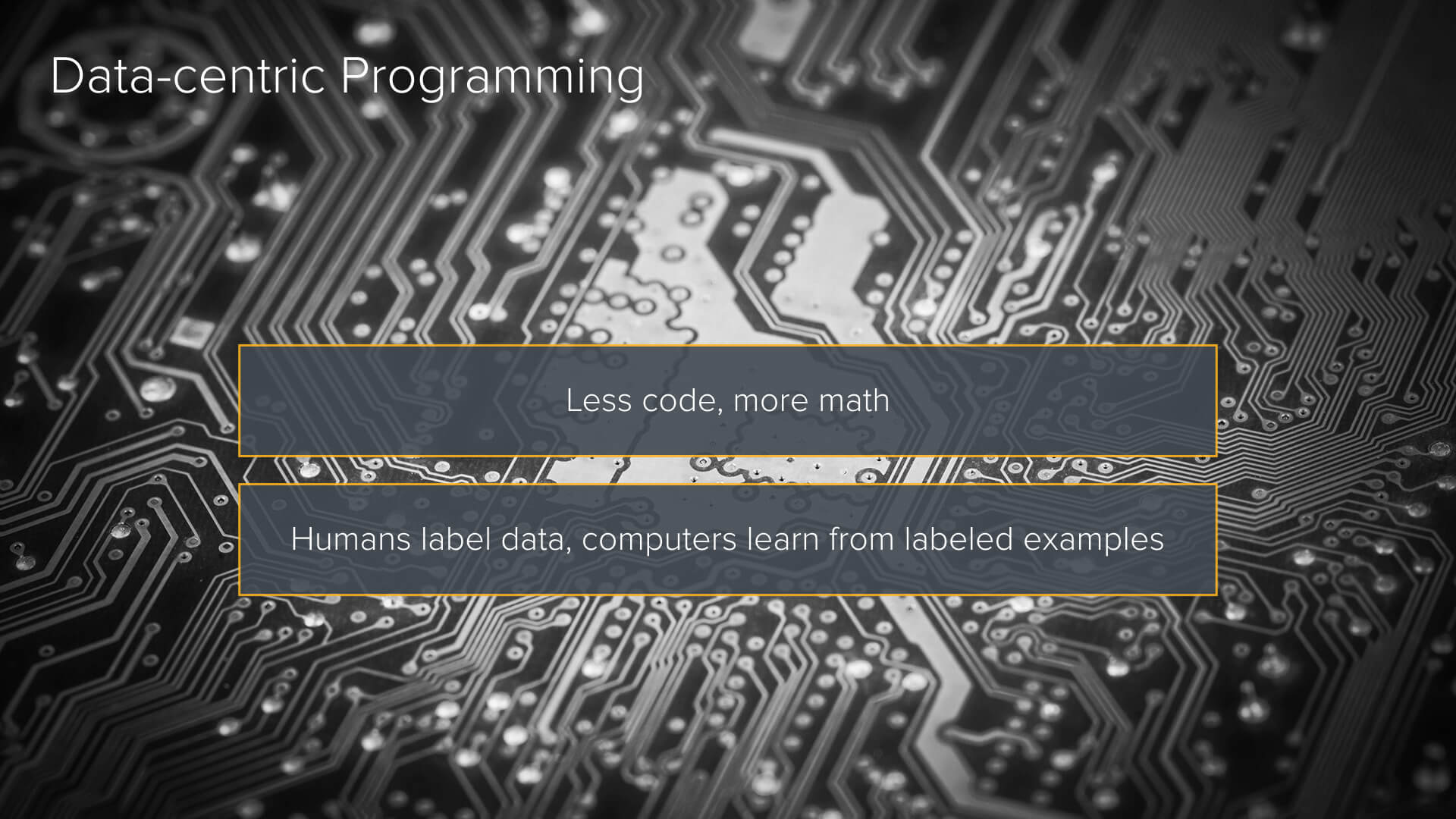

We are going to move to a world of data-centric programming. I believe the cloud disaggregates into this new model. Right now, we’re teaching everyone to code logic. However, we’re going to use data to actually solve problems, so the next generation of coders will have to be mathematicians and data analysts. The type of talent we need is going to change. I also believe that new programming languages will be developed specifically around data processing and data analytics, tailored to particular use cases.

Power Increases, Price Decreases

And finally, as the processing power at the edge increases, the price will decrease. We’ve seen this with every transformation of computing. Think about the mobile supply chain: 20 billion mobile phones have been sold and 5 billion are in use. That’s a great supply chain. The memory, CPUs, networking, and everything else in a mobile phone are so inexpensive because 20 billion phones have been produced.

Imagine when there will be trillions of these IoT devices. Think about the impact of that supply chain on commoditizing processing power and sensors. Just as some anecdotal evidence, the current iPhone 7 has 3.3 billion transistors. The original Pentium processor in 1993 had 3.1 million transistors. Think about the power increase between then and now; it’s only going to accelerate.
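
The two transistor counts imply a steady doubling rate. This sketch uses the figures quoted above and assumes the chips’ release years (1993 for the original Pentium, 2016 for the iPhone 7’s processor) to estimate how often the count doubled, in the spirit of Moore’s law. `doubling_time_years` is a hypothetical helper that assumes smooth exponential growth between the two points.

```python
import math


def doubling_time_years(start_count: float, end_count: float, years: float) -> float:
    """Average years per doubling, assuming steady exponential growth."""
    return years / math.log2(end_count / start_count)


PENTIUM_1993 = 3.1e6  # transistors, original Pentium (1993)
IPHONE7_2016 = 3.3e9  # transistors, iPhone 7 processor (2016, assumed release year)

if __name__ == "__main__":
    growth = IPHONE7_2016 / PENTIUM_1993
    years = 2016 - 1993
    print(f"{growth:,.0f}x more transistors")
    print(f"one doubling every {doubling_time_years(PENTIUM_1993, IPHONE7_2016, years):.1f} years")
```

That works out to roughly 1,065 times as many transistors, or about ten doublings in 23 years: one every 2.3 years or so.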

From a cost standpoint, sensors are already getting cheap enough that I can put them in running shoes. $100,000 sensors can’t go into running shoes; otherwise, you’re not going to buy the shoe. LIDAR is used for detection in self-driving cars; you’ve probably seen it on top of a Google Car. The first LIDAR for a Google Car cost $75,000. Now, LIDAR is under $500, and self-driving cars aren’t even out there yet. I bet you LIDAR goes to 50 cents in the not-too-distant future.

I can put sensors in literally everything. From shoes to glasses to your ears, wherever. So if power goes up by several orders of magnitude and price comes down, what happens? It all needs to be connected.

A Connected World

The entire world becomes the domain of IT. For those of you who think your job as a CIO or IT manager has gotten easier, this will be the opportunity of a lifetime. You move from five billion devices to a trillion devices that all need to be managed and coordinated together. Every industry will be subject to this. If I’m in the insurance industry and I run a fleet of drones to inspect houses, who’s going to manage them? There will be so many applications that combine what we think of as consumer-oriented applications with enterprise manageability.

As we saw with distributed computing in the late ’80s and early ’90s, and with mainframes and the cloud, there is a big disruption on the horizon. It’s going to impact networking, storage, computing, programming languages, security, and of course, management. I would encourage you to get ready for one of the biggest transformations ever to occur on the computing landscape. It’s happening right under our noses. What do you think now?