Editor’s note: It’s Summit Week at a16z, so each day we’re re-sharing some of our favorite talks from the last few years. a16z Summit is an annual, invite-only event bringing together thinkers, builders, and innovators to explore and examine the future of tech. Our 2019 theme is “The Future Is Inevitable,” and you can sign up here to get notified when we post this year’s talks.

In this talk from Summit 2018, Crypto Deal Partner Ali Yahya explains how cryptonetworks could lead to the beginning of a new era of trust and human cooperation. You can watch a video of Ali’s talk or read the transcript with slides below.

The Building Blocks of Trust

The rise of human civilization is a story of cooperation at an ever-increasing scale. Even today, it’s hard to conceive of pressing human problems that can’t be solved primarily through more cooperation: cooperation between people who might reside across political divides, speak different languages, and adhere to fundamentally different ideologies. Much of what we have built, including our greatest successes as a species, like the Internet or space travel, has been the product of truly global efforts. But how is cooperation at this scale even possible?

It is all driven by trust. Trust is an elusive concept. It is a word that we use a lot, but what does it actually mean? When you boil it down, it’s a certain kind of confidence that doing something or interacting with someone is likely to go well for you.

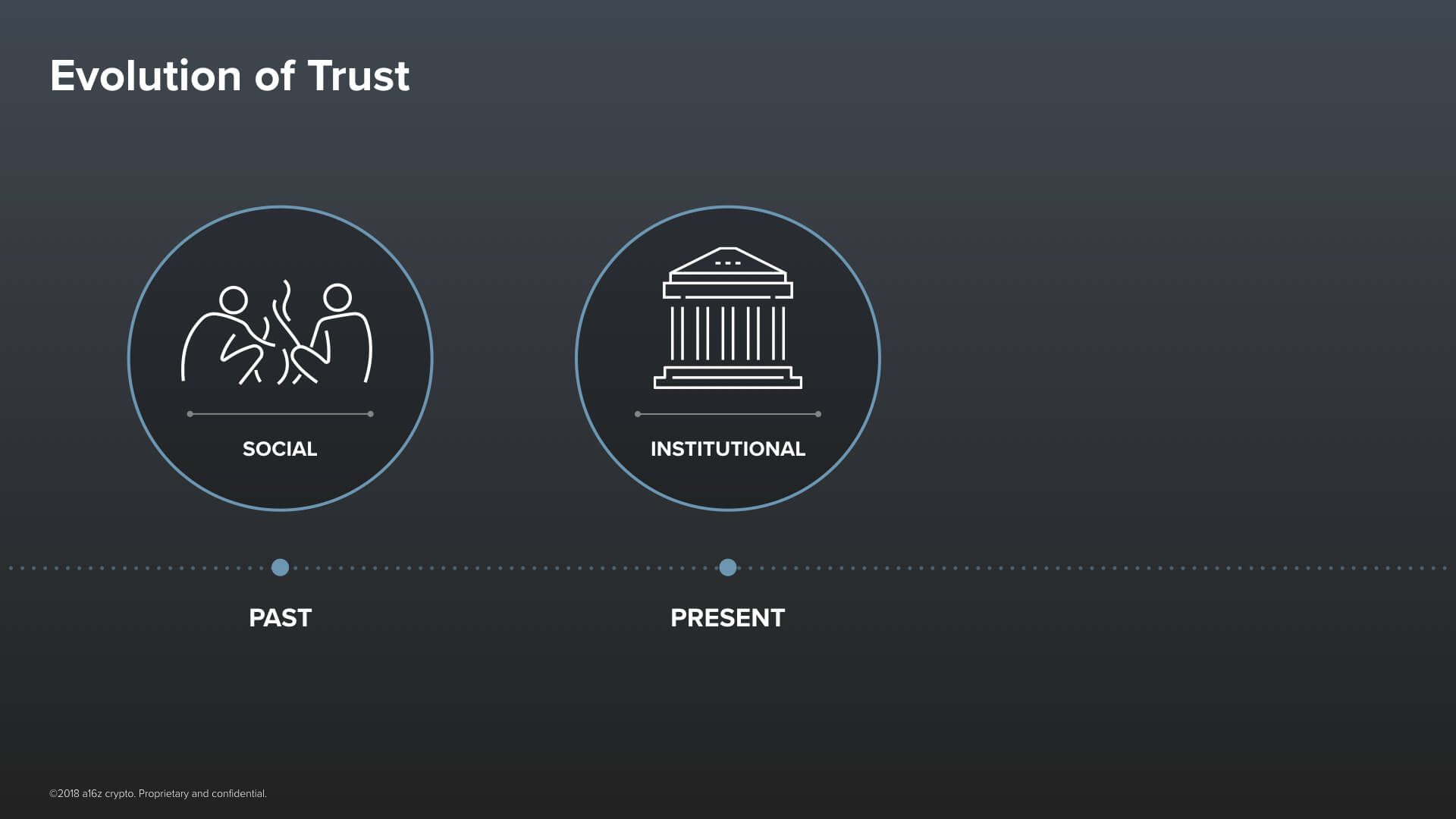

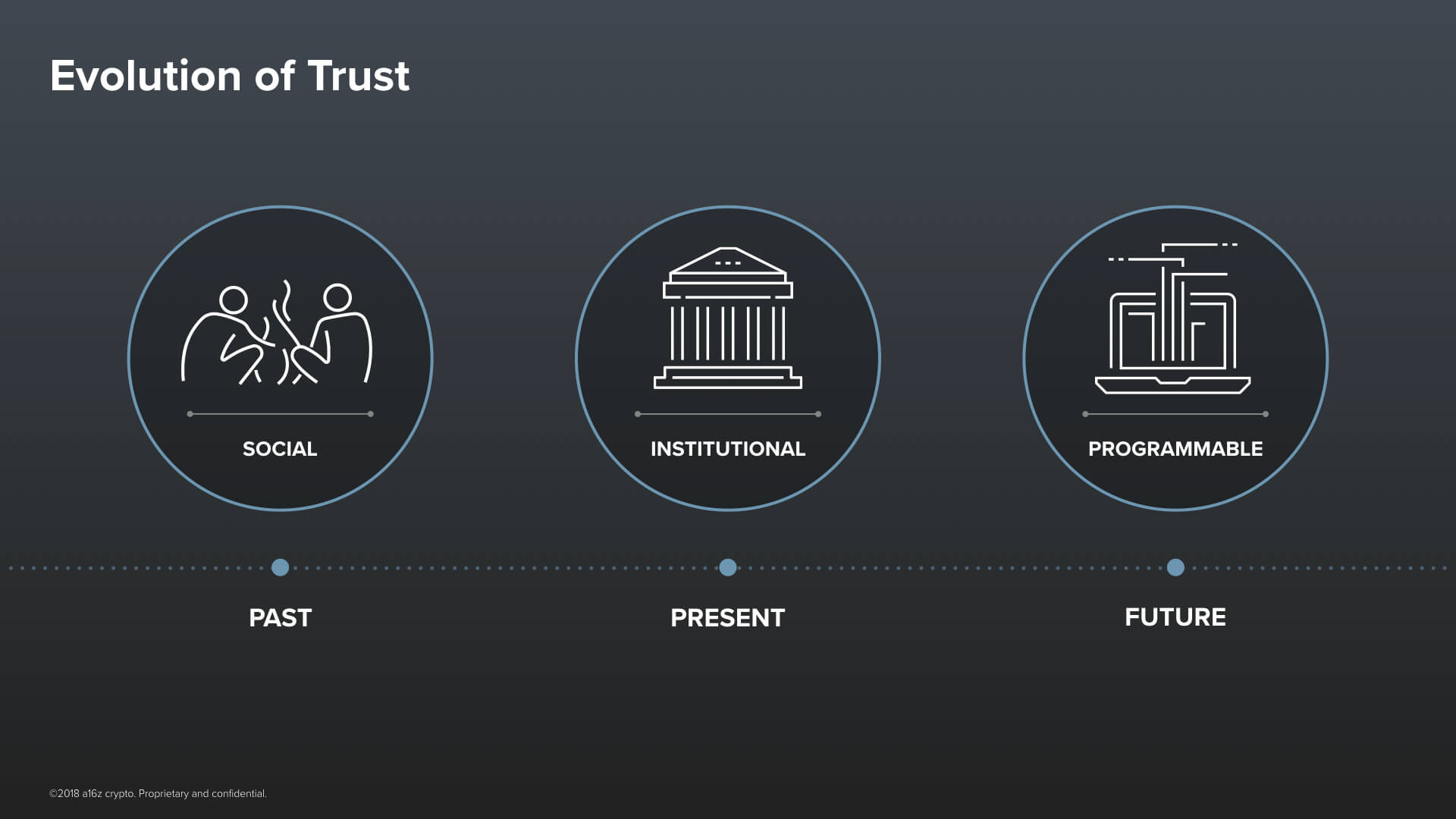

Throughout history, the kinds of things that we have relied on to build trust have changed dramatically. We started as nomadic hunter-gatherers, basing our trust on things like facial expressions and body language: the biological signals that we instinctively cue into when we interact with one another. This model of trust was Social.

Over the many millennia that followed, human institutions (churches, schools, local governments, and later nation-states and corporations) came along, and they have gone a long way toward helping us scale trust from the very local, where everyone knows one another intimately, to the nearly global, where everyone is a stranger. Let’s call this model of trust Institutional. It’s the predominant model of trust today.

But we are now reaching the limits of purely Institutional trust. Too often, the institutions that we place our trust in let us down. Think of the recent Wells Fargo scandal, the Equifax hack, the ongoing saga about user data at Facebook, or, for that matter, the Great Financial Crisis ten years ago. Say what you will about crypto, but I think you’ll agree that there is a lot of room for improvement.

Our technology, and especially our software, has been immeasurably successful at improving a lot of things. But it has only begun to scratch the surface of the world of trust. I will argue that since the creation of the Internet, we have begun to discover a new paradigm for trust.

It is a kind of trust that is programmable. It is enabled by software, and its guarantees are based on something more fundamental than the authority of a human institution. To explain what this means and how it works, I need to take a moment to break down trust further into its elemental building blocks.

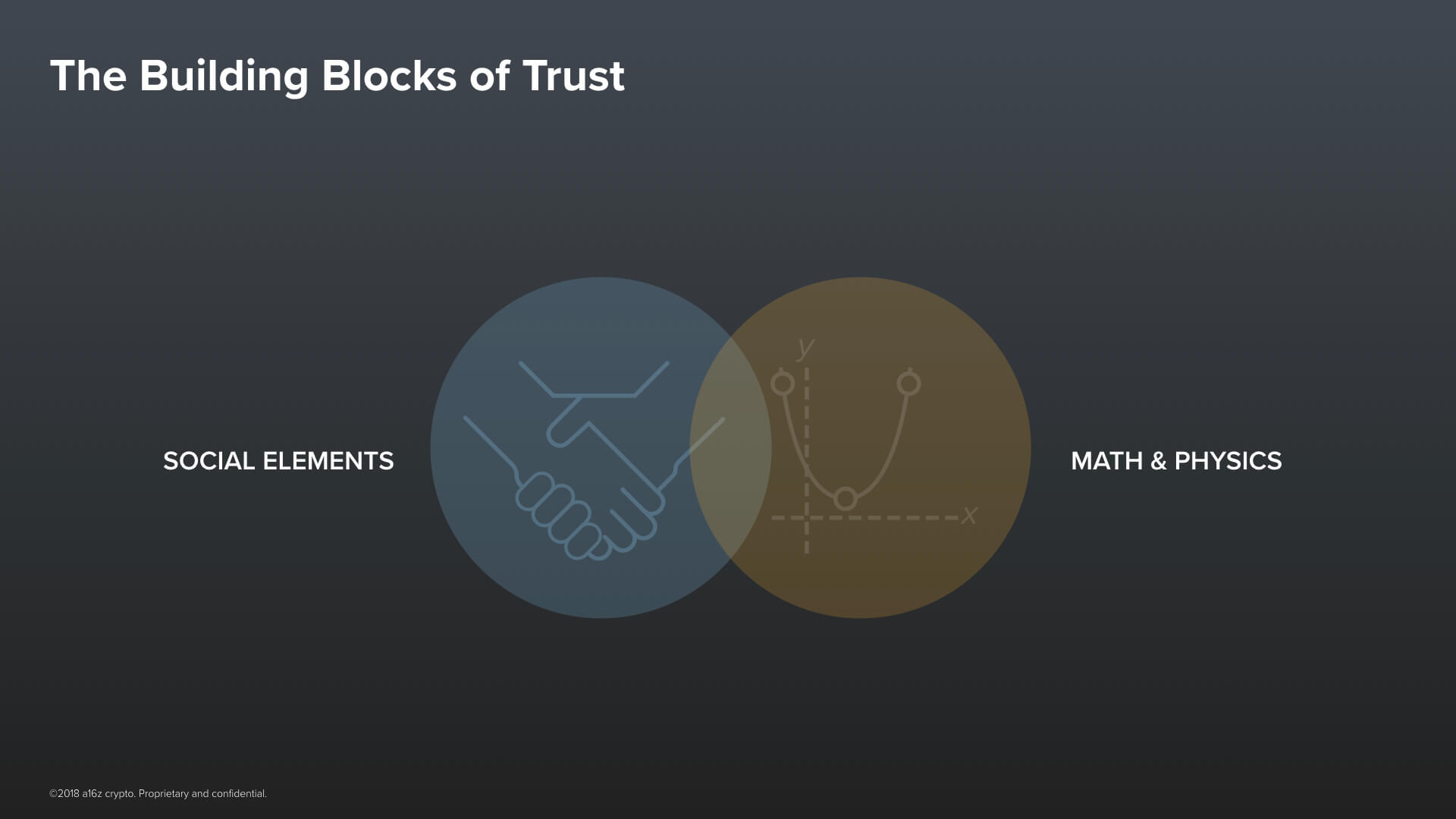

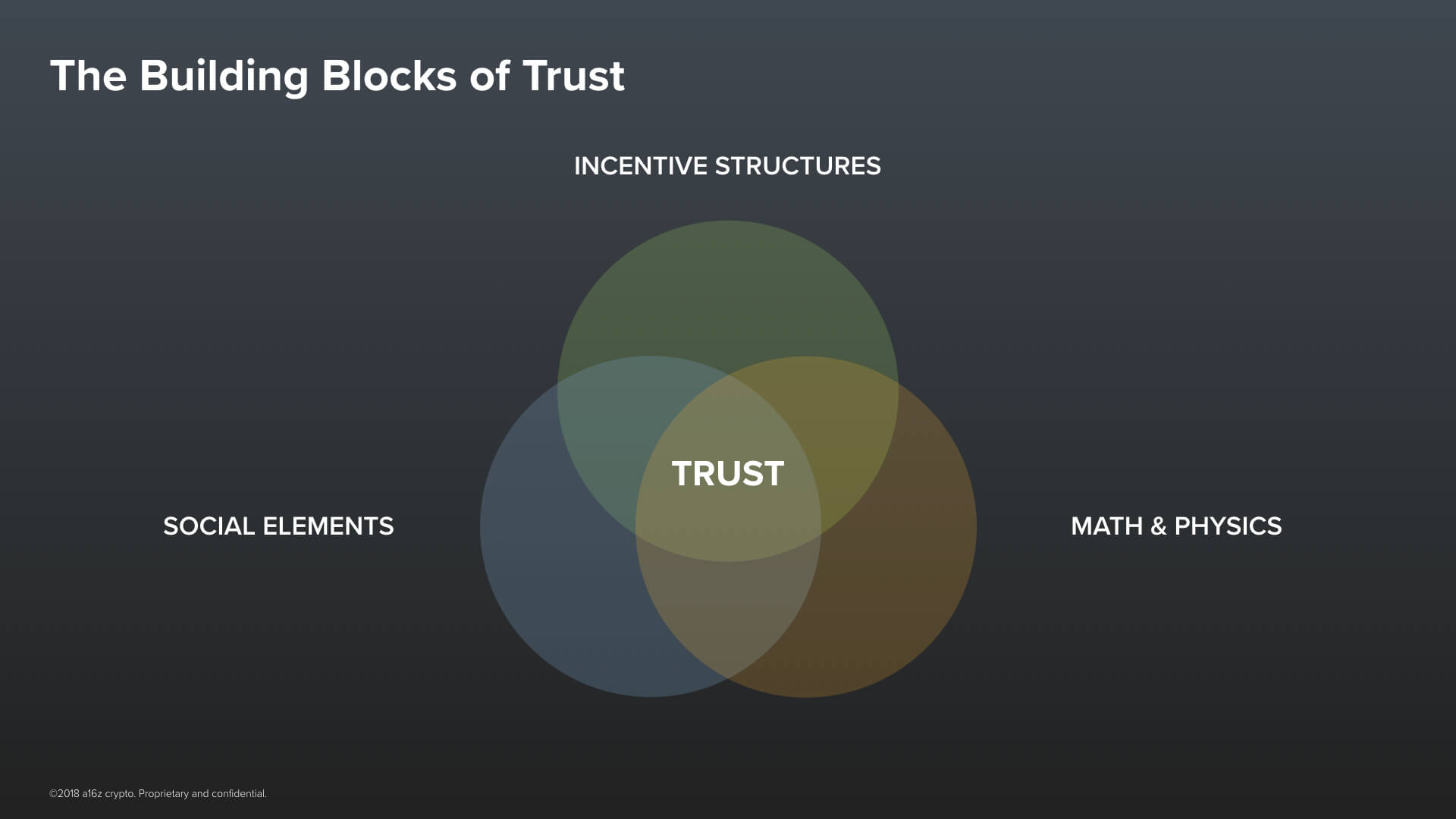

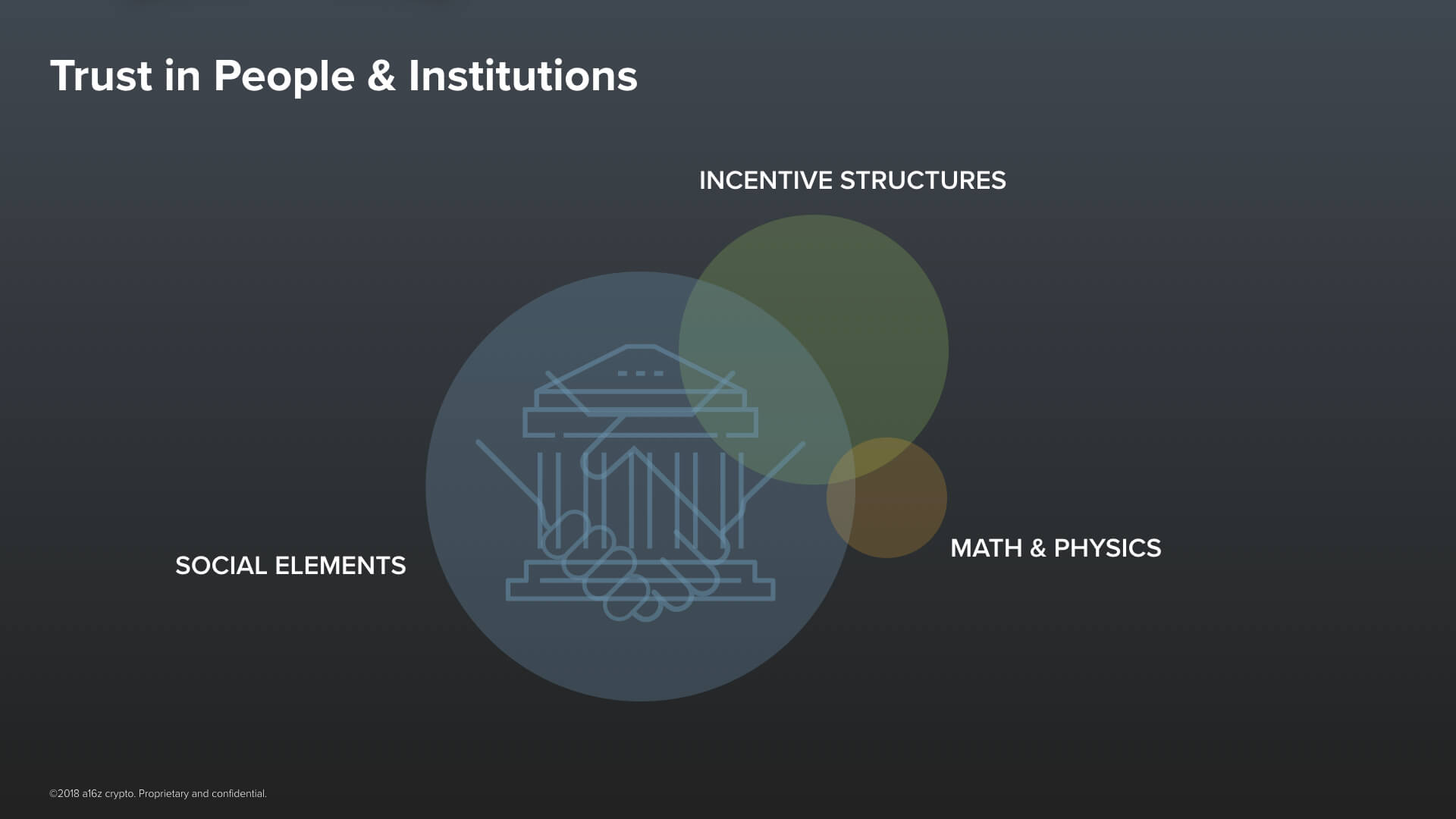

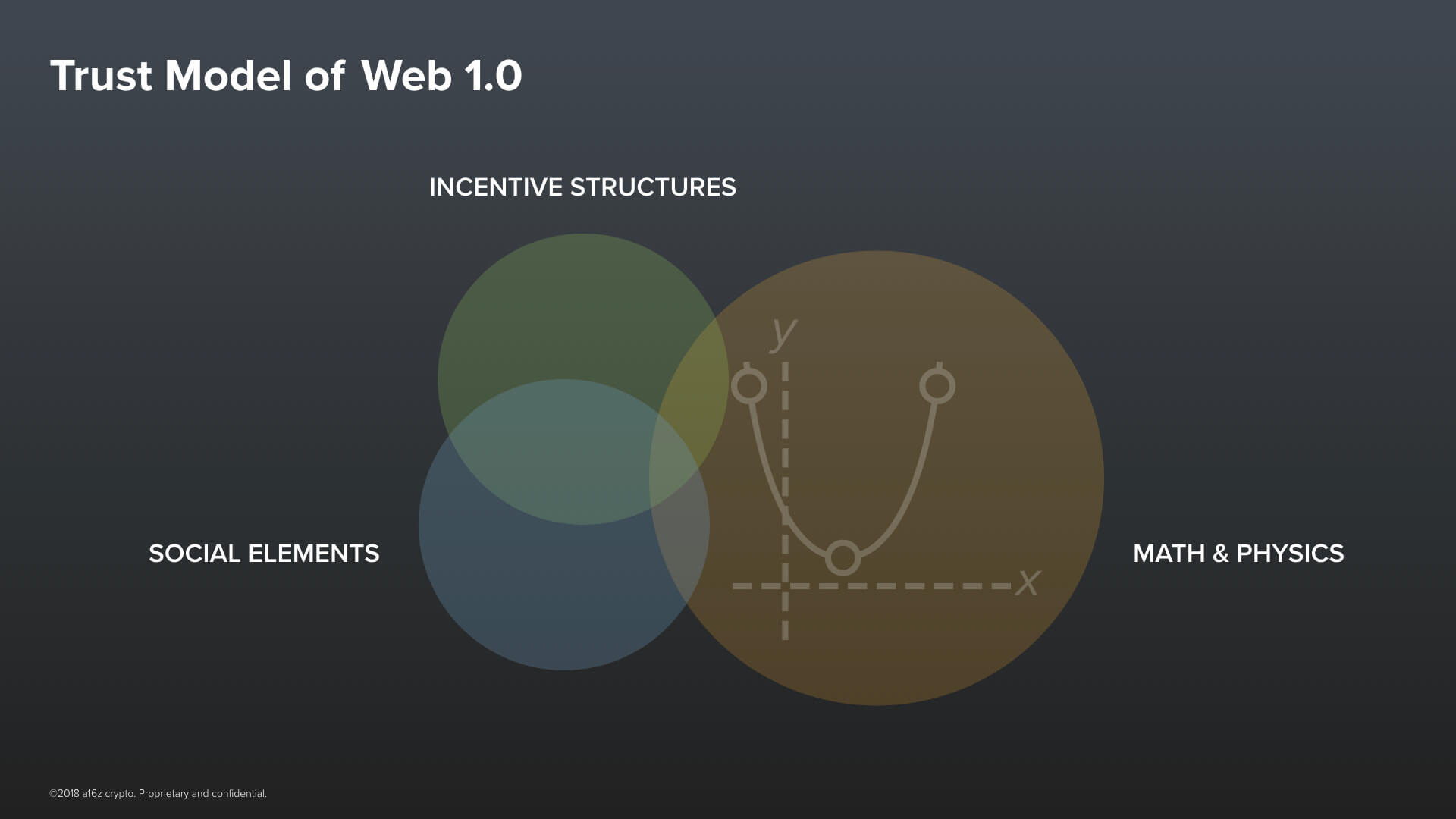

There are really two important components of trust, two elements that together form all of our trust in people (be they individuals or institutions) and our trust in technology.

The first is biological and instinctive –– the social elements of our interactions. We trust certain facial expressions, we trust perceived social status, we trust brand and reputation, and we trust observed track record. This kind of trust is of course hard to scale because it takes time and direct human exposure to build.

The second component comes from our knowledge of the physical world. It comes from our understanding of things like Math & Physics. It is the kind of trust that gives us confidence that doing something like landing on the moon is even possible.

These two components can in turn serve as building blocks for a third component of trust: Incentive Structures.

Now, some incentive structures are built primarily from things that are physical –– things in the orange circle. For example, we tend to trust that the physical difficulty of forging a U.S. dollar bill is enough to disincentivize most people from trying. It’s just not worth it. And of course, it also happens to be illegal, which itself is a disincentive. But that one looks more like this.

It is made up of people. Most of our incentive structures are made up of people––they are social constructs. Think of our legal contracts, or government regulation, or of insurance. Or think of the many agreements we make all the time implicitly with companies whose Terms of Service aren’t exactly written by J.K. Rowling.

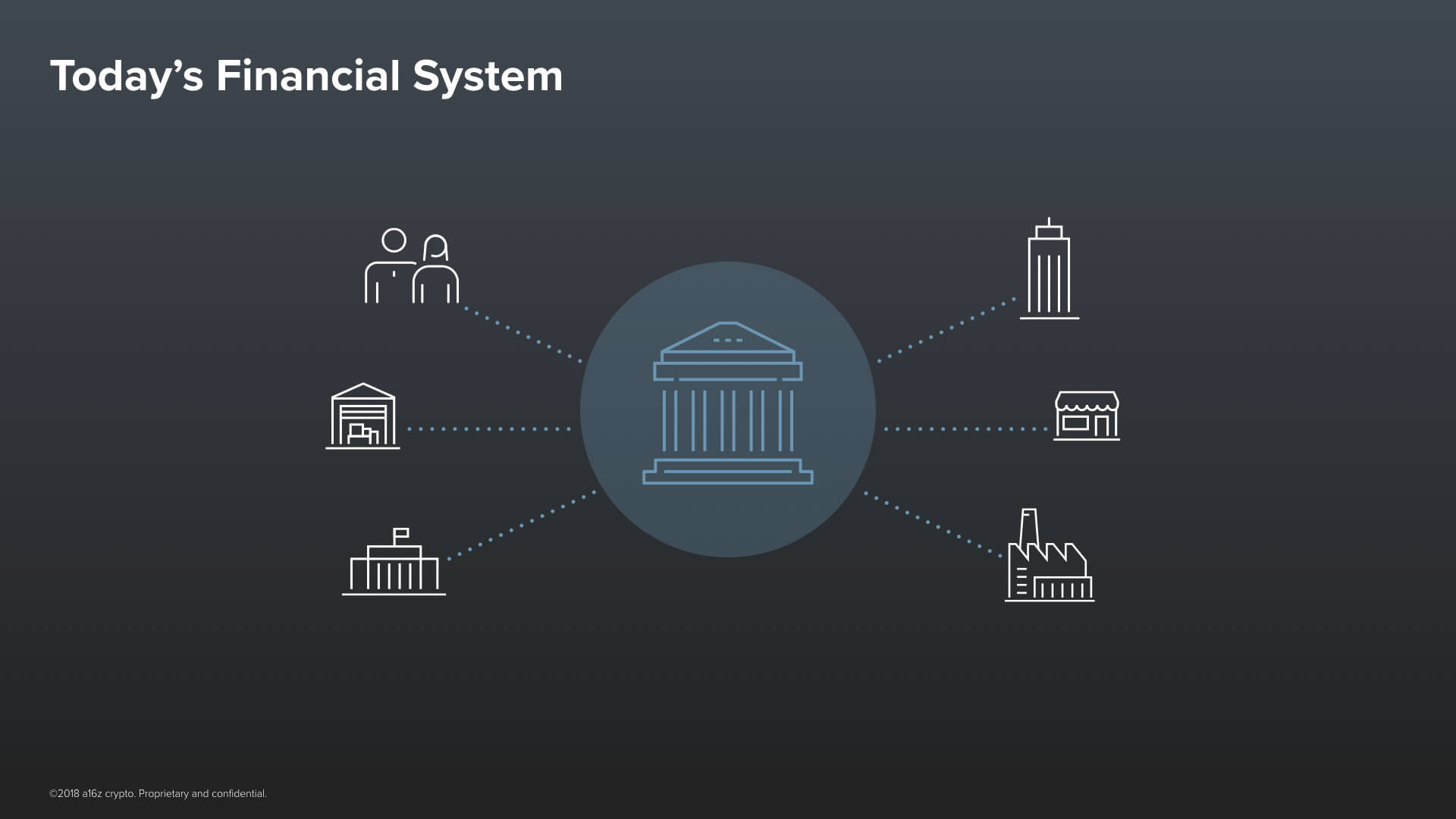

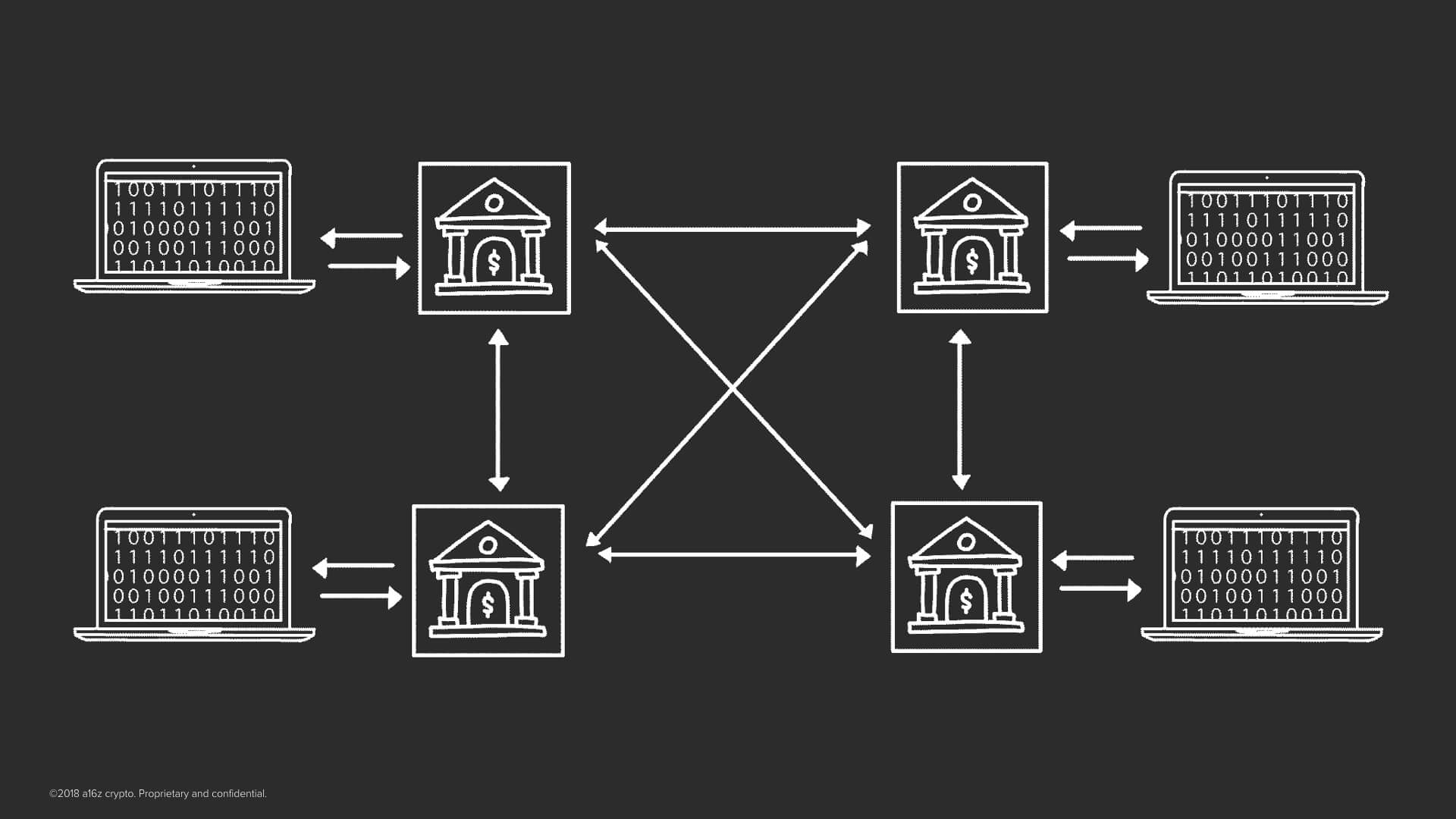

The problem today is that institutions have become the sole gatekeepers of trust. We are strongly dependent on them to cooperate. The best example of this is our financial system.

Financial Institutions as Today’s Gatekeepers of Trust

In our current financial system, the integrity of any transaction depends on the trustworthiness of the human institution––a bank––that sits in the middle of it.

And the only reasons we trust banks are that they have a track record, they have a reputation, and there are legal incentive structures around them. Take credit cards, for example. They’re not very secure. But the reason you are willing to trust them is that, if someone else charges something to your card, you can just dispute it after the fact. An entire team of trusted humans will then readily get involved and make sure you get your money back! Our trust in our financial infrastructure today is based not on technology, but on this human-driven possibility for recourse.

This institutional model of trust works in the first world, but it simply cannot scale to 8 billion people. As Katie Haun mentioned in her talk about why she got interested in crypto, two billion people in the world today are completely unbanked, credit card fraud cost $190B last year, and fewer than 5% of people in the world hold any investments in stocks or government bonds. Now, in contrast to this limited system, I’d like to give you an example of how trust can work differently.

An Open Source Internet and Trust

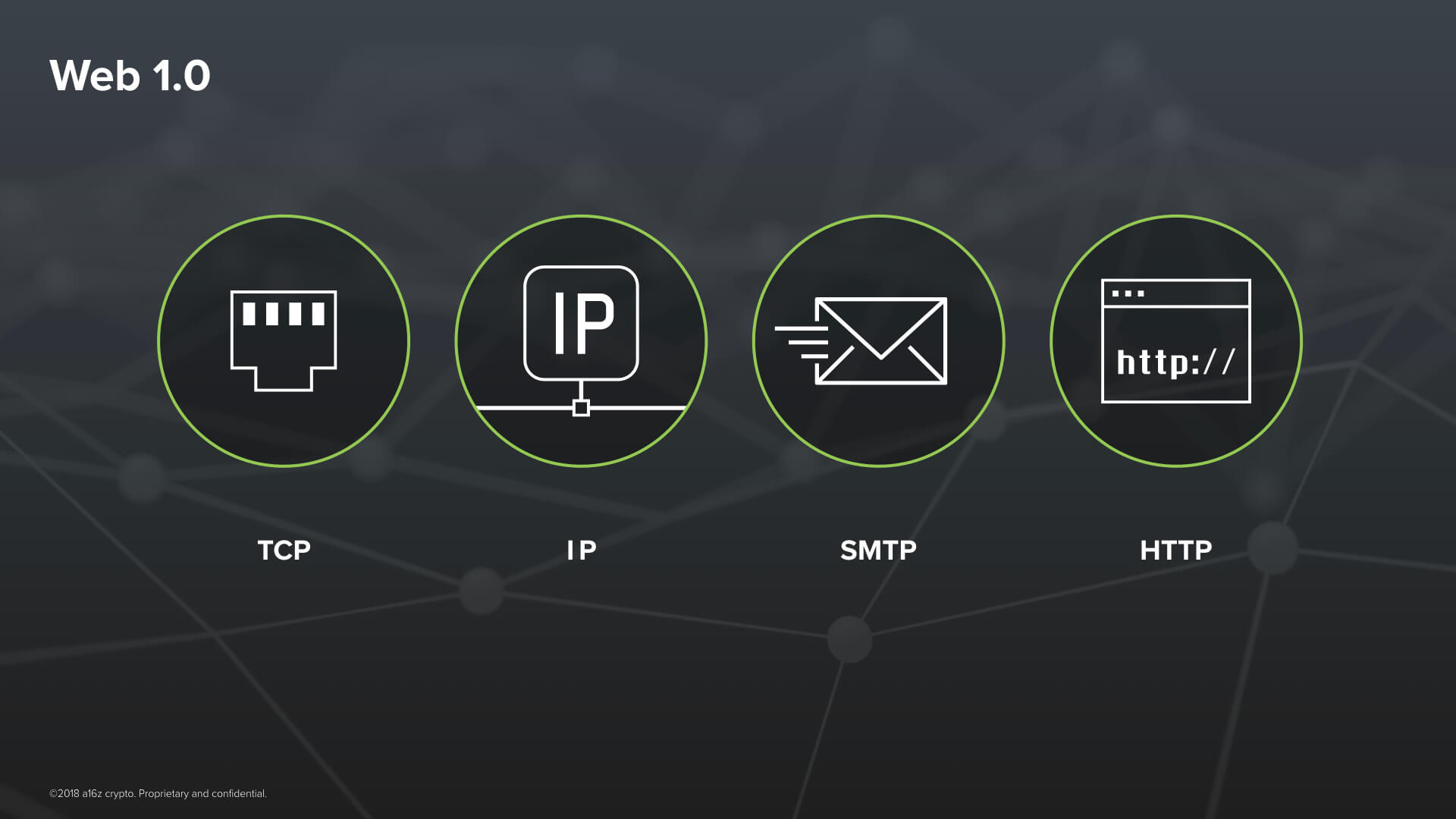

The Internet is one of our first global systems of cooperation where trust is not primarily based on institutions. Or at least it wasn’t –– in the beginning.

The early protocols of the Internet, let’s call them the protocols of Web 1.0, were a beautiful thing. TCP, IP, SMTP and HTTP –– they were designed in the 70s and 80s with a spirit of openness and inclusiveness. They were open standards. This means that anyone anywhere has the ability, on equal footing, to build on top of them without anyone’s permission. Case in point, hundreds of open source implementations exist. The code for these protocols inside of your phone right now, whether it’s an iPhone or an Android phone, is directly based on open source code.

Think about this: It is a great feat of human trust and cooperation that today there exists a single, global Internet rather than numerous, disjoint networks that fracture along our many divides. Hundreds of countries and thousands of companies around the world, many with conflicting incentives, have magically converged on running exactly the same protocols, down to the last bit, to be able to connect with one another.

In a perennially polarized world, how can we possibly explain this? It was a direct consequence of open source. Because the core protocols of the Internet were open source, nobody could take unilateral control over them. Their emergence and support were bottom-up and mostly neutral. They became the stable, level playing field on top of which the ecosystem of the Internet could be built.

Their emergence led to a golden period for innovation. Entrepreneurs and their investors could trust that the rules of the game would remain neutral and fair. But, of course, open source is hard to monetize, so the business model of these startups depended on building proprietary, closed protocols on top of the Internet’s open ones. These were the protocols of Web 2.0.

A handful of these startups have since become some of the most valuable companies in human history. You may have heard of some of them. And, of course, thanks to these companies, billions of people have gotten access to great new technologies, mainly for free. That has been a truly phenomenal thing, and lately these companies haven’t gotten enough credit for it.

But there is an important caveat: the tech giants of Web 2.0 have become our new trusted intermediaries and gatekeepers on the Internet. For most of what we do online today, like searching the web, connecting with people, and sharing media, we are now forced to place significantly more trust in proprietary and opaque code written by these companies.

As a result, these companies now command a great deal of power over their users and third-party developers. By virtue of their control of all data, they control each and every interaction between users on the platform, each user’s ability to seamlessly exit and switch to other platforms, content creators’ potential for discovery and distribution, all flows of capital, and all relationships between third-party developers and their users.

They also control the rules of the game. At any time, without warning, and almost entirely on their terms, these companies can (and do) change just about anything about what is allowed on their platforms––often disenfranchising entire companies in the process.

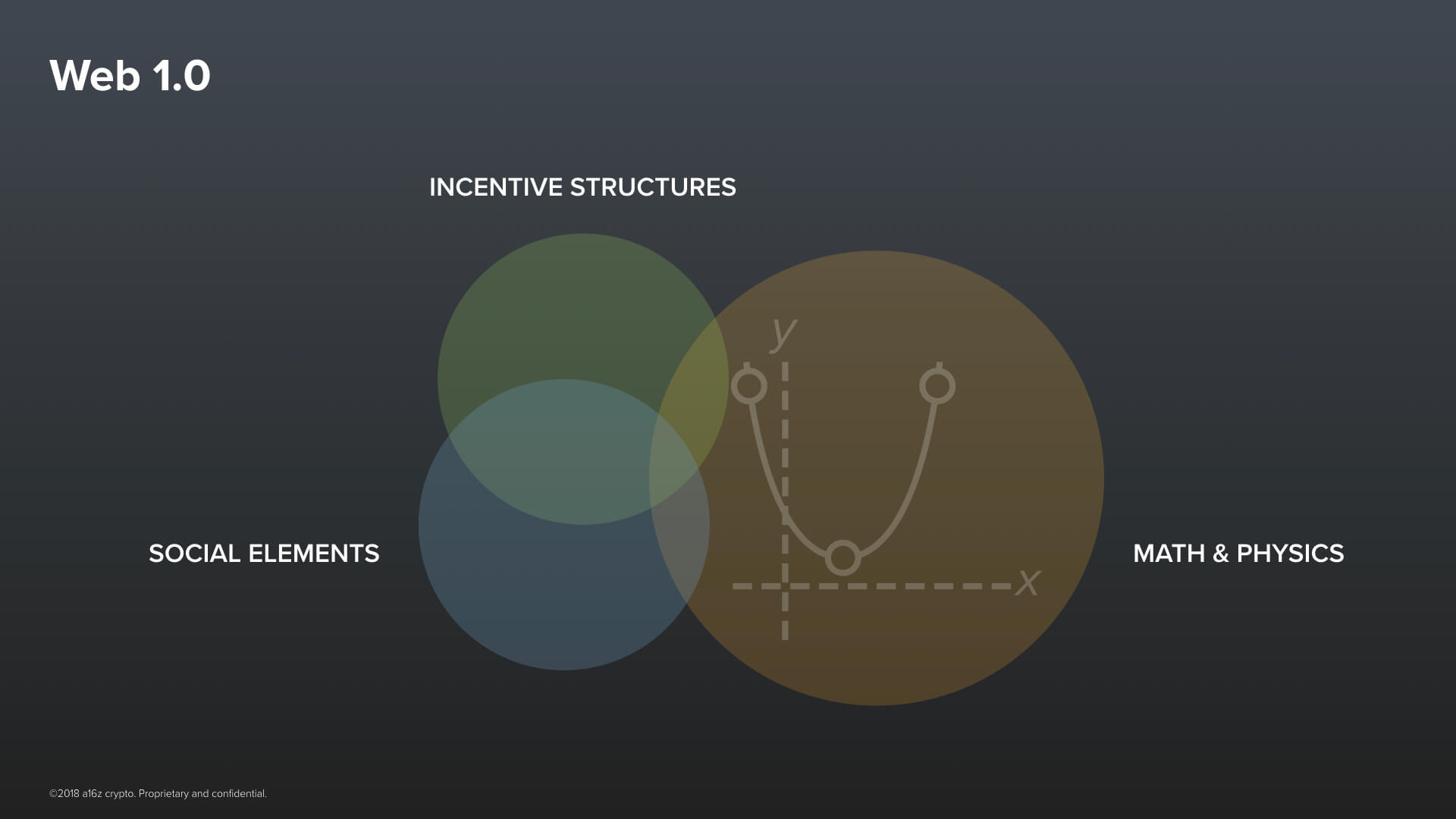

We’ve all seen the headlines: Facebook, Zynga, Twitter, Google, Yelp, and the list goes on. The behemoths of Web 2.0 have re-introduced themselves into the trust equation. We went from this trust model …

… To this one.

The story of the Internet so far serves both as an inspiration, showing just how much value creation is possible when a software platform is open and neutral, and as a cautionary tale about what can happen when too much power and trust is placed in a small number of for-profit human institutions.

The trust model of Web 2.0 is not like the trust model of Web 1.0. It is inconceivable that a company the size of Google could be built on top of Google, in the same way that Google was built on top of TCP/IP and HTTP. So how do we get back to a trust model that doesn’t depend on gatekeepers? In other words, how do we go from “don’t be evil” to “can’t be evil”?

How Cryptonetworks Build Trust

Alright, the moment we’ve all been waiting for. Let’s talk about crypto. This entire presentation can be summarized with a single big idea: Cryptonetworks.

Cryptonetworks are a technology with the potential to catalyze trust, and therefore cooperation, at unprecedented scale. They will help bring about a shift away from institutional trust and toward trust that is programmable. Let’s talk about the technology. Cryptonetworks are built on top of the open protocols of Web 1.0 and the building blocks of cryptography.

A simple way to think of cryptography is as the branch of Math and Computer Science that studies how to make data trustworthy. Now, when you hear “cryptography” you might be tempted to think about encryption, about keeping things secret. But, by far the most important application of cryptography today has nothing to do with secrecy. Instead, it’s about making data tamper-proof. Digital signatures, for example, are a breakthrough of modern cryptography that allows me to send you a message that you can be certain––mathematically––really came from me.
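To make the idea of a digital signature concrete, here is a minimal sketch of a Lamport one-time signature, a hash-based scheme that needs nothing beyond SHA-256. It is a stand-in chosen for brevity, not what cryptonetworks typically use (Bitcoin, for instance, uses ECDSA over the secp256k1 curve). The function names and the message are hypothetical, and each Lamport key pair must only ever sign a single message.

```python
import hashlib
import secrets
from typing import List, Tuple

def H(data: bytes) -> bytes:
    """SHA-256, used both to hash messages and to commit to secret values."""
    return hashlib.sha256(data).digest()

def digest_bits(message: bytes) -> List[int]:
    """The 256 bits of SHA-256(message), most significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in H(message) for i in range(8)]

def keygen() -> Tuple[List[Tuple[bytes, bytes]], List[Tuple[bytes, bytes]]]:
    # Private key: 256 pairs of random secrets, one pair per digest bit.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    # Public key: the hash of every secret. Publishing it does not reveal sk.
    pk = [(H(s0), H(s1)) for s0, s1 in sk]
    return sk, pk

def sign(sk, message: bytes) -> List[bytes]:
    # For each bit of the message digest, reveal the secret matching that bit.
    return [sk[i][bit] for i, bit in enumerate(digest_bits(message))]

def verify(pk, message: bytes, signature: List[bytes]) -> bool:
    # Anyone holding pk checks that each revealed secret hashes to the right entry.
    bits = digest_bits(message)
    if len(signature) != len(bits):
        return False
    return all(H(s) == pk[i][bit] for i, (s, bit) in enumerate(zip(signature, bits)))

sk, pk = keygen()
sig = sign(sk, b"Ali pays Bob 5")
print(verify(pk, b"Ali pays Bob 5", sig))    # True
print(verify(pk, b"Ali pays Bob 500", sig))  # False: the altered message selects different secrets
```

Only the holder of the private key could have produced a signature that checks out against the public key, and changing even one character of the message invalidates it.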

Digital signatures plus the open protocols of Web 1.0 are a powerful combination, and they’ve gone a long way toward making today’s Internet possible. But they are not a complete set of Lego building blocks; on their own, they cannot serve as a true software platform. Some protocols are missing. They don’t help with storing data, for example, or with computing on top of that data.

The tech giants of Web 2.0 built fantastic businesses by stepping in and providing closed-source versions of those missing protocols.

But what we need is a set of protocols that are open, like the protocols of Web 1.0, and that together make for a shared repository of user data (a database) that is neutral and owned collectively by the users themselves. This means that no one human institution would be in control of it.

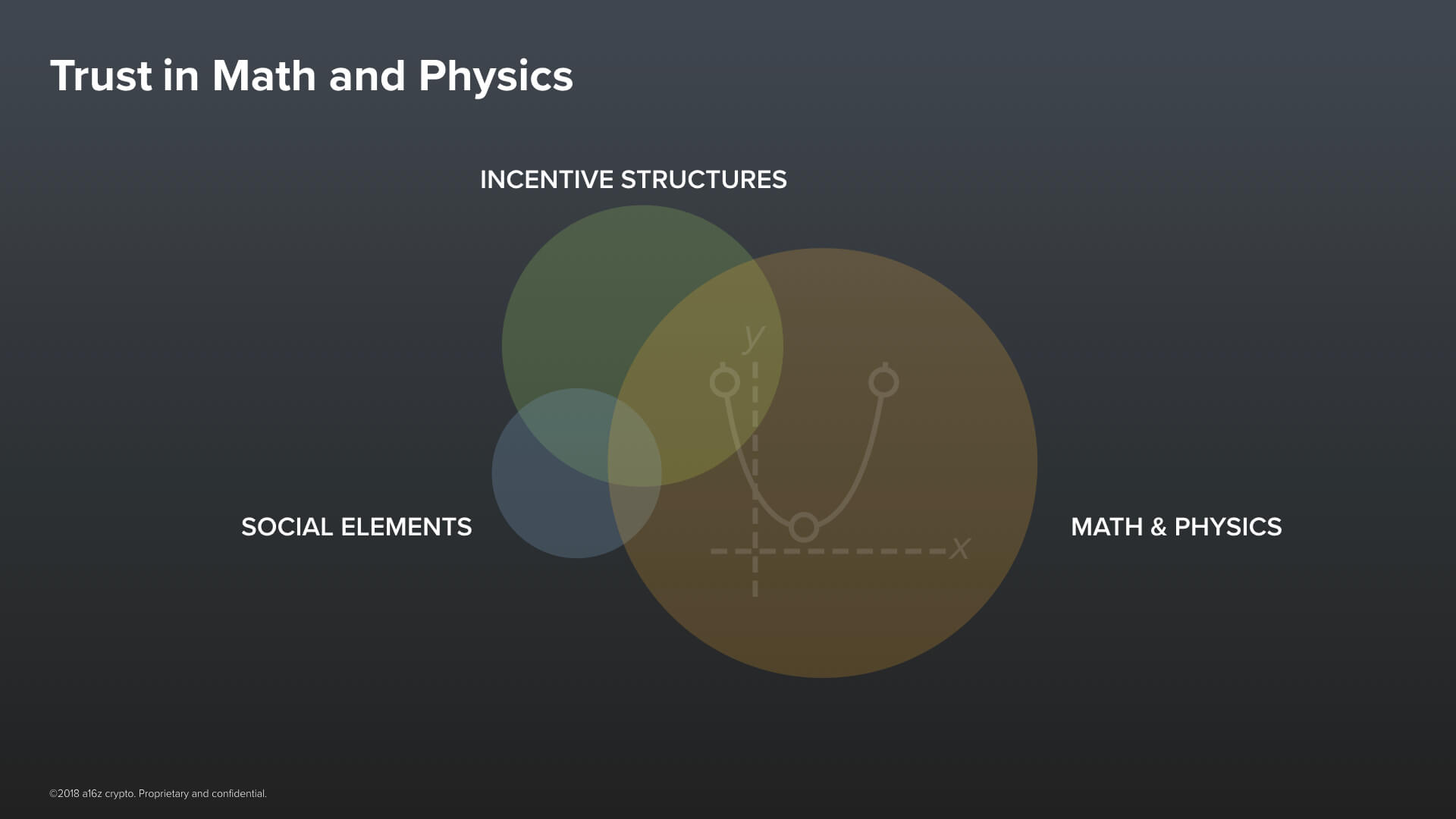

Now, of course, building such a database is hard because someone has to do the work of keeping it honest. Traditionally, we have relied on intermediaries like the tech giants, as well as banks and governments to keep our databases secure for us. What we want instead is for trust in the database to be based on Math and Physics. In other words, it must be self-policing.

Almost exactly ten years ago, the Bitcoin paper was published.

The idea behind Bitcoin is to leverage the primitives of cryptography, like digital signatures, together with the open protocols of Web 1.0 and a very clever incentive structure, to build a collectively owned and neutral database of Bitcoin transactions, that is, of payments.

Now, what is truly novel about this database is that its security emerges bottom-up from its users: users who can be anyone, anywhere, and can participate without anyone’s permission. In other words, control over the database is literally decentralized; there are no gatekeepers. The challenge, of course, is that many of those participants will no doubt be dishonest and would like to game the system for a profit if they could. The genius of Bitcoin is an incentive structure that makes it self-policing. It is valuable to have at least an intuition for how this works.

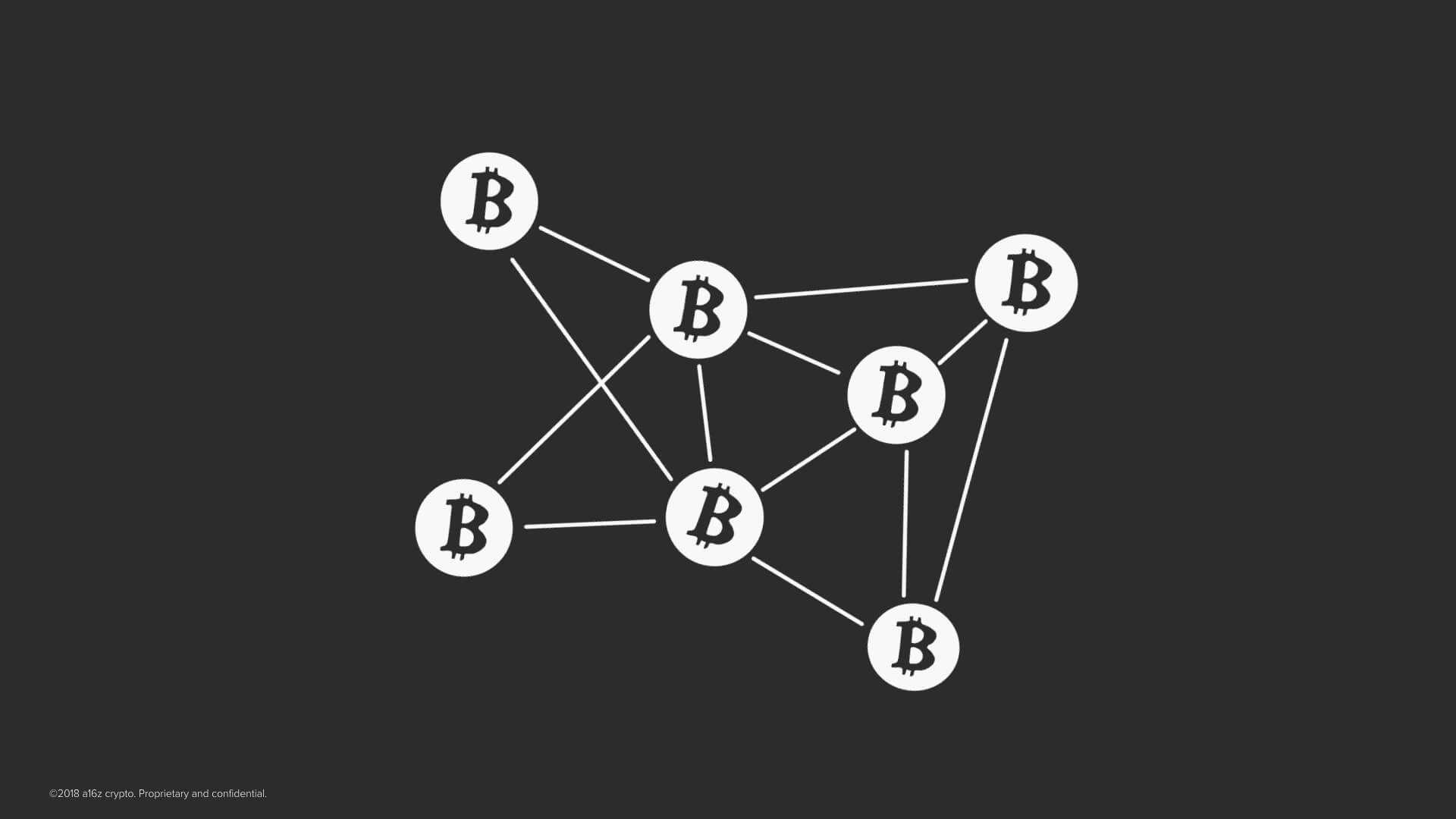

Instead of keeping a single copy of the database inside of some trusted data center owned by Google, each participant in the network keeps their own copy. But there’s a problem: how can we make sure that all the copies of the database stay consistent, and that nobody can insert a fraudulent transaction?

The answer is that participants in the network known as miners monitor the network and vote for the sets of transactions that they believe are valid. The twist is that they vote with their computational power. Consider Alice, a miner. The more computational power she offers to the network, the more secure she helps make it. As a result, the protocol gives her more voting power and a bigger reward.

Crucially, the reward that is paid out to Alice is newly minted Bitcoin. So in a single stroke, Bitcoin comes alive as a kind of money and, at the same time, as the very source of funding that bootstraps its own security.
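The link between computing power, votes, and rewards can be simulated in a few lines. This is a toy model, assuming each block is won by a single miner with probability proportional to that miner's share of total hash power. The miner names and hash-power figures are hypothetical; the 12.5 BTC block reward matches the subsidy in effect in 2018, and transaction fees are ignored.

```python
import random
from typing import Dict

BLOCK_REWARD = 12.5  # newly minted BTC per block at the time of the talk; fees ignored

def simulate_mining(hash_power: Dict[str, float], blocks: int, seed: int = 0) -> Dict[str, float]:
    """Toy model: each block is won by one miner, chosen with probability
    proportional to that miner's share of the network's total hash power."""
    rng = random.Random(seed)
    miners = list(hash_power)
    weights = [hash_power[m] for m in miners]
    rewards = {m: 0.0 for m in miners}
    for _ in range(blocks):
        winner = rng.choices(miners, weights=weights, k=1)[0]
        rewards[winner] += BLOCK_REWARD  # the winning block mints new coins for its miner
    return rewards

rewards = simulate_mining({"Alice": 60, "Bob": 30, "Carol": 10}, blocks=10_000)
total = sum(rewards.values())
for miner, amount in rewards.items():
    print(f"{miner}: {amount / total:.1%} of rewards")
```

Over many blocks, each miner's share of the newly minted coins tracks their share of the network's computing power, which is exactly the incentive that pays for the network's security.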

This idea is known as Proof-of-Work. The insight is that you have to contribute to the security of the database in order to get to vote, and to get paid. The result is an elegant incentive structure that encourages participants in the network to keep one another in check. And so, even though they do not trust one another, they can come to trust the database that they are collectively helping secure.
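Here is a minimal sketch of Proof-of-Work, assuming a simplified block made of arbitrary bytes: finding a valid nonce takes many hash attempts, while checking one takes a single hash. Bitcoin itself applies SHA-256 twice to an 80-byte block header and retargets its difficulty every 2,016 blocks; the function names, block contents, and 16-bit difficulty below are hypothetical simplifications.

```python
import hashlib

def block_hash(data: bytes, nonce: int) -> int:
    """Hash the block contents plus a nonce; read the digest as a 256-bit integer."""
    digest = hashlib.sha256(data + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big")

def target_for(difficulty_bits: int) -> int:
    # A valid hash must fall below this target, i.e. start with `difficulty_bits` zero bits.
    return 1 << (256 - difficulty_bits)

def mine(data: bytes, difficulty_bits: int) -> int:
    """Expensive: brute-force nonces until the hash falls below the target.
    On average this takes about 2**difficulty_bits attempts."""
    nonce = 0
    while block_hash(data, nonce) >= target_for(difficulty_bits):
        nonce += 1
    return nonce

def verify(data: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Cheap: anyone can check the work with a single hash."""
    return block_hash(data, nonce) < target_for(difficulty_bits)

block = b"prev_hash=00ab;txs=Alice->Bob:1"
nonce = mine(block, difficulty_bits=16)
print(nonce, verify(block, nonce, 16))
```

The asymmetry is the point: work is expensive to produce and cheap for everyone else to check, so a vote backed by work cannot be cheaply faked.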

Bitcoin is the simplest possible cryptonetwork. It attempts to address the problem of trustless money––money whose legitimacy and backing depends not on human institutions, but on mathematical guarantees. And all of this is great. But someone else came along and asked the question: Could we do something that is more interesting than just money with this idea?

Innovations in the Crypto Space

In 2015, Ethereum launched. Its provocative insight is that there is no reason for a database like Bitcoin’s to be just for money, and no reason for updates to that database to be just transactions. Those updates could be whole computer programs. Ethereum is therefore more than just a decentralized database.

It is a decentralized, collectively owned, world computer.

Programs that run on top of it, once deployed, are in turn also collectively owned. No human institution controls them. In a sense, they are independent; once written, they obediently execute themselves as written, subject to nobody’s authority. But, why would anyone want programs that are independent like this?

As you may be able to guess, their usefulness has to do with trust. Programs of this kind are open source, so everyone can see what they do. Their correct execution on the world computer is mathematically guaranteed, in the same way that transactions are guaranteed to be valid in Bitcoin. So these programs can be used as building blocks for enforceable agreements, or “smart contracts,” between complete strangers, without the need for mediating authorities.
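The idea of an independent, replicated program can be sketched with a hypothetical escrow contract. This is plain Python standing in for a smart contract (on Ethereum, contracts run as EVM bytecode, typically compiled from languages like Solidity), and it assumes the network has already agreed on the order of transactions. Every node replays the same transactions against its own copy of the contract, so every node reaches the same state and rejects the same invalid transaction.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class Escrow:
    """Hypothetical escrow between strangers: the buyer's payment is held by the
    program and released to the seller only when the buyer confirms delivery."""
    buyer: str
    seller: str
    price: int
    held: int = 0
    state: str = "AWAITING_PAYMENT"
    balances: Dict[str, int] = field(default_factory=dict)

    def apply(self, sender: str, action: str, amount: int = 0) -> None:
        if (action == "deposit" and sender == self.buyer
                and self.state == "AWAITING_PAYMENT" and amount == self.price):
            self.held = amount
            self.state = "AWAITING_DELIVERY"
        elif (action == "confirm_delivery" and sender == self.buyer
                and self.state == "AWAITING_DELIVERY"):
            self.balances[self.seller] = self.balances.get(self.seller, 0) + self.held
            self.held = 0
            self.state = "COMPLETE"
        else:
            raise ValueError(f"rejected: {sender} cannot {action} in state {self.state}")

def run_node(transactions: List[Tuple[str, str, int]]):
    """Each node replays the same ordered transactions against its own copy."""
    contract = Escrow(buyer="alice", seller="bob", price=100)
    rejected = 0
    for sender, action, amount in transactions:
        try:
            contract.apply(sender, action, amount)
        except ValueError:
            rejected += 1  # every node rejects the same invalid transactions
    return contract.state, dict(contract.balances), rejected

txs = [
    ("alice", "deposit", 100),
    ("bob", "confirm_delivery", 0),   # the seller cannot release the funds himself
    ("alice", "confirm_delivery", 0),
]
results = [run_node(txs) for _ in range(3)]  # three independent nodes
print(results[0])  # ('COMPLETE', {'bob': 100}, 1)
```

No node needs to trust the others: each one can recompute the outcome and see that the rules were followed exactly as written.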

For the first time, we have the ability to program trust.

Let’s work through some real world examples of what you can do.

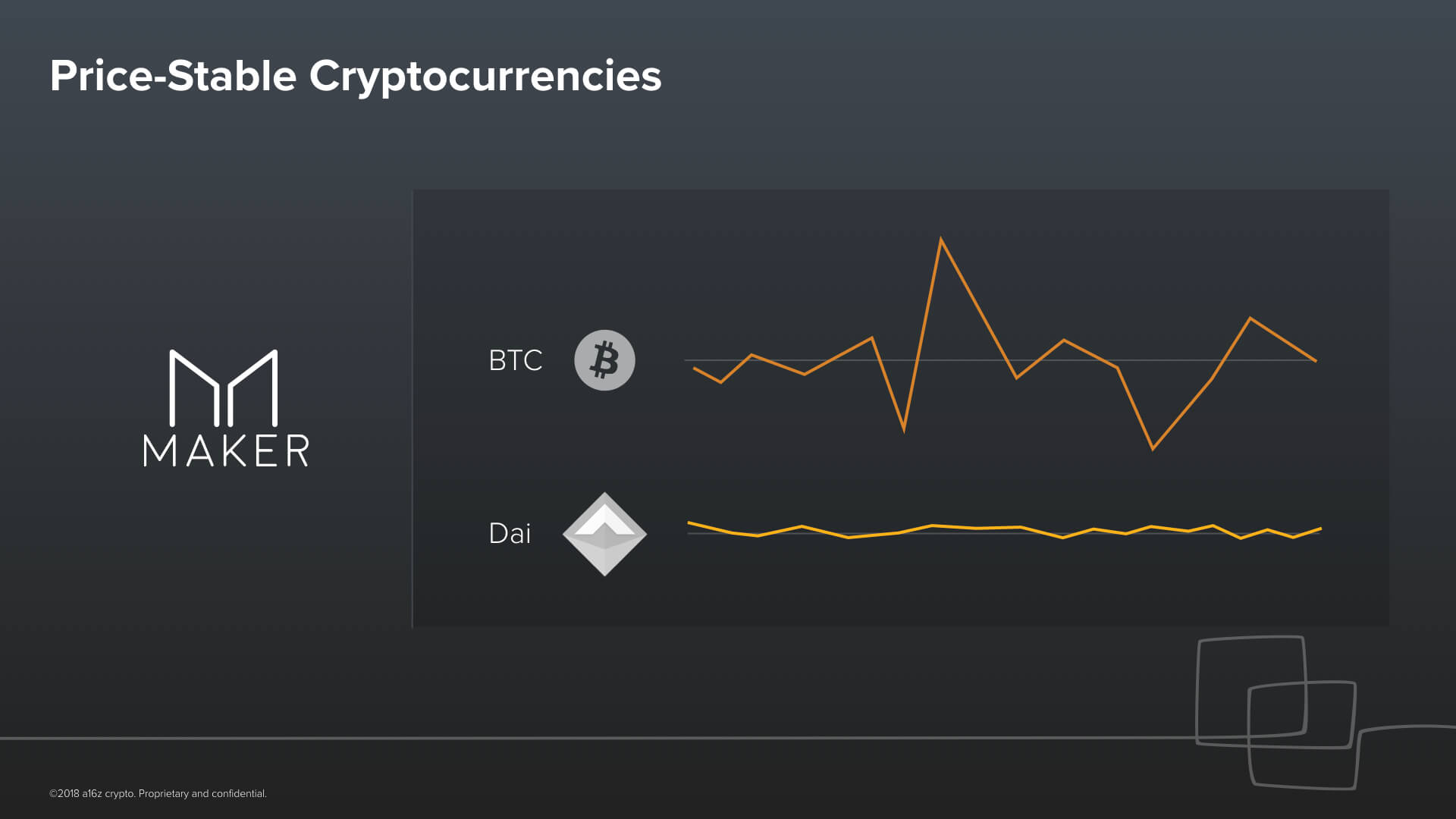

An ingenious application of this idea is Dai, a cryptocurrency whose price is stable with respect to the dollar: one Dai is designed to always be worth $1. This is what Maker is building. The beauty of Dai as a cryptocurrency is that it has all of the advantages offered by Bitcoin, but it is also price stable. Because of that stability, it has a real chance to become a digital alternative to fiat currency for the two billion people who are unbanked and the hundreds of millions of people in countries with hyperinflation. Maker launched Dai almost a year ago. It has been in circulation ever since, and it has been successful at maintaining its peg. Now, you might think this whole idea should be impossible. After all, the price of something is always decided by the market, right? But the idea behind Dai is clever.

How so? Dai is simply a program running on the Ethereum world computer. It mints new Dai tokens and lends them to anyone, say Bob, who is willing to post enough collateral in the form of other crypto-assets. These newly minted Dai tokens can then be traded as if they were dollars. This is sound because they are fully backed by the collateral held by the program. But, of course, there is an obvious problem: what happens if the value of Bob’s collateral starts to go down? This is where the magic of software kicks in. As soon as Bob’s loan becomes too risky, the program can immediately take possession of the collateral, sell it for the Dai that is owed, and use that Dai to close Bob’s loan. This takes the Dai out of circulation and ensures that there is always enough collateral held by the program to back all of the Dai that is outstanding.
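The loan-and-liquidation logic above can be sketched as follows. This is a simplified model, not Maker's actual contracts: it assumes ETH is the only collateral, a hypothetical 150% liquidation ratio, a trusted price input, and that collateral can be sold instantly at that price. The real system includes mechanisms this sketch omits.

```python
from dataclasses import dataclass
from typing import Dict

LIQUIDATION_RATIO = 1.5  # assumed: collateral must be worth at least 150% of the debt

@dataclass
class Vault:
    collateral_eth: float
    debt_dai: float = 0.0

class ToyDai:
    """Simplified model of collateral-backed Dai (hypothetical names)."""

    def __init__(self) -> None:
        self.vaults: Dict[str, Vault] = {}
        self.total_supply = 0.0  # all Dai in circulation

    def is_safe(self, owner: str, eth_price: float) -> bool:
        v = self.vaults[owner]
        return v.collateral_eth * eth_price >= v.debt_dai * LIQUIDATION_RATIO

    def borrow(self, owner: str, collateral_eth: float, dai: float, eth_price: float) -> None:
        """Lock collateral and mint new Dai as a loan, but only if the loan is safe."""
        v = self.vaults.setdefault(owner, Vault(0.0))
        v.collateral_eth += collateral_eth
        v.debt_dai += dai
        if not self.is_safe(owner, eth_price):
            v.collateral_eth -= collateral_eth  # undo the attempted loan
            v.debt_dai -= dai
            raise ValueError("not enough collateral for this loan")
        self.total_supply += dai

    def liquidate_if_unsafe(self, owner: str, eth_price: float) -> bool:
        """Sell just enough collateral to repay the debt, then retire that Dai."""
        if self.is_safe(owner, eth_price):
            return False
        v = self.vaults[owner]
        eth_sold = min(v.collateral_eth, v.debt_dai / eth_price)
        dai_recovered = eth_sold * eth_price  # assumed: sold at the input price
        v.collateral_eth -= eth_sold
        v.debt_dai -= dai_recovered
        self.total_supply -= dai_recovered    # repaid Dai leaves circulation
        return True

system = ToyDai()
system.borrow("bob", collateral_eth=10, dai=1500, eth_price=300)  # $3000 backs 1500 Dai
print(system.liquidate_if_unsafe("bob", eth_price=250))  # False: 2500 >= 2250
print(system.liquidate_if_unsafe("bob", eth_price=200))  # True: 2000 < 2250
print(system.vaults["bob"], system.total_supply)
```

After the price drop, 7.5 ETH is sold to repay Bob's 1,500 Dai, the remaining 2.5 ETH stays his, and every Dai still in circulation remains backed.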

Now take a second to think about this. Dai is not just a price-stable cryptocurrency. It is also a lending platform where loans are originated and margin-called entirely in software without trusted human intermediaries –– no banks. Now I don’t know about you, but I think this is really cool. The glimpses that things like Dai offer of the future are really what get me out of bed and into work every day.

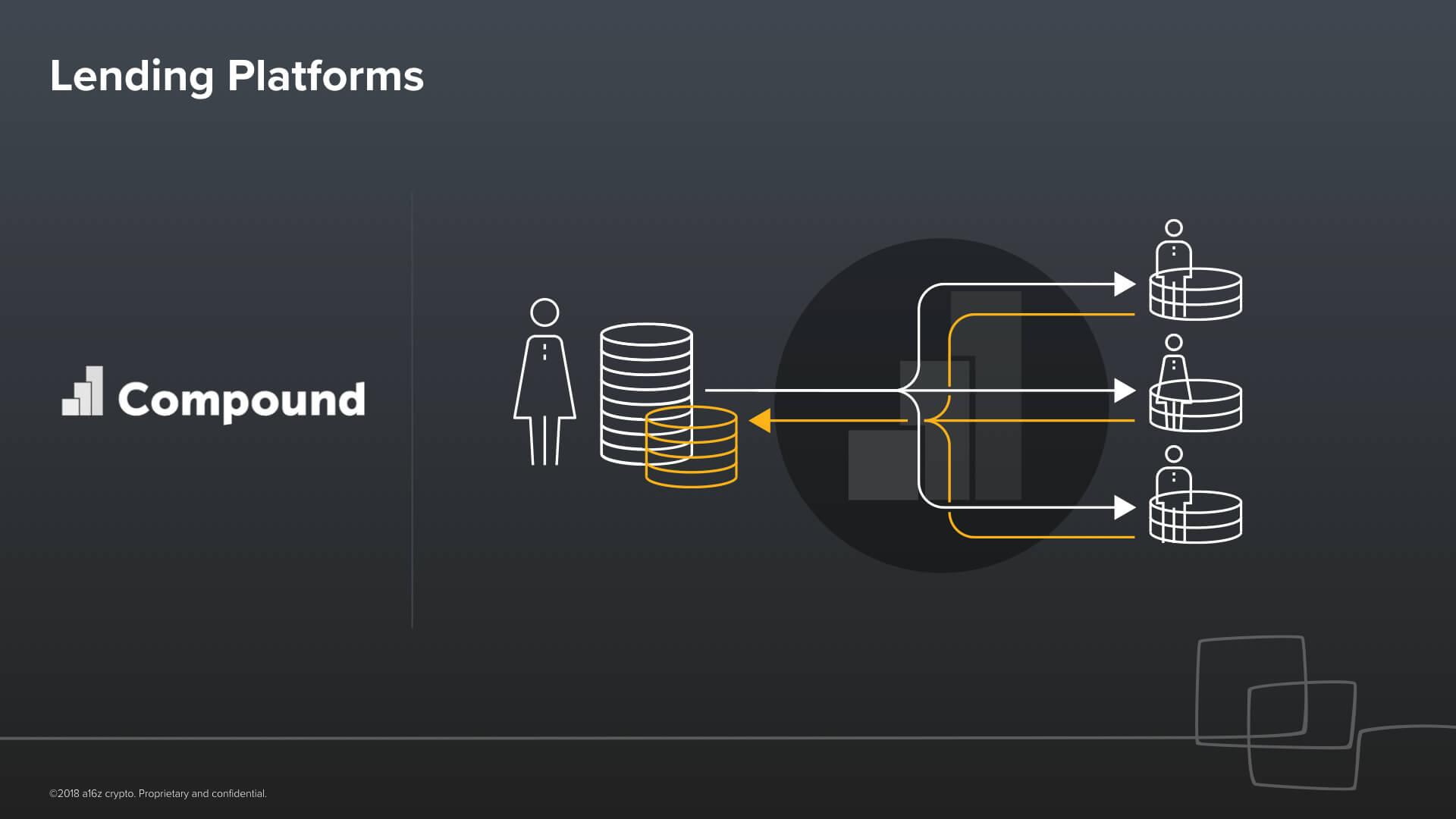

Let’s take the idea of lending further. Compound is a company that has built a program, running on the Ethereum world computer, that acts as a kind of money market. Anyone can lend crypto-assets to the program, and the program will in turn lend those crypto-assets out to others. The interest rate is set algorithmically, and again, no trust is placed in human intermediaries.
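One common way to set an interest rate algorithmically is to tie it to utilization, the fraction of the pool's assets that are currently borrowed. The linear formula and the parameters below (a 2% base rate, a 20% slope, a 10% reserve factor) are illustrative assumptions, not Compound's actual configuration.

```python
def utilization(cash: float, borrows: float) -> float:
    """Fraction of the pool's assets that are currently lent out."""
    total = cash + borrows
    return borrows / total if total > 0 else 0.0

def borrow_rate(u: float, base: float = 0.02, slope: float = 0.20) -> float:
    """Assumed linear model: borrowing gets more expensive as the pool is drained."""
    return base + slope * u

def supply_rate(u: float, reserve_factor: float = 0.10) -> float:
    """Interest paid by borrowers, spread over all supplied assets,
    minus an assumed share held back as reserves."""
    return borrow_rate(u) * u * (1 - reserve_factor)

for cash, borrows in [(900, 100), (600, 400), (200, 800)]:
    u = utilization(cash, borrows)
    print(f"utilization {u:.0%}: borrow {borrow_rate(u):.2%}, supply {supply_rate(u):.2%}")
```

As borrowing demand drains the pool, rates rise, which attracts new lenders and discourages new borrowers, with no loan officer involved.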

Now think of what you could do if you were to combine Compound with Dai, as a stable currency to lend and borrow, and add a friendly user interface. This starts to look a lot like a consumer bank, but one that’s built entirely in software, end to end.

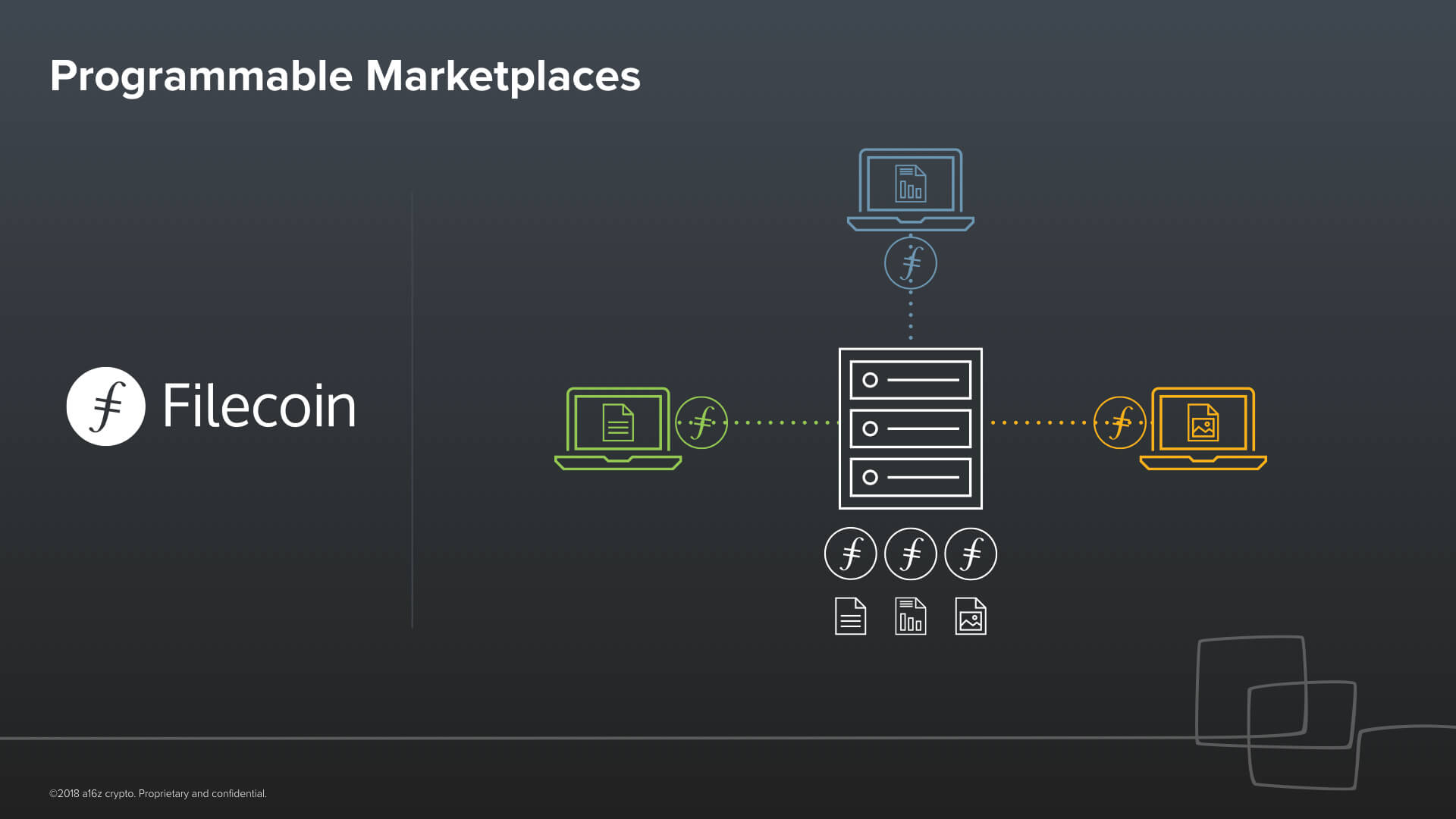

Let’s go beyond just finance. What else can we do with programmable trust? One example is a programmable marketplace like Filecoin. I can rent you the spare storage space on my laptop, and you can pay me for it in Filecoin. Crucially, you and I could be complete strangers living on opposite sides of the world. You can trust that I will store your files, and I can trust that I will get paid for storing them, because of the mathematical rules and incentives of Filecoin. And again, this works without intermediaries, just the protocol.
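Filecoin's actual storage proofs (Proof-of-Replication and Proof-of-Spacetime) are considerably more involved. As a simpler stand-in, here is a Merkle-tree challenge-response: the client keeps only a 32-byte root, then periodically challenges the provider to return a randomly chosen chunk along with the sibling hashes needed to recompute that root. All names and file contents are hypothetical.

```python
import hashlib
import secrets
from typing import List

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def build_levels(chunks: List[bytes]) -> List[List[bytes]]:
    """Merkle tree stored level by level, leaves first; an odd last node is paired with itself."""
    level = [h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels

def prove(levels: List[List[bytes]], index: int) -> List[bytes]:
    """Provider's answer to a challenge: the sibling hashes from leaf to root."""
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        path.append(level[sibling] if sibling < len(level) else level[index])
        index //= 2
    return path

def verify(root: bytes, index: int, chunk: bytes, path: List[bytes]) -> bool:
    """Client's check: recompute the root from the returned chunk and sibling hashes.
    The challenged index, not the provider, decides left/right at each level."""
    node = h(chunk)
    for sibling_hash in path:
        node = h(sibling_hash + node) if index % 2 else h(node + sibling_hash)
        index //= 2
    return node == root

file_chunks = [f"chunk {i} of your file".encode() for i in range(5)]
root = build_levels(file_chunks)[-1][0]          # the client keeps only this root

provider_levels = build_levels(file_chunks)      # the provider stores the data
challenge = secrets.randbelow(len(file_chunks))  # client picks a random chunk
answer = (file_chunks[challenge], prove(provider_levels, challenge))
print(verify(root, challenge, *answer))  # True
```

Because each challenge is unpredictable, a provider that discarded part of the file will eventually fail a check, and a marketplace can attach economic consequences to that failure.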

This is a CryptoKitty. I happen to own this CryptoKitty, and I also happen to be too embarrassed to admit to you how much I paid for it. So this CryptoKitty is mine; it’s like some sort of digital, collectible Beanie Baby or Tamagotchi (if either of those words means anything to you).

The difference here is that this is something purely digital that the world recognizes I own. Think about it: you can use the technology to track how much Bitcoin everyone owns. But you can also use it to track who owns all the adorable cats that play starring roles in Pokémon-like online games. I mean, why not? No matter what happens, even if the company that made CryptoKitties were to go away, this kitty is still mine. My ownership of it is burned into the Ethereum blockchain. I can take it with me into games made by other people. And that is something new. This is just the beginning of a new paradigm for web-based games.
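At its core, on-chain ownership is a shared ledger that maps each item to its owner and lets only the current owner transfer it. The toy registry below is loosely modeled on the `ownerOf` and `transferFrom` functions of Ethereum's ERC-721 token standard; its class and function names are hypothetical, and a real chain authenticates the sender with a digital signature rather than trusting a string.

```python
from typing import Dict

class CollectibleRegistry:
    """Toy shared ledger of unique digital items (hypothetical names)."""

    def __init__(self) -> None:
        self._owners: Dict[int, str] = {}

    def mint(self, token_id: int, owner: str) -> None:
        # Each token id can be created exactly once.
        if token_id in self._owners:
            raise ValueError(f"token {token_id} already exists")
        self._owners[token_id] = owner

    def owner_of(self, token_id: int) -> str:
        return self._owners[token_id]

    def transfer(self, token_id: int, sender: str, to: str) -> None:
        # Only the current owner may move a token. On a real chain, `sender`
        # is established by a digital signature, not simply asserted.
        if self._owners.get(token_id) != sender:
            raise PermissionError("only the current owner can transfer this token")
        self._owners[token_id] = to

def can_play_with(registry: CollectibleRegistry, player: str, token_id: int) -> bool:
    # Any game, from any developer, can run this same check against the ledger.
    return registry.owner_of(token_id) == player

registry = CollectibleRegistry()
registry.mint(1, "ali")
registry.mint(2, "bob")
print(can_play_with(registry, "ali", 1))  # True
```

Because any game can read the same registry, ownership does not depend on the continued existence of the company that issued the item.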

Cryptonetworks of Web 3.0

The technology in this space is still very nascent. This is the very beginning. Countless building blocks are still missing. There are many problems: problems of performance, privacy, custody and key management, identity, protocol governance, and developer and user experience. The list goes on, but as these limitations are gradually addressed, it will become possible to use this new set of Lego building blocks to build things that we can hardly imagine today: things as complex as search engines and social networks that will become platforms in their own right. Like the protocols of Web 1.0, the cryptonetworks of Web 3.0 are open source, collectively owned, and can be trusted to remain neutral.

We see this as the foundation for another wave of innovation that is just as large as, if not larger than, the startup ecosystem on the Internet so far.

We can hardly hope to imagine what this wave will look like when all is said and done, but we can already see the beginning of a progression: it starts with solutions to some of the inefficiencies in our financial system; it progresses to fully programmable marketplaces like Filecoin; to the first crypto-enabled games, like CryptoKitties; and finally to an architecture for the Internet that can serve as a platform for the yet uncharted Web 3.0.

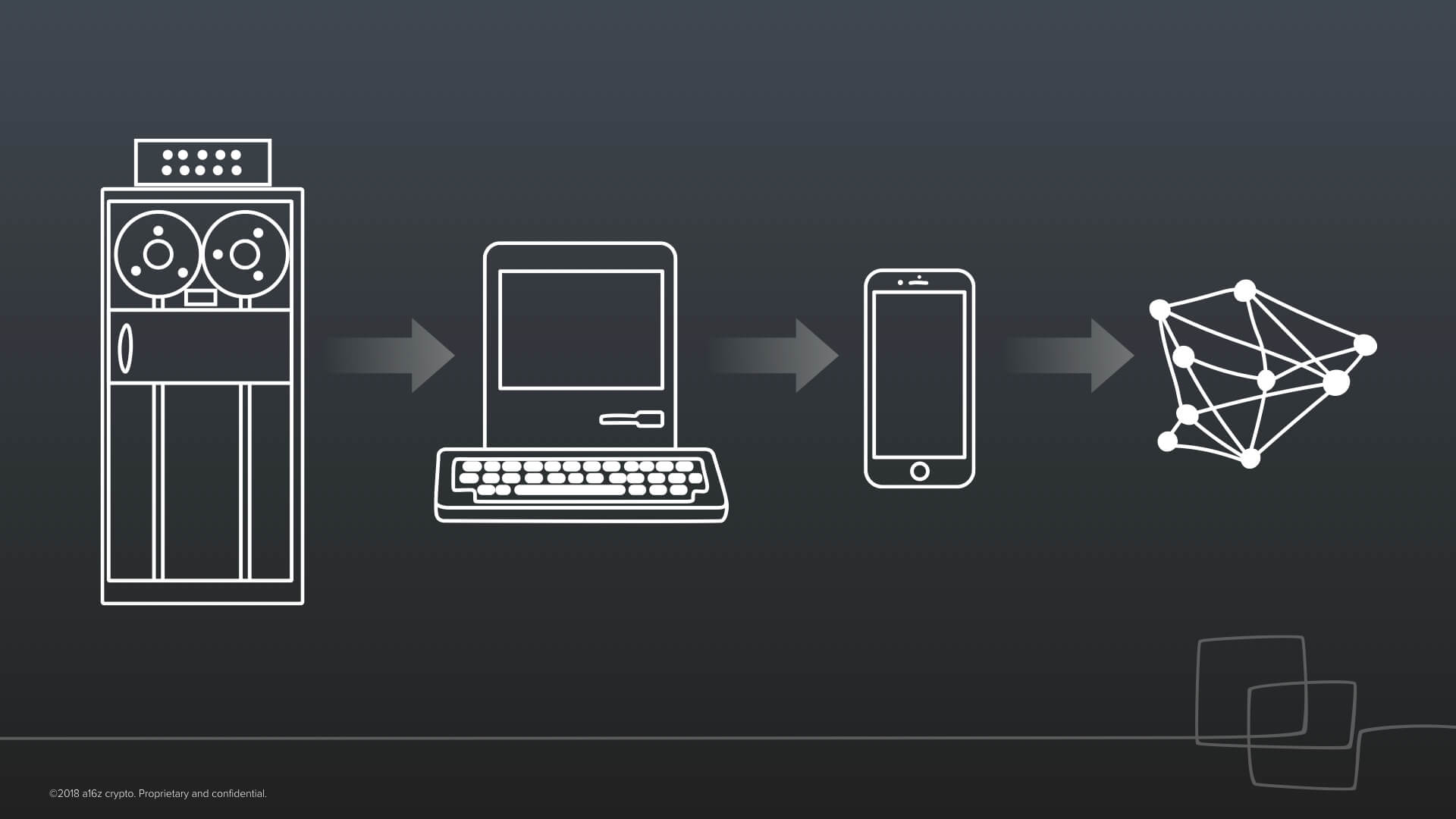

This is a fundamentally new paradigm for computing. We’ve gone from mainframes to the personal computer to the smartphone era, and now on to cryptonetworks. The key feature of this new paradigm, previously unheard of, is that trust can now be software: it is programmable.

And, through programmable trust, cryptonetworks stand to enable human cooperation at a scale that is completely unprecedented. Trust is becoming unbundled, decentralized, and inverted –– instead of flowing top down from institutions to individual people, it is now beginning to emerge bottom up from individuals and software.