It’s been an eventful year in the world of generative music.

In April, the first viral AI cover dropped: ghostwriter’s “Heart on My Sleeve,” which woke the music world up to the fact that AI-generated music could not only exist but could also be good.

Soon after, Google unveiled MusicLM, a text-to-music tool that generates songs from a basic prompt; Paul McCartney used AI to extract John Lennon’s voice for a new Beatles track; and Grimes offered creators 50% of the royalties for streams of songs that used an AI clone of her voice. Perhaps most importantly, Meta open sourced MusicGen, a music generation model that can turn a text prompt into high-quality samples. This move alone spawned a flurry of new apps that use and extend the model to help people create tracks.

Just as instruments, recorded music, synthesizers, and samplers each greatly expanded the number of creators and consumers of music when they were introduced, we believe generative music will help artists make a similar creative leap by blurring the lines between artist, consumer, producer, and performer. By dramatically reducing the friction from idea to creation, AI will allow more people to make music, while also boosting the creative capabilities of existing artists and producers.

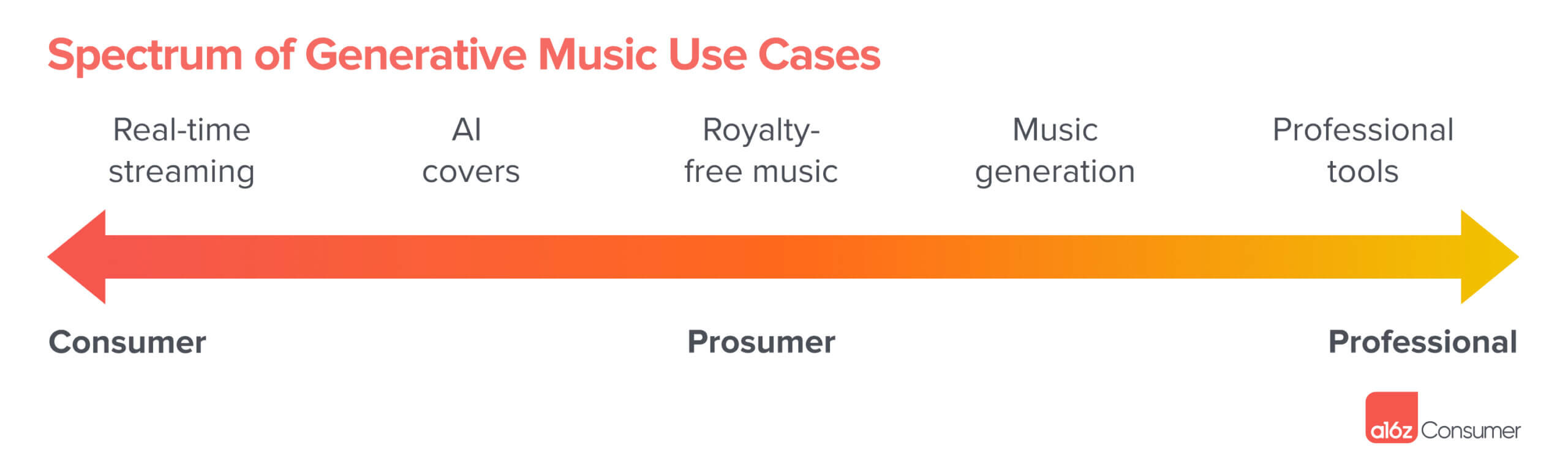

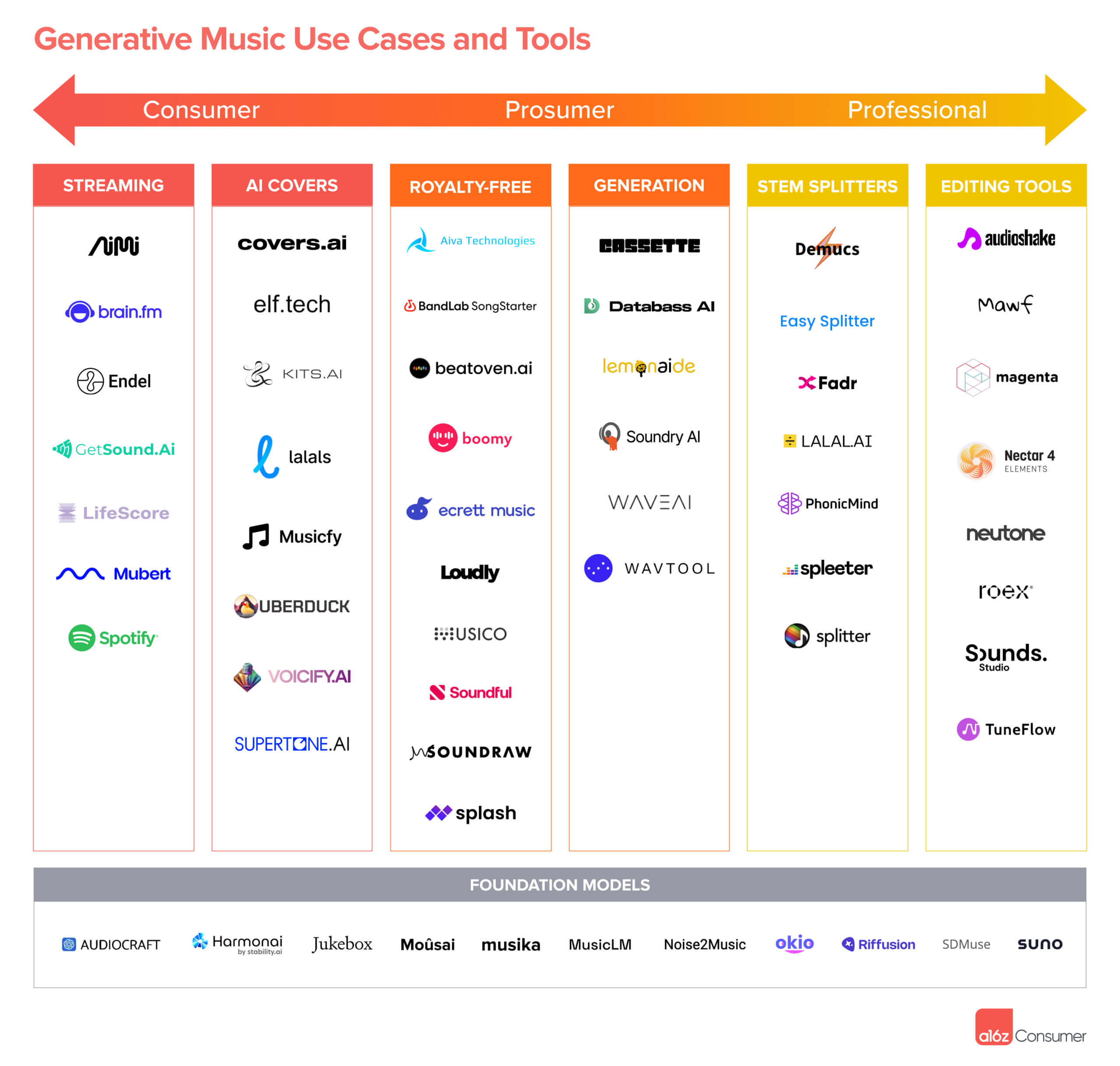

In this post, we’ll dive into what people are doing today, explore where AI music might be headed, and outline a few of the emerging companies and capabilities at the forefront of the space. For ease of comprehension, this post is structured around 5 core use cases, but we’ve found that another helpful way to categorize these products is by target audience: products built for everyday consumers differ greatly from those built for prosumers and creators with commercial use cases. We demonstrate the overlap below:

Real-Time Music Streaming

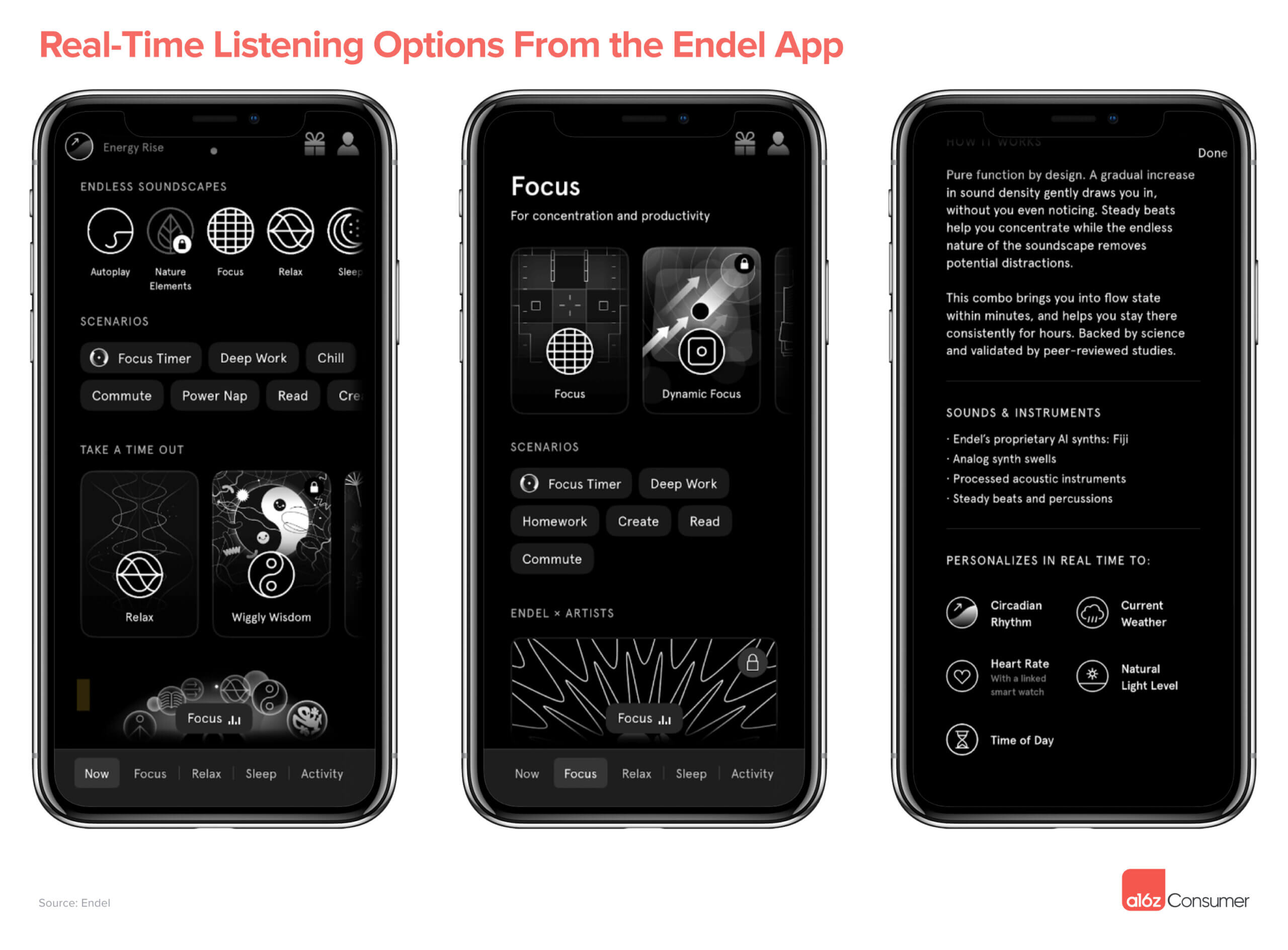

Thus far, most of the emerging generative streaming products have been in the functional music category: apps like Endel, Brain.fm, and Aimi. They generate never-ending playlists to help you get into a certain mood or headspace, and then adapt based on the time of day and your activity. (However, functional music is starting to converge with traditional music, as powerhouse labels like UMG partner with generative music companies like Endel to create “functional” versions of popular new releases.)

In the Endel app, you can hear how different the sound is in “deep work” mode versus “trying to relax” mode. Endel has also partnered with creatives to produce soundscapes based on their work, a kind of generative album.

Most of the products in the music streaming space have focused on soundscapes or background noise, and they don’t generate vocals. But it’s not hard to imagine a future where AI-powered streaming apps also create more traditional music with AI-generated vocals. Just as recorded music gave rise to the long-playing (LP) album as a format, one can imagine generative models enabling “infinite songs” as a new format.

This gets more interesting if you don’t have to prompt the product with text. What if you could instead provide general guidance on the genres or artists you’re interested in, or even allow it to learn from your past listening history with no prompt required? Or what if the product connects to your calendar to serve the perfect “pump up” playlist before a big meeting?

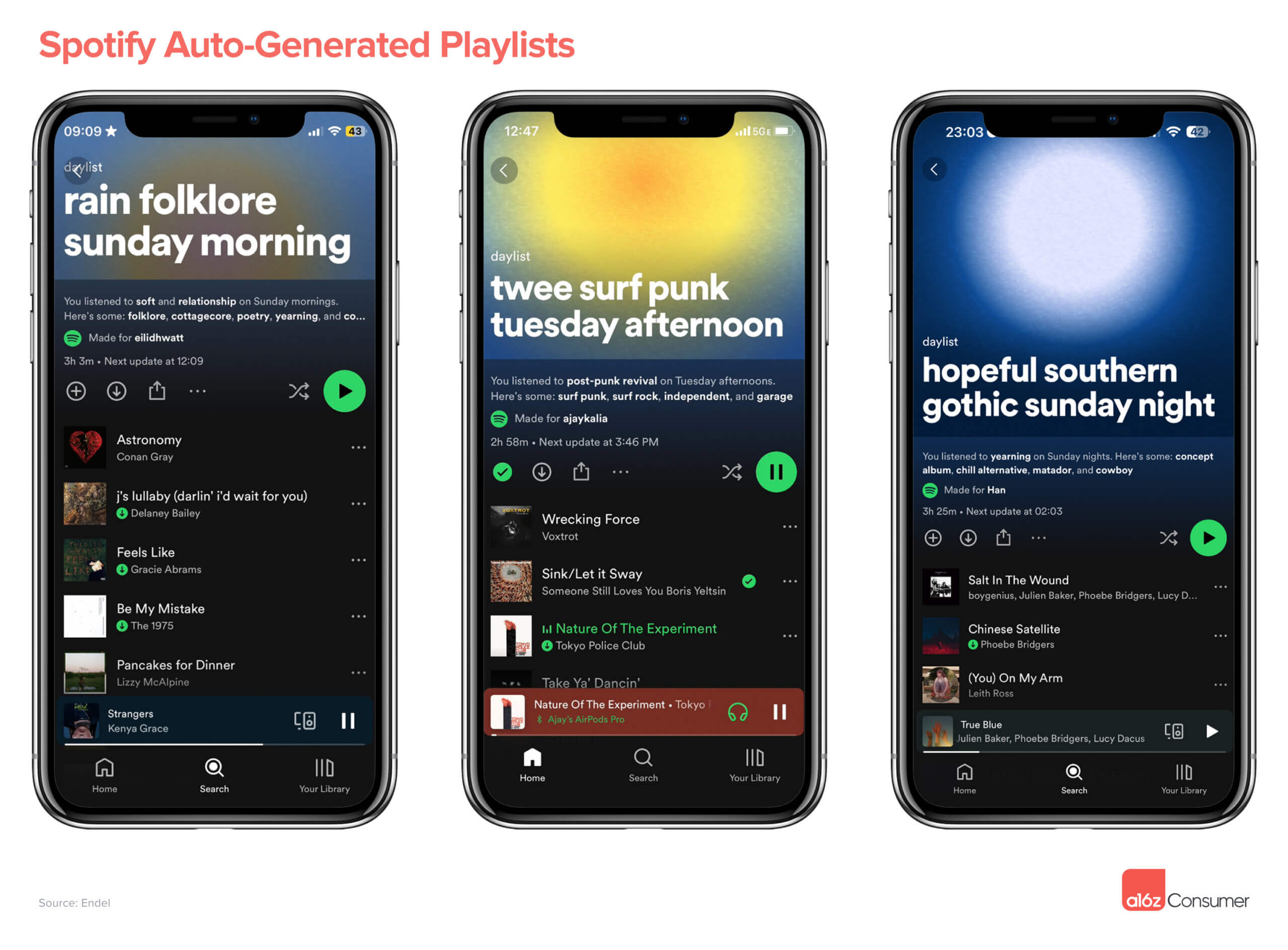

Spotify has been making strides toward personalized, auto-generated playlists. In February, they launched an AI DJ that sets a curated lineup of music alongside commentary. It’s based on the latest music you’ve listened to as well as old favorites — plus it constantly refreshes the lineup based on your feedback. And this month, they unveiled “Daylist” — an automated playlist that updates multiple times a day based on what you typically listen to at specific times.
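To make the idea of a playlist that shifts with the time of day more concrete, here is a minimal sketch of one way it could work: bucket your listening history by time of day and surface the genres you play most in the current bucket. This is an illustrative assumption, not how Spotify implements Daylist; the bucket boundaries, the `(timestamp, genre)` history format, and the function names are all hypothetical.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Hypothetical time-of-day buckets (start hour, label); not Spotify's actual segmentation.
BUCKETS = [(5, "morning"), (12, "afternoon"), (17, "evening"), (22, "late night")]


def bucket_for(hour: int) -> str:
    """Map an hour (0-23) to a coarse time-of-day bucket."""
    label = "late night"  # hours before 5 a.m. wrap around to "late night"
    for start, name in BUCKETS:
        if hour >= start:
            label = name
    return label


def top_genres(history, now: datetime, k: int = 2):
    """Return the k genres played most often in the same bucket as `now`.

    `history` is a list of (datetime, genre) pairs (a hypothetical format).
    """
    counts = defaultdict(Counter)
    for played_at, genre in history:
        counts[bucket_for(played_at.hour)][genre] += 1
    return [genre for genre, _ in counts[bucket_for(now.hour)].most_common(k)]


history = [
    (datetime(2023, 9, 1, 8), "lo-fi"),
    (datetime(2023, 9, 2, 9), "lo-fi"),
    (datetime(2023, 9, 2, 7), "indie folk"),
    (datetime(2023, 9, 1, 23), "techno"),
]
print(top_genres(history, datetime(2023, 9, 5, 10)))  # morning picks
```

A real product would rank individual tracks and learn from feedback rather than count genres, but the time-bucketing idea is the same.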

Unsurprisingly, Spotify isn’t generating new music, but instead curating these playlists from existing songs. But the most evolved version of this product would likely involve an AI-generated and human-created mix of content, soundscapes, instrumentals, and songs.

AI Covers

AI-generated covers were arguably the first killer use case for AI music. Since “Heart on My Sleeve” dropped in April, the AI cover industry has exploded, with videos labeled #aicover racking up more than 10 billion views on TikTok.

Much of this activity was started by creators in the AI Hub Discord, which had more than 500,000 members before it was shut down in early October after repeated copyright violation claims (as discussed below, these legal concerns remain unresolved). The server has since splintered into more private communities where users train and share voice models for specific characters or artists. Many use retrieval-based voice conversion (RVC), which essentially transforms a clip of someone speaking (or singing!) into another person’s voice. Despite the legal uncertainty, some experts have even written guides on how to train a model and make covers with it, and they post links to models they’ve trained for others to download.
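The “retrieval” in retrieval-based voice conversion can be illustrated with a toy. In RVC-style pipelines, an encoder typically turns audio into per-frame feature vectors; each source frame is then pulled toward the most similar frames from the target singer’s training data before a vocoder resynthesizes audio. The sketch below shows only that retrieve-and-blend step on plain Python lists. It is a simplified assumption of how such systems work: the function names, `k`, and `index_rate` are illustrative, and the real encoder, search index, pitch extraction, and vocoder are omitted.

```python
import math


def nearest(query, bank, k):
    """Return the k vectors in `bank` closest to `query` by Euclidean distance."""
    return sorted(bank, key=lambda v: math.dist(query, v))[:k]


def retrieve_and_blend(source_frames, target_bank, index_rate=0.75, k=2):
    """Pull each source feature frame toward the target speaker's nearest frames.

    index_rate = 1.0 keeps only the retrieved target features; 0.0 keeps the source.
    """
    out = []
    for frame in source_frames:
        neighbors = nearest(frame, target_bank, k)
        # Average the retrieved neighbors dimension by dimension.
        retrieved = [sum(dim) / len(neighbors) for dim in zip(*neighbors)]
        # Linear blend between retrieved (target-like) and original (source) features.
        out.append([index_rate * r + (1 - index_rate) * s for r, s in zip(retrieved, frame)])
    return out


source = [[0.0, 0.0]]                                  # one toy 2-D source frame
target = [[1.0, 1.0], [1.0, 3.0], [10.0, 10.0]]        # toy target-speaker feature bank
print(retrieve_and_blend(source, target))
```

The blend ratio is the design lever here: higher values make the output sound more like the target voice, at the risk of smearing the source singer’s pronunciation.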

Running one of these models locally requires some technical sophistication, but a number of browser-based alternatives now do the heavy lifting for you. Musicfy, Voicify, Covers, and Kits are some examples of new products trying to streamline the process. Most require you to upload a clip of yourself (or someone else) singing to transform the voice, but we expect that text-to-song is on the horizon (Uberduck is already doing this for rappers).

The major unresolved problem with AI covers is legal rights, which are important to take into consideration if you’re working in this space.

However, similar legal uncertainty has accompanied earlier shifts in technology. Consider the litigation and claims around sampling that defined the early years of hip hop. After years of lawsuits beginning in the early 1990s, many of the “original” sampled artists realized that reaching an economic arrangement with those who wished to sample their work was positive-sum, both creatively and financially. Labels dedicated entire teams to clearing samples, and Biz Markie even released a tongue-in-cheek album titled “All Samples Cleared!”

While some labels and artists feel threatened by AI music, others see opportunity: they can earn passive income when other creators generate songs that use their voice, no work required! Grimes is the highest-profile example, having released Elf.tech, a product that enables others to create new songs with her voice. She has pledged to split royalties on any AI-created song made with her voice that generates revenue.

We expect to see infrastructure emerge to support this on a greater scale. For example, artists need a place to store their custom voice models, track AI covers, and understand streams and monetization across tracks. Some artists or producers may even want to use their voice models to test different lyrics, see how a given voice sounds on a song, or experiment with different collaborators on a track.
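As a small illustration of the bookkeeping such infrastructure would handle, here is a hypothetical ledger that totals what each voice owner is owed across AI covers. It assumes a flat percentage split (50 by default, mirroring the Grimes offer mentioned earlier) and revenue tracked in integer cents; the `CoverTrack` type and `artist_payouts` function are invented for this sketch, and real deals would involve more parties and terms.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CoverTrack:
    title: str
    voice_model: str    # which artist's voice model the cover used (hypothetical field)
    revenue_cents: int  # revenue the track earned, in integer cents


def artist_payouts(tracks, split_percent=50):
    """Total the voice owner's share of revenue per voice model, in cents.

    Integer arithmetic avoids floating-point rounding; fractions of a cent are dropped.
    """
    totals = defaultdict(int)
    for track in tracks:
        totals[track.voice_model] += track.revenue_cents * split_percent // 100
    return dict(totals)


tracks = [
    CoverTrack("cover a", "grimes", 1000),
    CoverTrack("cover b", "grimes", 250),
    CoverTrack("cover c", "other-artist", 400),
]
print(artist_payouts(tracks))
```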

Royalty-Free Tracks (aka AI Muzak)

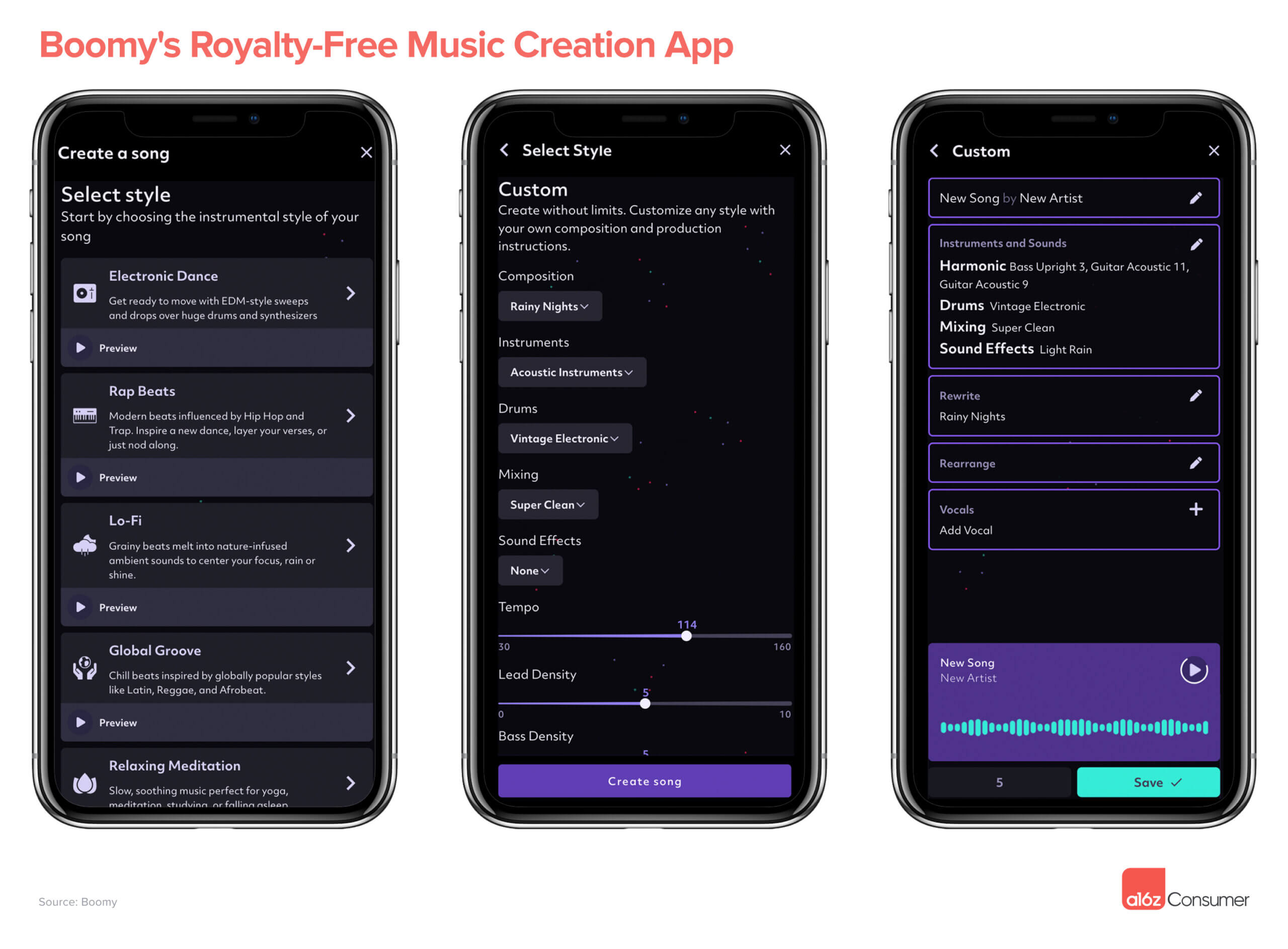

Moving into prosumer tools: if you’ve ever created a YouTube video, podcast, or any type of video content for a business, you’ve probably struggled to find royalty-free music. While stock music libraries exist, they’re often hard to navigate, and the best tracks tend to be overused. There is even an often-mocked genre of music that defines this forgettable, but royalty-free, sound: “muzak,” or “elevator music.”

Enter AI-generated music. Products like Beatoven, Soundraw, and Boomy make it easy for anyone to generate unique, royalty-free tracks. These tools typically allow you to choose a genre, mood, and energy level for your song, and then use your inputs to auto-generate a new track. Some of these tools enable you to edit the output if it’s not quite right, e.g., increasing or decreasing the tempo, adding or subtracting certain instruments, or even rearranging notes.
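Edits like changing the tempo are easiest to picture on a note-level representation. Assuming a tool keeps its output as MIDI-like note events before rendering audio (an assumption; none of the products named here document their internals), a tempo change is a uniform rescaling: a beat lasts 60 / bpm seconds, so every onset and duration scales by old_bpm / new_bpm. The `Note` type and `change_tempo` function are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    pitch: int       # MIDI note number
    start: float     # onset time in seconds
    duration: float  # note length in seconds


def change_tempo(notes, old_bpm, new_bpm):
    """Rescale note timings so a passage written at old_bpm plays at new_bpm.

    A beat lasts 60 / bpm seconds, so all times scale by old_bpm / new_bpm.
    """
    factor = old_bpm / new_bpm
    return [replace(n, start=n.start * factor, duration=n.duration * factor) for n in notes]


melody = [Note(60, 0.0, 0.5), Note(64, 1.0, 0.5)]
slower = change_tempo(melody, old_bpm=120, new_bpm=60)  # half speed
print(slower)
```

Adding or removing instruments is similarly simple at this level (dropping one instrument’s note list), which is one reason tools may edit symbolically before rendering audio.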

We expect the future of royalty-free music will be almost entirely AI-generated. This genre has already become commoditized, so it’s not hard to imagine a world where all background music is created by AI, and we break the historical tradeoff between quality and cost.

The early adopters of these products have largely been individual content creators and SMBs. However, we expect these tools to move upmarket, both through traditional enterprise sales to larger companies like game studios and through music generation embedded in content creation platforms via APIs.

Music Generation

Perhaps the most exciting implication of large models combined with music is the potential for bedroom producers and other prosumers (including those who lack formal music training) to create professional-grade music. A few of the key capabilities here will include:

- Inpainting: Taking a couple of notes that a producer plays and “filling” out the phrase.

- Outpainting: Taking a section of a song and extrapolating what the next few bars might look like. This is already supported by MusicGen with the “continuation” setting.

- Audio to MIDI: Converting audio to MIDI including pitch bend, velocity, and other MIDI attributes, as available via Spotify’s Basic Pitch product.

- Stem separation: Decomposing a song into stems such as vocals, bassline, and percussion using technologies like Demucs.
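Audio-to-MIDI conversion has two parts: detecting the pitch being played over time (the hard part, which Basic Pitch handles with a neural network) and then mapping each detected frequency onto MIDI. The second step is standard arithmetic: the fractional MIDI note is m = 69 + 12 · log2(f / 440), since A4 = 440 Hz is note 69 and an octave spans 12 semitones. The sketch below rounds to the nearest note and expresses the remainder as a 14-bit pitch-bend value (0 to 16383, with 8192 meaning no bend). The ±2 semitone bend range is a common default but an assumption here, and the function names are hypothetical.

```python
import math


def hz_to_midi(freq_hz: float) -> float:
    """Fractional MIDI note number: A4 = 440 Hz = note 69, 12 semitones per octave."""
    return 69 + 12 * math.log2(freq_hz / 440.0)


def to_note_and_bend(freq_hz: float, bend_range_semitones: float = 2.0):
    """Split a detected pitch into the nearest MIDI note plus a 14-bit pitch-bend value.

    8192 is the centre (no bend); the bend range is assumed, since synths differ.
    """
    exact = hz_to_midi(freq_hz)
    note = round(exact)
    offset = exact - note  # leftover detuning in semitones, roughly within [-0.5, 0.5]
    bend = 8192 + round(offset / bend_range_semitones * 8192)
    return note, max(0, min(16383, bend))  # clamp to the valid 14-bit range


print(to_note_and_bend(440.0))                      # A4, no bend
print(to_note_and_bend(440.0 * 2 ** (0.25 / 12)))   # A4 bent up a quarter semitone
```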

You can imagine a future producer’s workflow looking something like this:

- Grab an appropriately cleared song you want to sample

- Split the stems and convert an interesting audio element to MIDI

- Play a few notes on a synthesizer and then use inpainting to fill out the phrase

- Extrapolate that phrase to a few additional phrases using outpainting

- Compose a track (further using generative tech to create one-shots), replicate or extend studio musicians, and master the track in a specific style
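The outpainting step in this workflow can be illustrated with a deliberately tiny stand-in for a generative model: a first-order Markov chain learned from the producer’s own phrase, which extends the phrase by repeatedly choosing a likely next note. This is a swapped-in toy technique, not how MusicGen works (its continuation operates on audio rather than notes) or how any product named here works; the function names are hypothetical and notes are plain MIDI numbers.

```python
from collections import Counter, defaultdict


def learn_transitions(phrase):
    """Count how often each MIDI note is followed by each other note."""
    table = defaultdict(Counter)
    for current, nxt in zip(phrase, phrase[1:]):
        table[current][nxt] += 1
    return table


def outpaint(phrase, n_notes):
    """Extend `phrase` by up to n_notes, greedily choosing the most frequent next note.

    Ties go to the lower pitch so the output is deterministic. Stops early if the
    current note was never followed by anything in the source phrase.
    """
    table = learn_transitions(phrase)
    out, current = [], phrase[-1]
    for _ in range(n_notes):
        options = table.get(current)  # .get avoids creating empty entries
        if not options:
            break
        current = min(options, key=lambda p: (-options[p], p))
        out.append(current)
    return out


riff = [60, 62, 64, 62, 60, 62, 64]  # C D E D C D E
print(outpaint(riff, 4))
```

Greedy choice keeps the toy predictable; a real model would sample from learned probabilities to avoid looping, which this example does visibly.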

We’ve also started to see the rise of software-only products that focus on various parts of the production stack; for example, generating samples (Soundry AI), melodies (MelodyStudio), MIDI files (Lemonaide, AudioCipher), or even mixing (RoEx).

It will be critical for these models to be multimodal and to accept music and other audio as input, since many people lack the vocabulary to describe the exact sounds they’re seeking. We expect to see a tight loop between hardware and software, including the rise of “generative instruments”: DJ controllers and synthesizers that embed these ideas directly into the physical product.

Professional Tools

Finally, we’ll touch on a newer category of AI music products: professional tools that are used in the workflow of music producers, artists, and labels. (Note that while we say professional, many of these products also serve indie or amateur creators.)

These products vary widely in complexity and use cases, as well as the extent to which they integrate within the traditional production workflow. We can split them into 3 major categories:

- Browser-based tools that focus on one element of the creation or editing pipeline and are accessible to all; you don’t need to use traditional production software to benefit from them. For example, Demucs (an open source model from Meta), Lalal, AudioShake, and PhonicMind do stem splitting.

- AI-powered plugins (commonly called VSTs, for Virtual Studio Technology) that run inside digital audio workstations (DAWs) like Ableton Live, Pro Tools, and Logic Pro. These plugins, including Mawf, Neutone, and iZotope, can be used to synthesize or process sound within a producer’s existing workspace without requiring them to re-home their workflow.

- Products that are trying to reinvent the DAW entirely with an AI-first approach, making it more accessible to a new generation of consumers and professionals alike. Many of the most popular DAWs today are 20+ years old; startups like TuneFlow and WavTool are tackling the ambitious challenge of building a new version of the DAW from the ground up.

Music’s Midjourney Moment

Products like Midjourney and Runway have allowed consumers to create impressive visual content that previously would have required knowledge of and access to expensive, specialized and cumbersome tools. Already, we’re seeing creative professionals such as graphic designers adopt these early generative AI tools to expedite their workflows and iterate on content more quickly. We expect to see analogous products in music — AI-powered tools that decrease the friction from inspiration to expression to zero.

This “Midjourney moment” for generative music — when creating a quality track becomes fast and easy enough for everyday consumers to do it — will have massive implications for the music industry, from professional producers and artists to a new class of consumer creators.

Our ultimate dream? An end-to-end tool where you provide guidance on the vibe and themes of the track you’re looking to create, in the form of text, audio, images, or even video, and an AI copilot then collaborates with you to write and produce the song. We don’t imagine the most popular songs will ever be entirely AI generated — there’s a human element to music, as well as a connection to the artist that can’t be replaced — however, we do expect AI assistance will make it easier for the average person to become a musician. And we like the sound of that!

If you’re building here, reach out to us at anish@a16z.com and jmoore@a16z.com.

-

Justine Moore is a partner on the investing team at Andreessen Horowitz, where she focuses on AI — both foundation models and applications.

-

Anish Acharya is an entrepreneur and general partner at Andreessen Horowitz. At a16z, he focuses on consumer investing, including AI-native products and companies that will help usher in a new era of abundance.