This is a written version of a presentation I gave live at the a16z Summit in November 2018. You can watch a video version on YouTube.

Skynet is coming for your children — or is it?

In July 2017, I published a primer about artificial intelligence, machine learning and deep learning. Since then, I’ve been obsessively reading the headlines about machine learning, and in general, you will see two broad categories of articles on the front page. One category of headlines is “Robots are Coming for Your Jobs,” which predicts that we are headed inexorably towards mass unemployment. Even sober organizations like McKinsey seem to be forecasting doom-and-gloom scenarios in which one-third of workers are out of a job due to automation by 2030:

If that’s not scary enough, here is headline category number two: “Skynet is coming for your children.” In other words, AI is going to get smarter than humans at everything, and we’re going to end up the also-ran species on planet Earth.

But if you flip to the back pages of the newspapers or read more obscure journals, you’ll find stories of technology getting loans to first-time borrowers, delivering blood and vaccines to patients, and saving lives on the battlefield and on beaches.

So the counter-narrative I want to offer to the mainstream, front-page accounts is that with careful, thoughtful, empathic design, we can enable ourselves to live longer, safer lives. We can create jobs where we are doing more creative work. We can understand each other better.

But before I get to the many, many examples of where this is already happening today, let me share what’s happening in the AI ecosystem more broadly.

What’s happening in the AI ecosystem

The AI ecosystem is thriving, from universities to businesses to the halls of government around the world. Here are three anecdotes that illustrate how vibrant the ecosystem is. First up, here’s a fun fact from the world of academic AI research. The biggest gathering of researchers is a conference called Neural Information Processing Systems (recently renamed NeurIPS). It started meeting in 1987, and in 2018, the conference sold out in 11 minutes and 38 seconds. OK, it’s not quite Beyoncé, whose stadium-sized concerts can sell out in 22 seconds, but we’re getting there.

Second: businesses across a spectrum of industries seem to be actually investing in AI. Here’s the perspective from Accenture CTO Paul Daugherty, who’s been at the company for more than three decades.

When we hosted Paul recently on the a16z podcast, he shared, “I’ve been in my company 32 years and been working in this industry for a while and been part of growing everything from our Internet business to our cloud, mobile, IoT businesses, all those things. Nothing has moved as fast across the organization as AI is moving right now. No trend has grown as fast in terms of real spending, in terms of headcount, focused on whatever measure you wanna use. AI is the fastest growing trend we’ve ever seen in terms of enterprise impact. It’s also the first one of all those trends that’s remarkably diversified across industries and across parts of the organization.”

Third: with the academics publishing more papers and businesses implementing more projects, the politicians are naturally trying to position their own country or region as the best place on the planet to build AI-powered startups or do machine learning research. Many countries have announced their plans to foster an AI ecosystem, and no country takes this more seriously than China, which wants to be the undisputed world leader in AI by the year 2030 (see the official Chinese development plan). They’re even publishing cute infographics to get the word out to all of their people:

It’s now up to all of us together to harness this tremendous energy to benefit all humanity. With thoughtful, careful, and compassionate design, we can become more creative, make better decisions, live longer, gain superpowers in the physical world, and even understand each other better. Let me share many real-world examples of how AI is helping us in each of these categories.

1: Automating the routine enables us to be more creative

Shakespeare had Hamlet say this about humanity:

While we are capable of logic and reason, we are also wonderfully creative (“infinite in faculties”). When machines automate the mundane and routine tasks, we will have more time, energy, and attention for the so-called “right-brain” thinking that machines are currently not good at, such as holistic thinking, empathy, creativity, and musicality. Let me share a few examples.

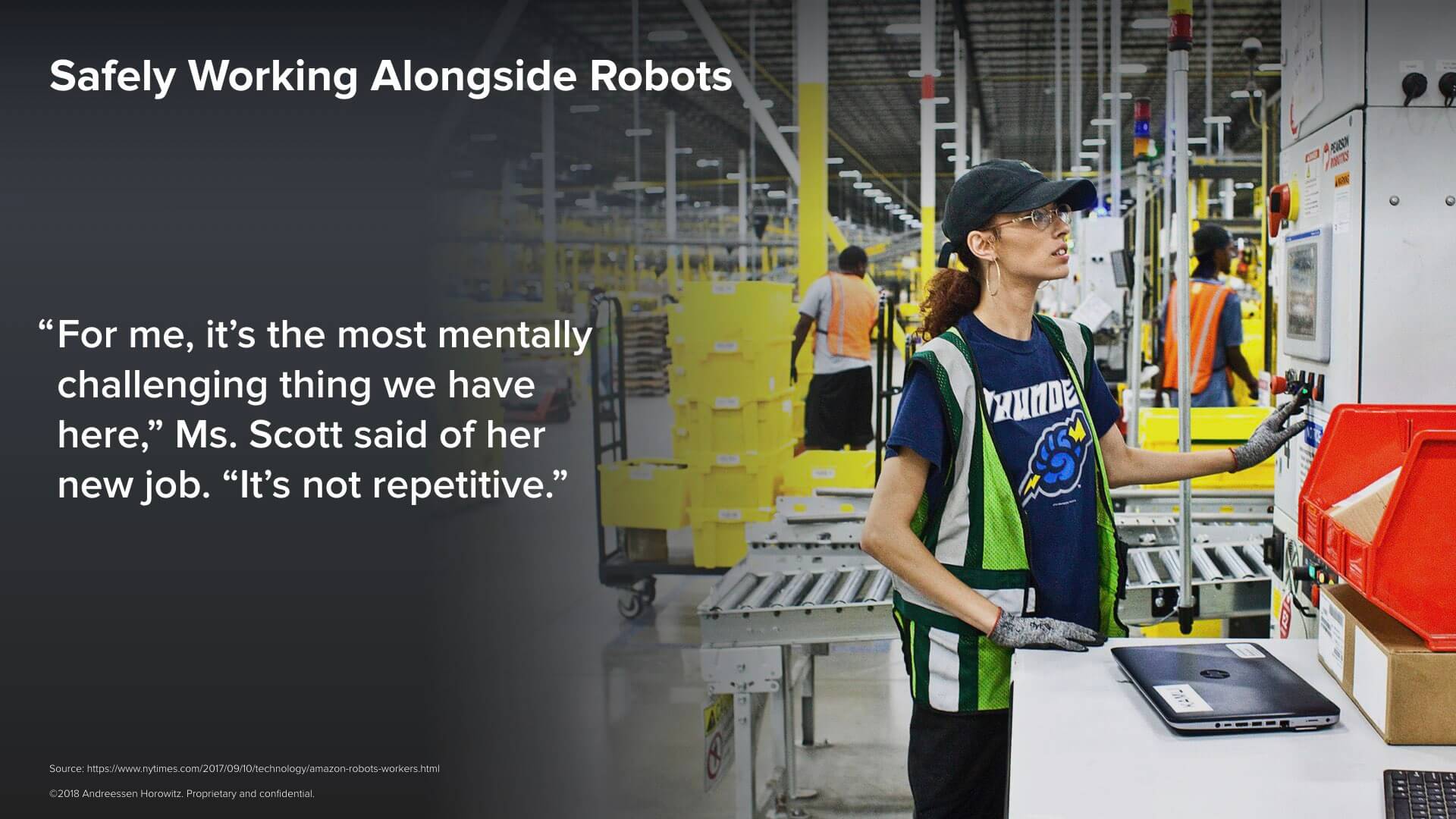

We are increasingly addicted to Amazon Prime and its global equivalents, with its same-day or two-day free delivery service. To feed our addiction, Amazon has been building distribution centers closer and closer to our homes and deploying hundreds of thousands of Kiva robots to carry 25-pound yellow bins from one location to another.

Alongside those robots, Amazon has been hiring hundreds of thousands of human workers to position the Kiva robots and troubleshoot issues when the robots get stuck. Nissa Scott, shown in the picture above, says about her job in a New York Times article, “For me, it’s the most mentally challenging thing we have here. It’s not repetitive.” When machines take over simple repetitive tasks, humans are freed to do what they do best: thinking outside of the box and solving complex problems in creative ways.

Here’s another example.

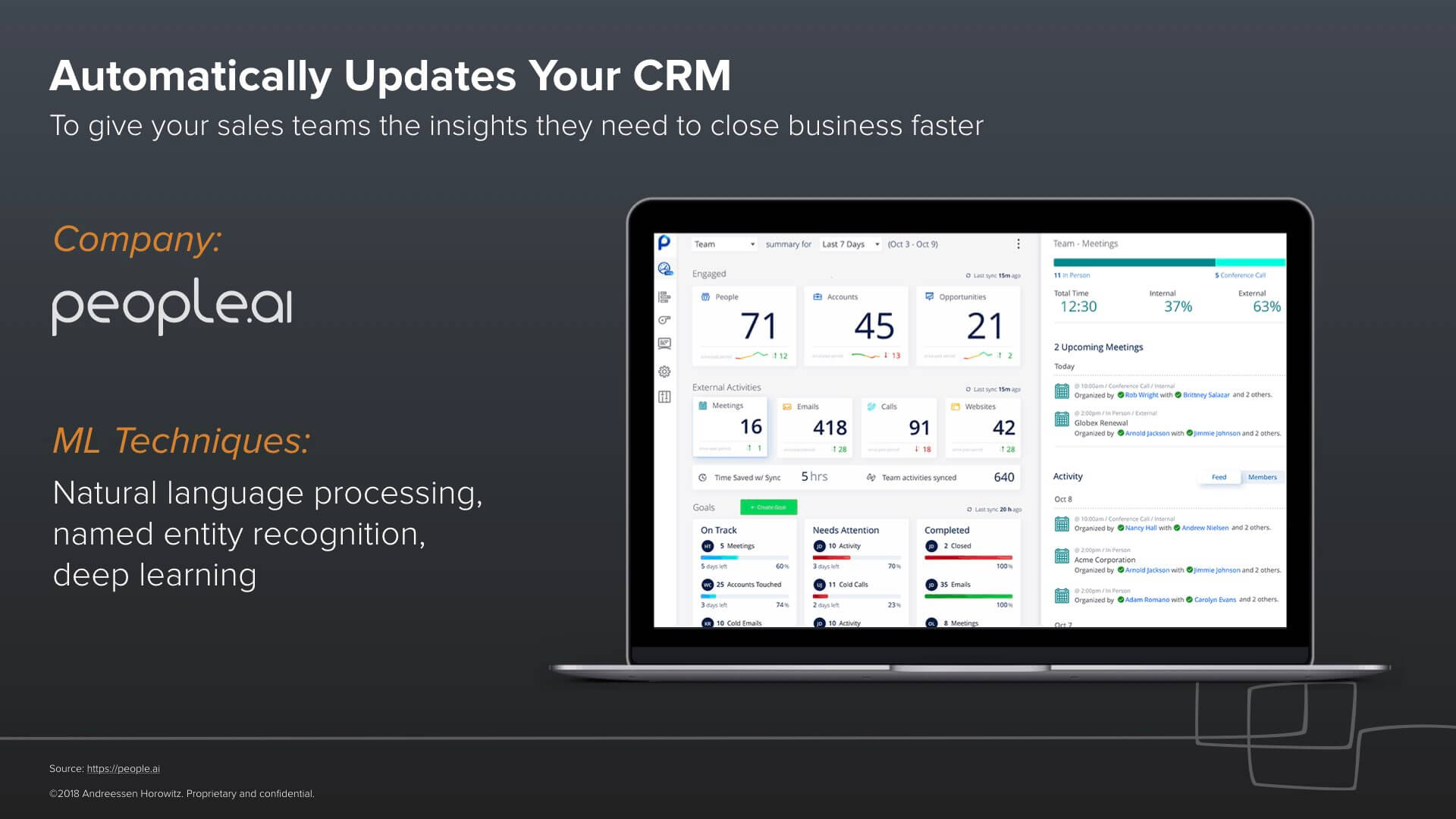

Many sales and marketing workers spend hours tediously inputting data into their Customer Relationship Management (CRM) systems. But despite sales management’s best efforts, these systems are often missing data or, worse, populated with the wrong information. Our portfolio company People.ai uses machine learning to automatically populate data into CRMs. With complete, up-to-date CRMs, sales and marketing people can finally generate and act on the insight they wanted out of their CRMs in the first place, such as knowing that we should call our prospect Alison on Friday afternoon, because that’s when she really wants to find out about how new systems can help her with her daily routine. Freed from the routine task of typing into CRMs, salespeople can focus on understanding other people’s problems and using their empathy and creativity to help solve those problems.

Where else can we automate the routine? How about preparing for trials? One of the first steps in preparing for a trial is gathering the evidence.

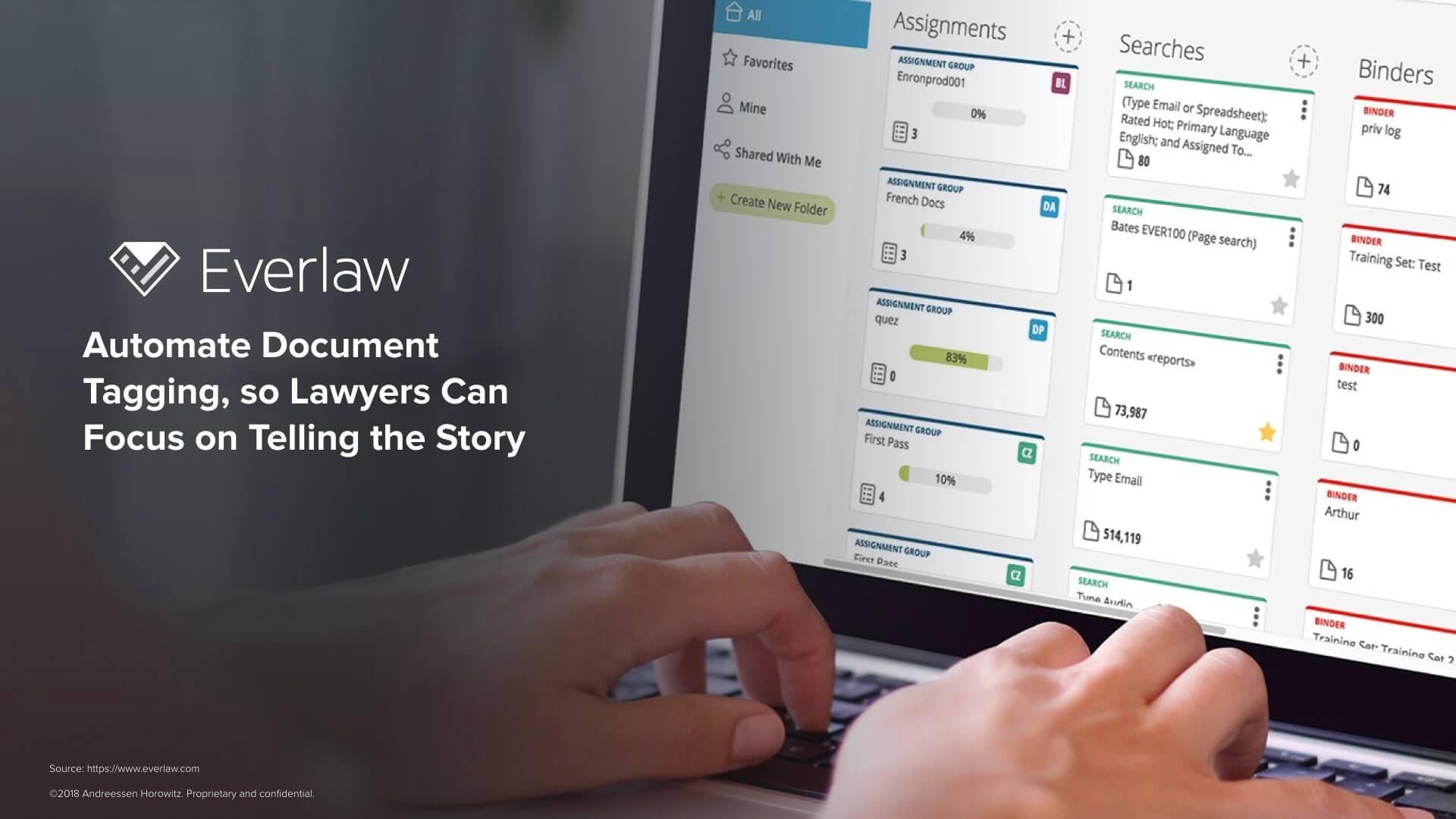

Traditionally, trial lawyers would sift through terabytes of documents, pictures, videos, emails, and other evidence as they prepare to argue a case. They have to figure out which of these documents are relevant to the case, what they are about, and how they tie together. Without software, it can take hundreds or thousands of hours for paralegals to comb through each document or picture.

One of our portfolio companies, Everlaw, simplifies this process by using machine learning to quickly process, sort, and tag files relevant to a case and store them for easy access. As a result, lawyers working that case are able to use their talents on the more creative task of connecting the dots and coming up with the compelling big-picture story that a jury can follow.

So-called natural language processing (NLP) systems — software that understands language — are finding their way across other applications as well. Dialpad is a Web-based phone and conferencing service which can transcribe audio recordings of conversations you have.

Once transcribed, Dialpad analyzes the text for signs of anger or frustration using a machine learning technique called sentiment analysis. If the system detects calls where people are getting frustrated, it can help agents use their creativity, empathy and IQ to get the conversation back on track.
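To make the idea of sentiment analysis concrete, here is a minimal sketch in Python. It is a toy word-list scorer, not Dialpad’s system (production systems typically rely on trained models rather than hand-written lists). The word lists, the function names, and the 0.15 threshold are all assumptions made up for illustration.

```python
import re

# Hypothetical word lists, chosen only for illustration.
NEGATIVE_WORDS = {"angry", "frustrated", "ridiculous", "unacceptable", "cancel", "terrible"}
POSITIVE_WORDS = {"thanks", "great", "perfect", "helpful", "appreciate"}


def frustration_score(transcript: str) -> float:
    """Return (negative word count - positive word count) / total words."""
    words = re.findall(r"[a-z']+", transcript.lower())
    if not words:
        return 0.0
    negative = sum(word in NEGATIVE_WORDS for word in words)
    positive = sum(word in POSITIVE_WORDS for word in words)
    return (negative - positive) / len(words)


def needs_attention(transcript: str, threshold: float = 0.15) -> bool:
    """Flag a call when the score reaches an assumed threshold."""
    return frustration_score(transcript) >= threshold


print(needs_attention("This is ridiculous, I am frustrated and I want to cancel"))
print(needs_attention("Thanks, that was great and very helpful"))
```

A flagged call is only a prompt for a human to step in; the judgment about how to rescue the conversation stays with the agent.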

We can bring these technologies to the doctor’s office as well. The last time I visited my primary care physician, he spent around 35 minutes with me, and of that time, for about 25 minutes, he was interacting with his laptop rather than me, trying gamely to keep my electronic health record up to date. Suki is a startup working on reconnecting doctors with their patients by listening to the conversation between a patient and their doctor, and automatically updating the patient’s medical records.

By automating the routine, the doctor can focus on the creative, empathic, and emotional part of patient care. When these technologies (as well as systems which help diagnose problems) make it into most doctors’ offices, I believe we’ll choose our doctors largely based on who has the best bedside manner because diagnosis and keeping systems up to date will be mostly automated.

I’ll finish this section with my favorite example of automating the routine. This is my personal favorite because in my family I am the designated photographer and videographer. In general, my kids are pretty well-behaved, but I can always get them to roll their eyes at me when I ask them to pose for a picture because when I do, I’m pulling them (and me) out of the moment. Skydio helps us all stay in the moment with their hardware and software solution called the Skydio R1 that automates videography. The Skydio R1 positions its camera exactly where it needs to be, keeping up with the action, to capture the memory.

2: Machine learning gives us superpowers in the physical world

Our human perception systems are amazing. Our eyes can distinguish between 10 million different colors at very detailed resolutions. But there are also well-known limitations to our perceptual systems. This is why we have bomb-sniffing dogs and not bomb-sniffing humans. Our memories are also worse than many of us would like to admit. Our brains are designed to forget, for good reason, a lot of what we see and hear. What if we could design around the limitations of our human perceptual system and give ourselves superpowers in the real world? Machine learning helps us do exactly that. For example, Pindrop gives banks, retailers, and government organizations the power of super hearing.

Pindrop’s service helps prevent voice fraud, an increasingly common way for hackers to steal identities and money. By analyzing nearly 1,400 acoustic attributes, the system can help organizations figure out if you are really you when you call in on the phone, a channel that still accounts for up to 78% of how we choose to interact with our merchants, government agencies, and banks. It does this by listening to our voices (which deepen into middle age and then rise in pitch as we age) as well as the noise on the line introduced by our phones and phone networks. Using proprietary machine learning algorithms, Pindrop can use the noise on the line to predict whether you are calling from your iPhone at home or using Skype from Estonia. This super-hearing capability helps organizations dramatically reduce the number of successful fraud attempts.

Machine learning also gives us super-seeing superpowers in a growing set of indoor farms operated by companies such as Iron Ox and Bowery Farms.

It’s hard for us as humans to watch things grow slowly and to compare them over time: our perceptual systems are designed to notice fast-moving predators rather than slow-growing spinach. Machine vision systems, on the other hand, can be trained to know exactly what a healthy head of lettuce is supposed to look like on day 36 of its life. For lettuce that isn’t thriving, the farm can automatically adjust its temperature, lighting conditions, water level, nutrient mix, humidity, and other factors to get to optimum health. Crop yields from these indoor farms can be as high as 15 pounds per square foot of growing space, compared to 7 pounds for efficient greenhouses and 1 pound for field-grown crops, and the farms can reach these growing efficiencies with 95% less water and no pesticides. The power of super-seeing makes us more efficient farmers.

Super-seeing can also be used inside factories. Despite massive investment in robotics technology worldwide, around 90% of all factory work is still done by human hands. The challenge is that there is a lot of turnover in factories. It’s hard to keep workers on these jobs. An extremely well run factory in the United States might have a 30% turnover rate a year, driving expensive re-recruiting and re-training costs. In China, it’s not unusual to have a 2% turnover rate a day, which means that in a few months, you have a completely new workforce. With turnover so high, it’s critical for factories to minimize the cost of training each new employee.
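The turnover arithmetic is worth spelling out. The short Python sketch below rests on a simplifying assumption that is not from any factory’s data: a constant 2% of the current workforce leaves each day and is replaced, so the share of the original crew still on the line shrinks geometrically. The function name is purely illustrative.

```python
def original_workers_remaining(daily_turnover: float, days: int) -> float:
    """Fraction of the starting workforce still employed after `days`,
    assuming a constant fraction of current workers leaves each day."""
    return (1.0 - daily_turnover) ** days


for days in (30, 60, 90):
    share = original_workers_remaining(0.02, days)
    print(f"after {days} days: {share:.0%} of the original workers remain")
```

Under that assumption, only about one in six of the original workers is still there after three months, which is why the cost of training each new hire matters so much.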

Drishti is bringing super-seeing to these factories. By capturing and analyzing video as the workers assemble products, the system can suggest personalized actions for each worker to help them stay on track. Factories using Drishti have seen a 25% increase in labor productivity and a 50% decrease in error rates.

When we bring super-seeing abilities to construction sites, we can help keep the whole crew safer, on budget, and on schedule. Doxel gathers data from ground robots and drones using LiDARs (the same laser-based sensors used on self-driving cars) and cameras each day after workers have gone home.

By building accurate 3D maps (with up to 2 mm accuracy), Doxel knows what got built (“what is”) and compares it with the building plans (“what should be”). And it can then forecast when major parts of the building will be finished.

Video credit: IEEE, Doxel

This daily loop helps keep the flow of the right workers with the right materials and tools to the site at the right time. It also helps prevent errors you might have experienced during your own home remodel project, such as putting up the drywall before the plumber or electrician has finished the behind-the-wall piping or cabling.

Using this system from Doxel, Kaiser Permanente helped increase the productivity of construction workers by 38% and finished its Viewridge Medical Office 11% under budget.

Of course, we can’t talk about AI super-powers without talking about self-driving cars. One of the second-order effects of advanced driver assistance systems leading to full self-driving cars is that we’re going to have reliable witnesses at accident sites. Everywhere a self-driving car goes, we’ll have sensor data from a dozen cameras, a few LiDARs, multiple radars and ultrasonic sensors, and so on. Think about what that would mean for the courts and insurance companies. Instead of relying on eyewitness testimony — and even when no one is around — we can reconstruct what happened at the scene of an accident. In September 2018, Tesla released Software Version 9.0 to cars it built after August 2017. With this software release, users could record the car’s camera data to a USB flash drive, and many drivers started doing exactly that. This video is from a Tesla driver in North Carolina:

Fortunately, no one was hurt in the accident, but the dashcam footage illustrates how useful having video cameras is when there are no eyewitnesses: the Honda driver clearly maneuvered illegally into oncoming traffic, but without the video footage, this situation could have turned into a standoff in which both drivers insisted they were not to blame.

Here’s my final example of machine learning systems that give us superpowers in the real world. An Israeli company called OrCam makes a product called MyEye 2, which clips onto a pair of glasses to help people with vision problems (such as blindness, vision impairments, dyslexia) navigate the world. The product takes video of the environment and describes what’s in the video. It’s able to read text from a book, say the names of people as they approach (a feature I’d love, even though I have normal vision!), distinguish between $5 and $50 bills, identify products at a grocery store, and more.

3: Helping us make better decisions

Machine learning algorithms can help us make better decisions by minimizing human biases, taking a more complete data set into account, and otherwise compensating for known shortcomings in our decision-making software. While the age-old computer science axiom of “garbage in, garbage out” still applies, carefully designed systems with fully representative data sets can help us make smarter decisions.

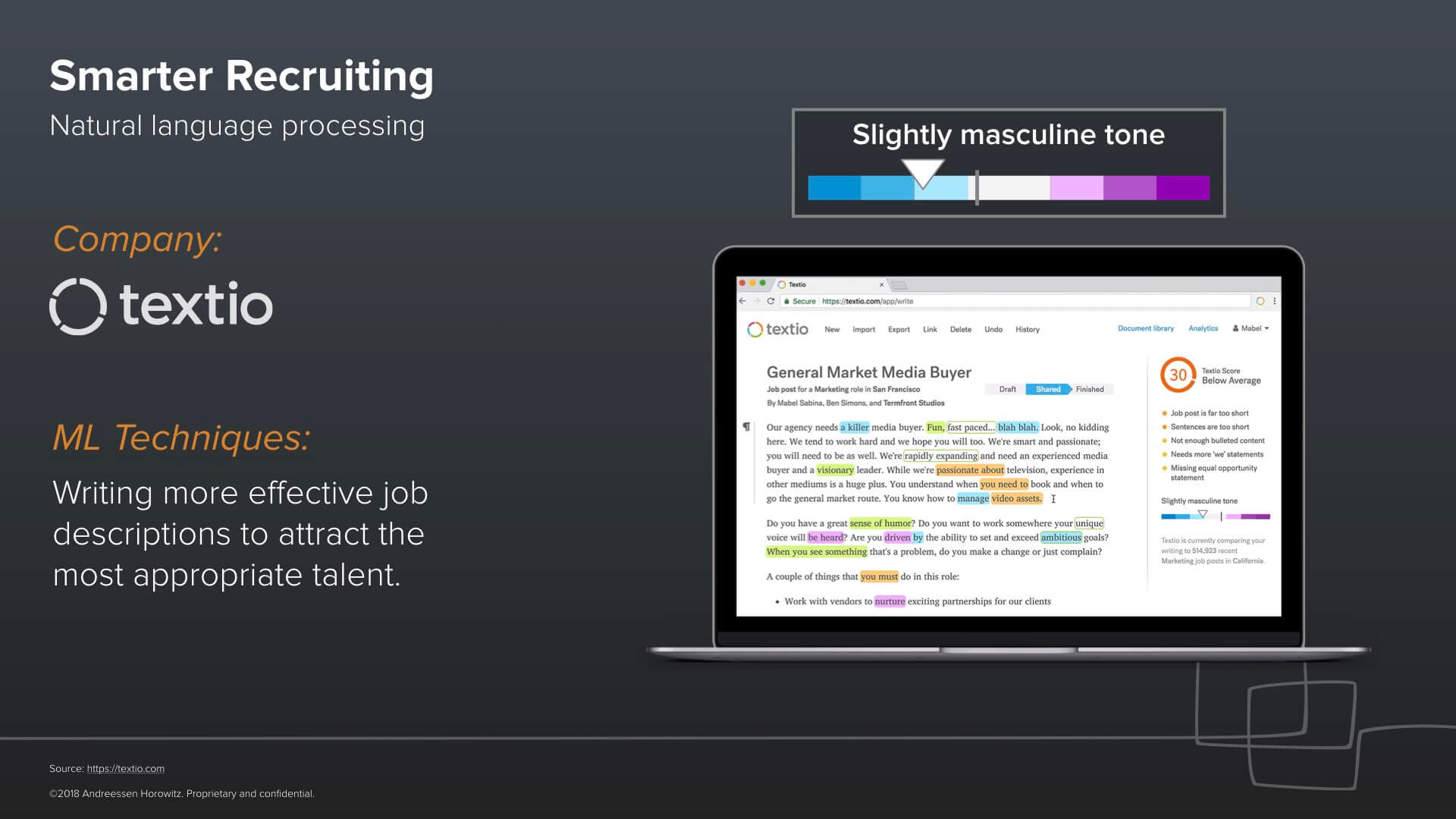

Consider recruiting. Textio makes a software product that helps people write job descriptions that are most likely to attract someone qualified and capable of doing a specific job. Started by a team of Microsoft Office veterans, the company provides on-screen guidance as you write. For example, Textio will alert you when you use words that have been found to be more appealing to one gender over another at a specific point in time. At the time of this presentation, words such as exhaustive, enforcement, and fearless are statistically proven to skew your talent pool towards men.

Textio also points out regional differences. For instance, if you use “a great work ethic” to describe your ideal candidate, this may appeal to workers in San Jose but will deter workers in the Everett, Washington region. You are better off leaving that phrase out if you’re targeting a candidate pool in Everett. Given that Textio has access to a broad data set — existing job descriptions, application statistics, and work performance data — it can help eliminate blind spots in your own recruiting process.

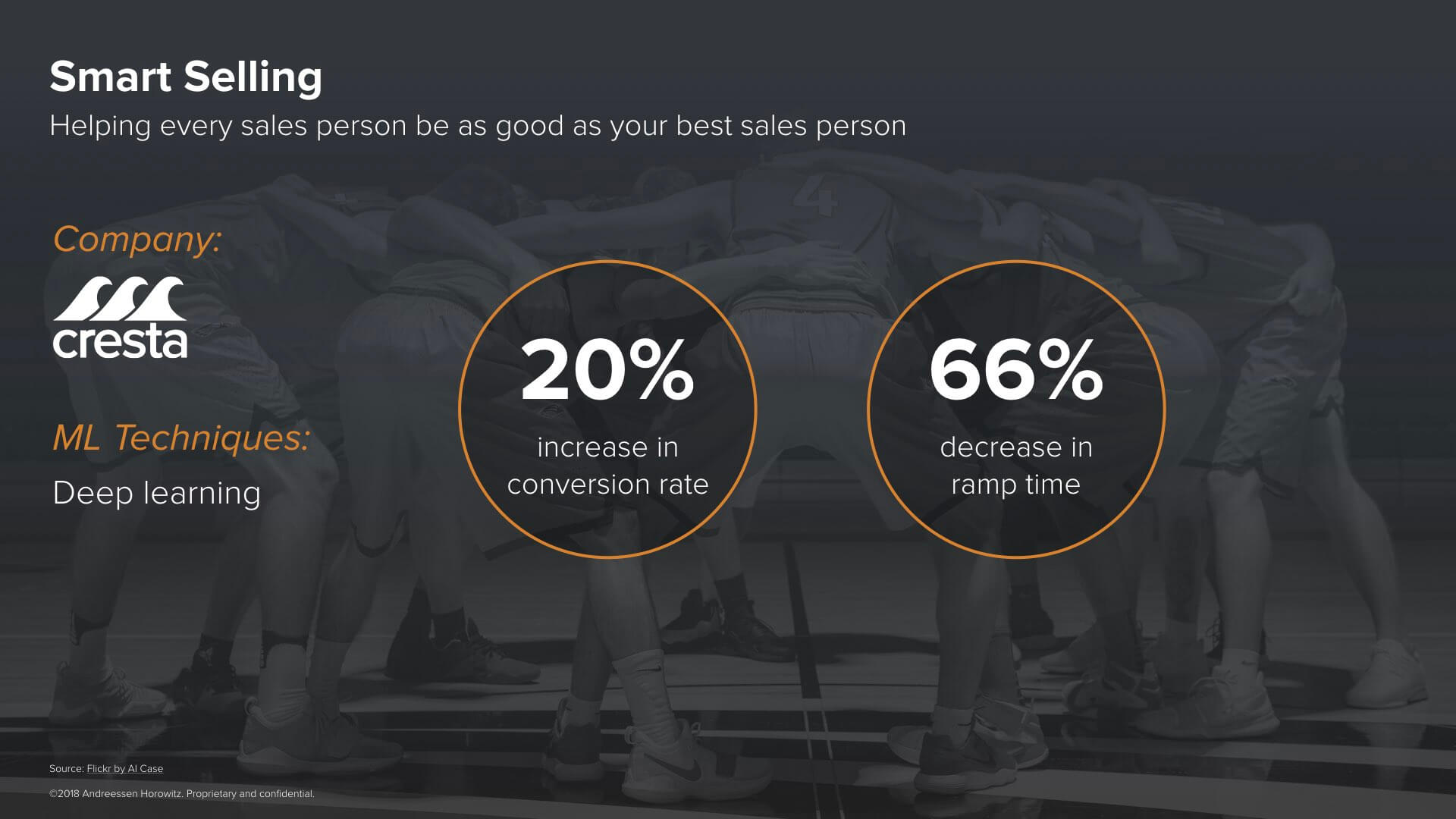

Machine learning can also help figure out what makes your best people so effective and help turn novices into experts on day one. Cresta.ai watches how the most effective salespeople interact with prospects as they chat: figuring out what the prospect needs, recommending products, answering questions. The system extracts best practices and turns them into real-time suggestions for less experienced or less effective reps as they interact with their prospects.

In deployments with customers such as Intuit, sales teams have experienced conversion rates go up 20% and ramp time go down 66%: in other words, reps are closing more business with less training than without the system. This system is better for both the sales people and the customer. As one Intuit rep describes, “I was worried that using this system would take away the personal relationship or conversations with the customer, but having this AI as a job aide assists in personalizing the conversation.”

Machine learning can also help improve decision making for very skilled professionals, such as geologists hunting for a specific mineral. Inside a lithium-ion battery (such as the one in your phone, laptop, or electric vehicle) is likely a mineral called cobalt which helps the battery stay fresh over many charge and discharge cycles. The need for cobalt is growing rapidly as battery factories such as Tesla’s Gigafactory are producing more lithium-ion batteries to put in their cars. Unfortunately, 65% of the world’s known cobalt reserves are in the Democratic Republic of the Congo, which is politically unstable and may have as many as 40,000 children working in cobalt mines (as reported by Amnesty International and CBS).

If we had a reliable political partner in the DRC’s government, we might try to improve working conditions for all miners, but we should also look for new sources of cobalt. That’s exactly what KoBold Metals is doing. By looking at many different data sources such as topography data, the plants that grow in a region, magnetic and electromagnetic patterns, water and weather patterns, types of rock, and so on, the system can help geologists find likely locations for cobalt — before we send very expensive expedition teams to dig up the earth.

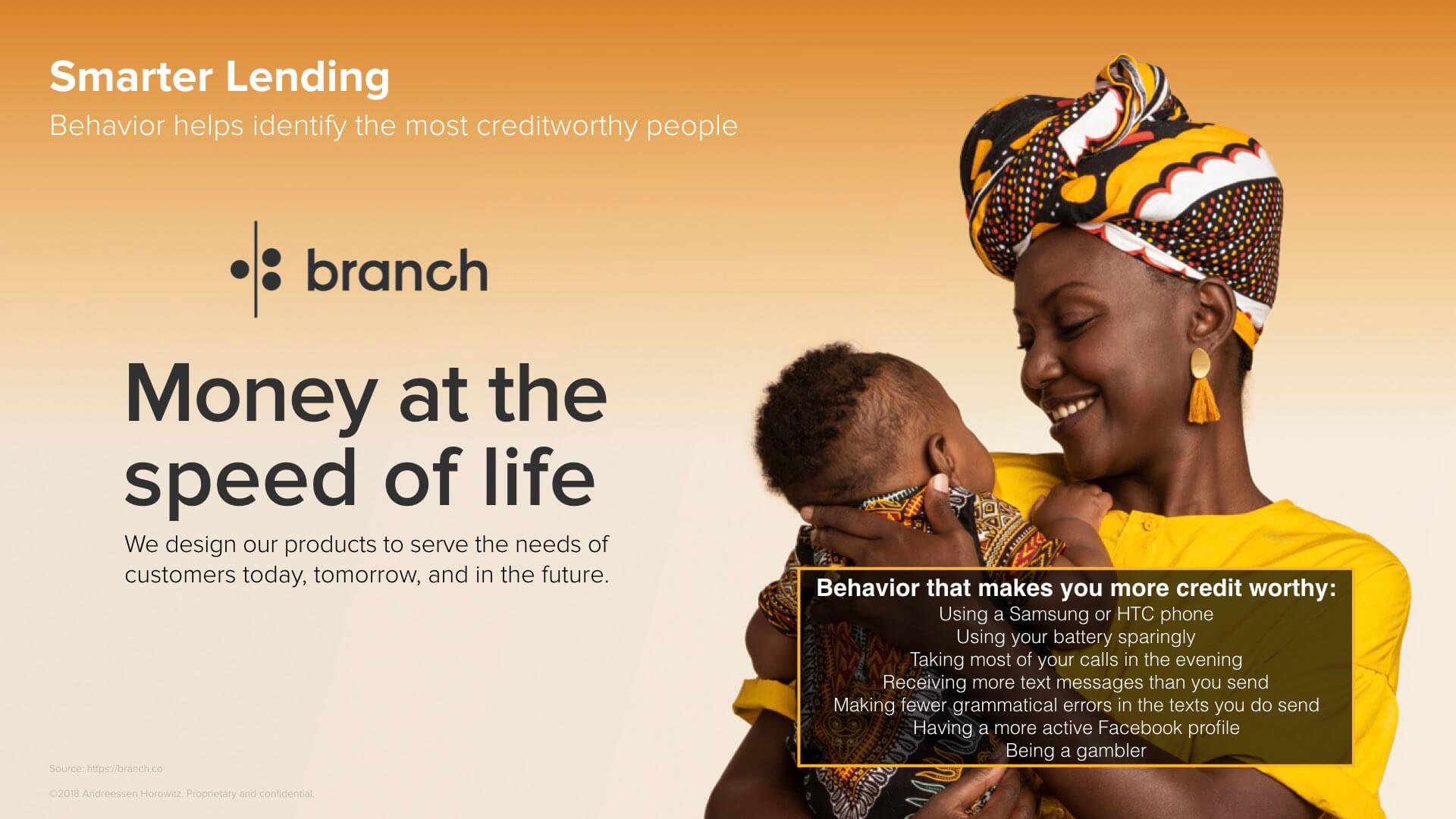

Another great example is in fintech. Issuing loans to first-time borrowers, especially in third world countries like Kenya, Tanzania, or Nigeria, is risky. In these countries, credit bureaus either don’t exist or aren’t able to reach everyone, leaving whole swaths of the population unable to qualify for traditional loans. Our portfolio company Branch has been working to qualify first-time borrowers for loans in these areas. Without credit bureaus, Branch sought to qualify borrowers based on phone usage and behavior data gathered via its mobile app. Using machine learning, Branch analyzed the data and identified several behavioral indicators that have been shown to predict the likelihood of loan repayment.

Here are some surprising factors or behaviors that make Branch borrowers more likely to repay their loans:

- Using a brand-name phone like a Samsung or HTC

- Using your battery sparingly

- Taking most of your calls in the evening

- Receiving more text messages than you send

- Having a more active Facebook profile

- Running gambling apps on your phone

Most of the indicators on the list are not too surprising, with the exception of the last one. The data found that if an individual is a gambler and has gambling apps on their phone, they are more likely to repay their loans. If this seems a little counterintuitive to you, you’re not alone. It just goes to show how off-the-mark a human decision maker can be due to personal bias and past experience.

Does that mean algorithms are just better than humans at all decision making? Not yet.

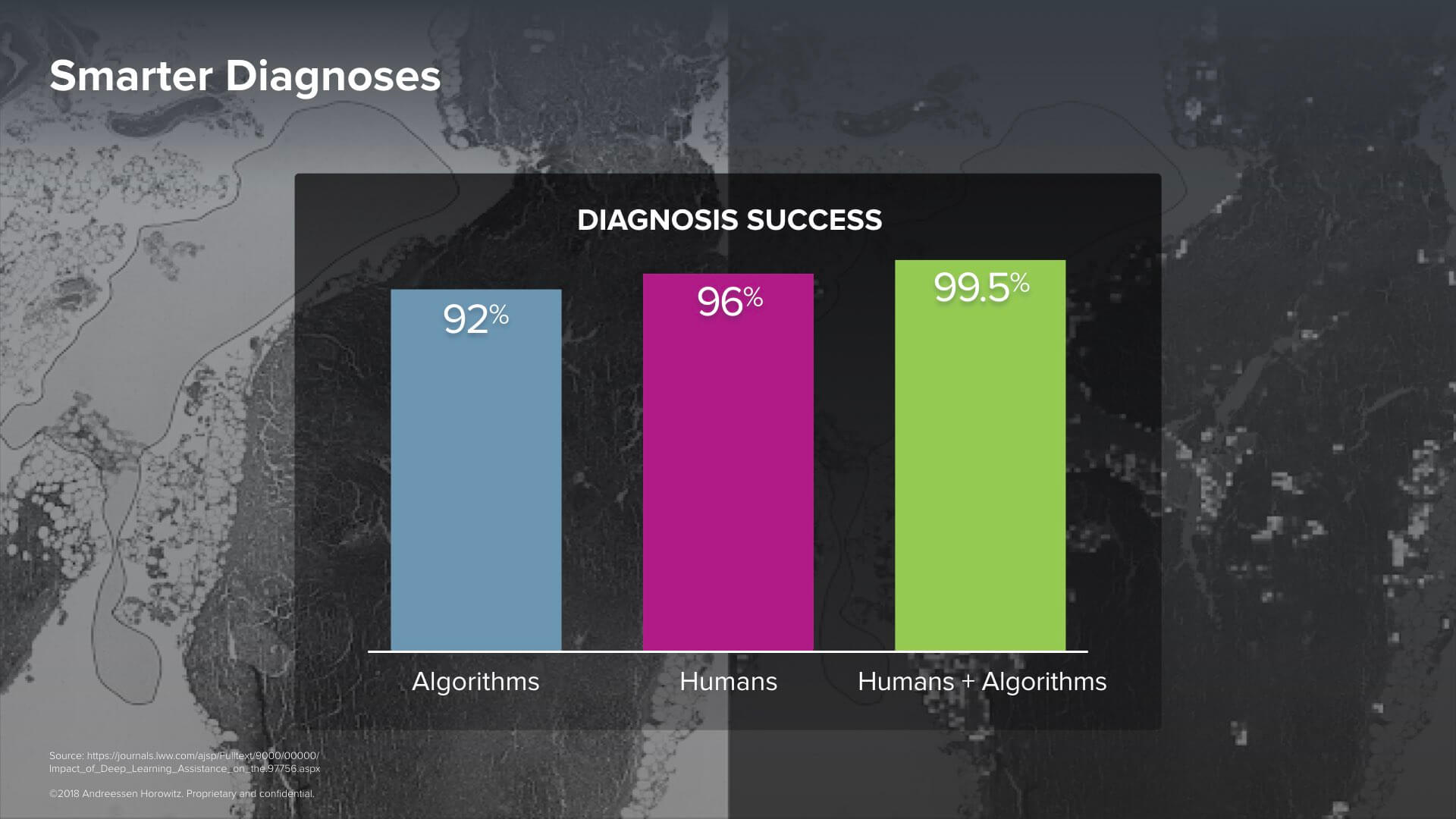

Studies comparing performance at diagnosing cancer from tissue samples (such as this one at Harvard Medical School published in June 2016 and another led by Google AI Health researchers published in October 2018) have shown that when humans compete with algorithms, the team that consistently delivers the most accurate diagnoses is humans working together with the algorithms, outperforming both algorithms alone and humans alone.

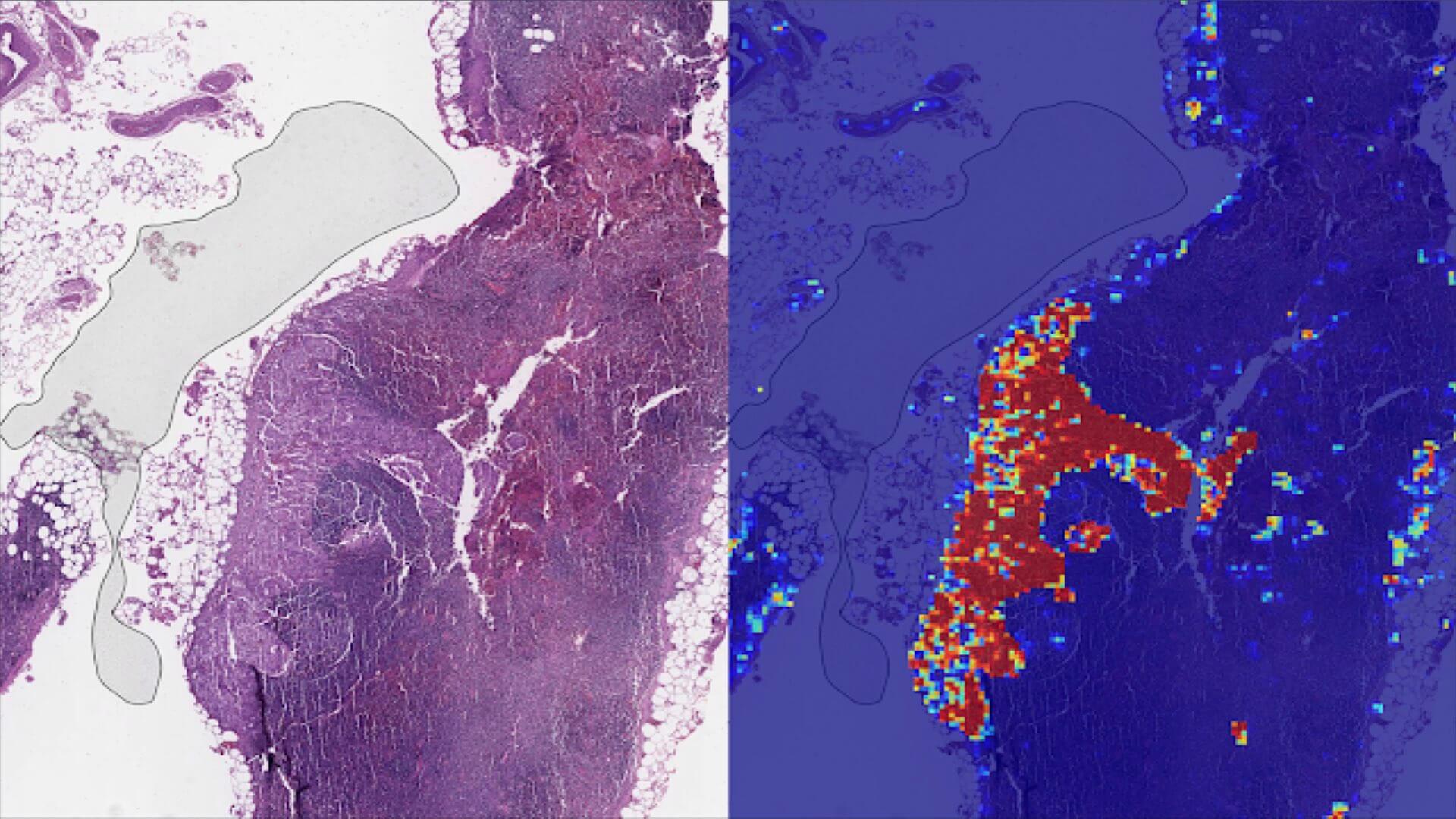

To give you a sense of what doctors are using to diagnose cancer, here are two side-by-side images. The one on the left shows a tissue slice which has been stained. The one on the right shows the results of a computer vision algorithm superimposing a “heat map” that shows areas it considers more (in red) or less (in blue) likely to contain cancerous tissue.

Why are humans with algorithms outperforming both algorithms alone and humans alone? One possibility is that the algorithms improve decision making in much the same way that grammar and spell checkers help improve our writing. Generally, these checkers do a pretty good job of catching many of our typos and grammar mistakes (true positives). But occasionally, the checker will flag something correct as incorrect (a false positive) or give a grammatical suggestion that you don’t agree with. In those instances, you end up overriding the checker. The end result, through this push-and-pull, challenge-and-override process, is writing that is better than either party could have written on its own.

4: Automating dangerous jobs & tasks makes us safer

Some jobs are obviously dangerous, such as ocean rescues, delivering blood and vaccines to remote areas, and charging into a building to see if there are hostile soldiers in it. Startups and non-profit organizations are helping to automate each one of these jobs.

Watch this dramatic video of what may be the world’s first ocean rescue by drone captured on film:

An organization called Little Ripper was flying the drone over the surf to gather video footage. They planned to build a machine learning model that would identify sharks in the water to act as an early warning system for surfers and swimmers. During a training mission to gather footage, a real-life distress call came in. Within 70 seconds, drone operators were able to fly over to the distressed swimmers and drop flotation vests to them, rescuing them — all without sending a team of lifeguards into the dangerous surf.

Zipline, one of our portfolio companies, is working to deliver life-saving blood and vaccines to people across Rwanda. Their drone-based delivery system is so pervasive that they now deliver roughly 25% of all the blood used in Rwandan blood transfusions. Most deliveries happen in less than half an hour. The company is now expanding their delivery system to Ghana and test flights have begun in the United States.

https://www.youtube.com/watch?v=6wBeXIgD4sY&t=72s

Finally, our portfolio company Shield.ai is building a drone to augment human soldiers in the most dangerous operation the US military performs today. It’s called “clearing” and it involves sending armed human soldiers into buildings they’ve never seen before.

https://www.youtube.com/watch?v=gNK-hp3Yysw

The company’s Nova drone can be an invaluable aid to soldiers when clearing unknown enemy buildings. It’s able to explore and transmit detailed maps of the building’s passageways while using machine learning algorithms to tag the occupants within as either friend or foe.

Ocean rescue, delivering blood to remote areas quickly, clearing potentially hostile buildings: each of these jobs is obviously dangerous. But not all dangerous jobs are obviously dangerous. Surprisingly, one of the most dangerous jobs in the United States, according to the Bureau of Labor Statistics, is long-haul trucking. There are more fatalities associated with this occupation than any other, and this already distressing number doesn’t take into account the negative health impacts associated with long-haul trucking.

Obesity rates among long-haul truckers are more than double those found in the general population, and the risks don’t stop there. Being obese increases one’s risk for diabetes, cancer, and stroke, and due to the stressful nature of the job, truckers are much more likely to use alcohol and smoke, further shortening their lifespans. We have got to get these truckers out of their cabs as soon as we can build self-driving systems that are safer than human drivers.

Driving passenger cars is also extremely dangerous. The World Health Organization estimates that 1.2 million people are killed every year in road traffic accidents. It’s the leading cause of death of young people aged 15 to 29, and the organization estimates that recovering from road accidents consumes about 3% of the world’s GDP. We’ve got to get human drivers out of the cars as soon as self-driving systems are safe enough to take over the steering wheel, and we have a set of portfolio companies actively building products and services in this ecosystem.

DeepMap is building beautifully detailed 3D maps that the algorithms can use to safely navigate our roads. Applied Intuition creates simulated systems to help engineers test and retest their algorithms to ensure the algorithm that they’ve created will behave exactly as expected when exposed to a variety of conditions. Voyage operates a robo-taxi service within retirement communities so that even as we lose our ability to drive, we can maintain our independence. Cyngn is working on a whole set of self-driving software that can work in a wide variety of vehicle types.

5: Machine learning will help us understand each other better

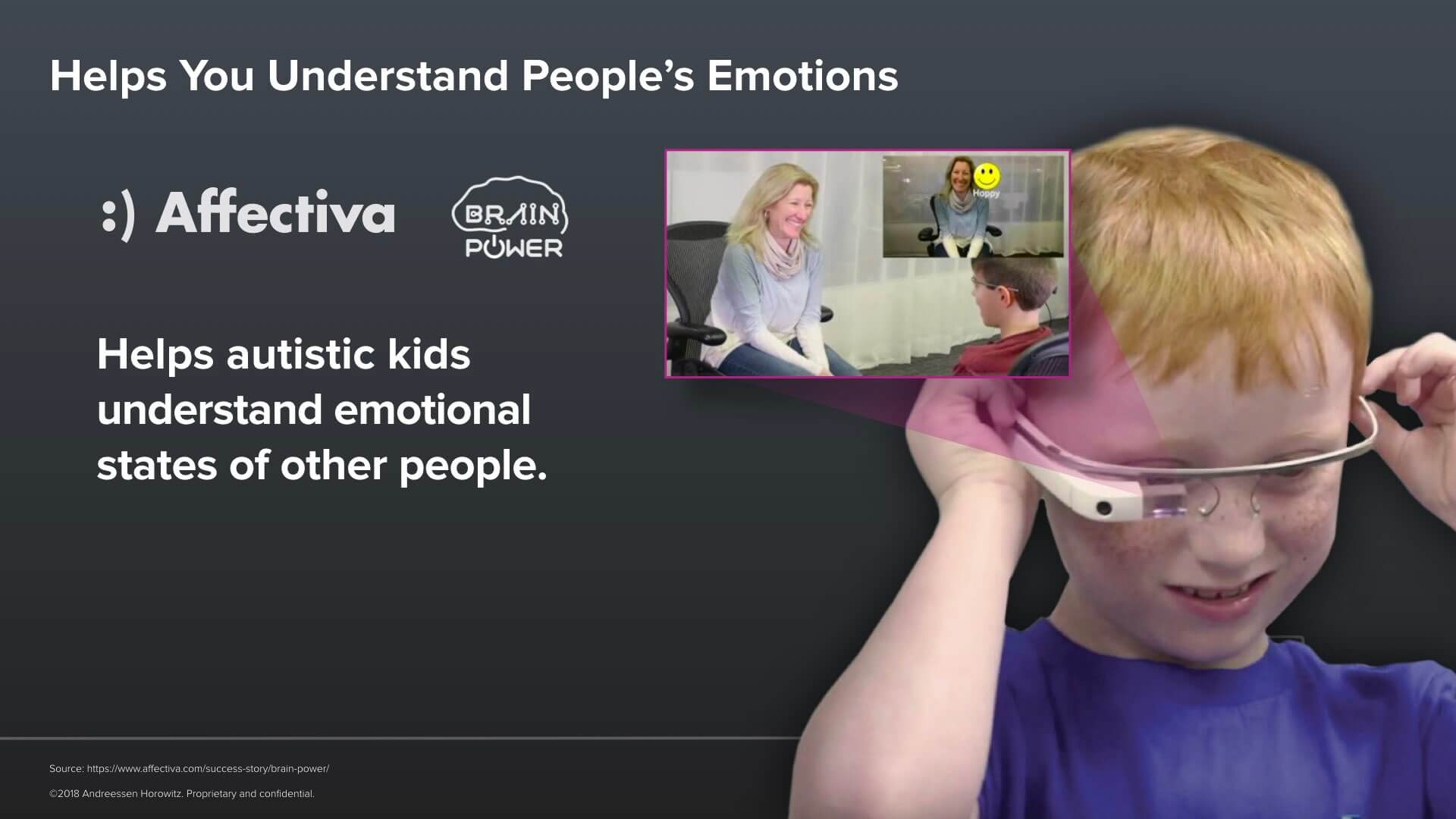

You might think that humans are best equipped to understand each other. After all, there’s another human on the other side of a conversation. But it turns out that well-designed software can actually help us understand each other better. Consider people diagnosed with autism. Those with autism have difficulty forming and maintaining relationships because they are not able to instinctively recognize and respond to non-verbal forms of communication. Two Cambridge-based companies, Affectiva and Brain Power, have built applications designed to help those with autism recognize, with the use of simplified emojis, the emotional state of the people around them so that they can modulate their behavior appropriately.

Their system analyzes the video from Google Glass and superimposes the appropriate emoji over a person’s face, helping the wearer more accurately understand the emotional state of the person they are interacting with.

One of the very first goals of artificial intelligence research was machine translation: translating one human language into another (see a brief history in the a16z Primer on Artificial Intelligence).

The most difficult challenge in this area is simultaneous real-time translation: as a speaker is talking in one language, the system automatically outputs a translation in another language quickly enough that the two speakers can carry on a natural dialog. I think of this as United Nations-style translation, and it turns out it’s hard even for highly trained, experienced human translators. I learned that this type of translation is so challenging that professional UN translators can only work for 20 minutes at a time before they are relieved by a teammate.

Given how big the technical challenge is, it’s impressive that the AI community is making solid progress towards this goal, as demonstrated by STACL (Simultaneous Translation with Anticipation and Controllable Latency), a system that the Chinese company Baidu unveiled in October 2018.

In order to keep up with the speaker, the machine learning system actually generates multiple predictions for how each sentence will end as the speaker starts speaking. It’s kind of like Google’s Autocomplete in your browser’s search bar. The system needs to create and translate different possible endings for a sentence because it will fall behind the speaker if it doesn’t. Imagine how much better we could understand each other with real-time translation embedded in every Web call, in every conference room we walk into, and in every browser window we open.
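To give a feel for the "controllable latency" part, here is a minimal sketch of a fixed-lag schedule, sometimes described as a "wait-k" policy: the translator starts after hearing k source words and then emits one target word for each new word it hears. This is an illustration under stated assumptions, not Baidu’s implementation. The function names and the tiny dictionary "translator" are hypothetical stand-ins, and the sketch does not model the anticipation of sentence endings, which in a real system would come from a trained model.

```python
def wait_k_translate(source_words, k, translate_next_word):
    """Translate with a fixed lag: start after k source words, then keep pace."""
    target = []
    for heard in range(1, len(source_words) + 1):
        # Stay silent until at least k source words have been heard.
        if heard >= k:
            prefix = source_words[:heard]
            target.append(translate_next_word(prefix, target))
    # The speaker has finished; emit the remaining words using the full sentence.
    while len(target) < len(source_words):
        target.append(translate_next_word(source_words, target))
    return target


# Hypothetical stand-in for a real model: a word-for-word dictionary lookup.
TOY_DICTIONARY = {"ich": "I", "sehe": "see", "dich": "you"}


def toy_translate_next_word(source_prefix, target_so_far):
    # Translate the source word at the position of the next target word.
    return TOY_DICTIONARY[source_prefix[len(target_so_far)]]


result = wait_k_translate(["ich", "sehe", "dich"], k=2,
                          translate_next_word=toy_translate_next_word)
print(result)
```

A smaller k means less delay but forces the system to commit to words before it has heard much of the sentence, which is where predicting likely endings becomes necessary.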

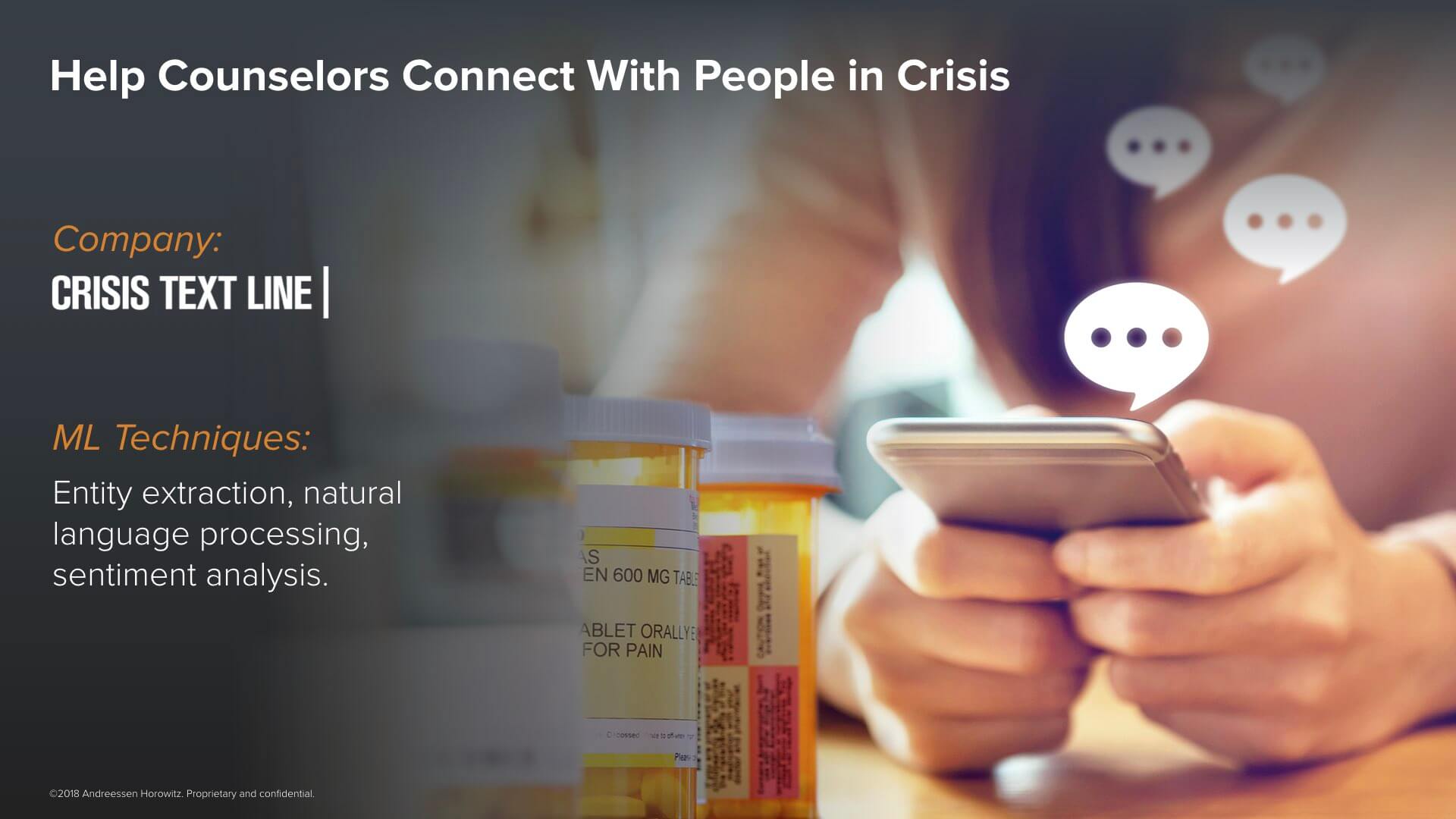

Here is one last example in this category of understanding each other better, and this might well be my favorite example in the entire presentation. There’s a nonprofit called the Crisis Text Line which helps to counsel people in crisis via text. Where an older generation might have called a hotline when faced with a crisis, the current generation prefers texting.

When Crisis Text Line started, they asked trained counselors to generate a list of 50 trigger words that could be used to predict high-risk texters. The words they generated were generally what you would expect: words such as “die”, “cut”, “suicide”, and “kill”. After the service had been running for some time, the organization applied machine learning techniques to see if there were other words that could be added to or removed from the list, and the results were very surprising. Did you know the word “ibuprofen” is 14 times more likely to predict suicide than the word “suicide”? That the crying face emoticon is 11 times more predictive?
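To make a comparison like "14 times more likely" concrete, here is a minimal sketch of how such a ratio could be computed, assuming each conversation has already been labeled as high-risk or not. The function names and the tiny dataset are invented for illustration; they are not Crisis Text Line’s data or method.

```python
from collections import Counter


def high_risk_rate_by_word(conversations):
    """Map each word to the fraction of conversations containing it that were high-risk."""
    seen = Counter()
    high_risk = Counter()
    for text, is_high_risk in conversations:
        # Count each word at most once per conversation.
        for word in set(text.lower().split()):
            seen[word] += 1
            if is_high_risk:
                high_risk[word] += 1
    return {word: high_risk[word] / seen[word] for word in seen}


def relative_predictiveness(rates, word, baseline):
    """How many times higher the high-risk rate is for `word` than for `baseline`."""
    return rates[word] / rates[baseline]


# Toy, made-up data: (message text, was the texter judged high-risk?)
toy_conversations = [
    ("i took ibuprofen", True),
    ("the ibuprofen bottle is empty", True),
    ("suicide is on my mind", True),
    ("we read about suicide in class", False),
    ("a song about suicide", False),
    ("the movie mentioned suicide", False),
]

rates = high_risk_rate_by_word(toy_conversations)
ratio = relative_predictiveness(rates, "ibuprofen", "suicide")
print(f"ratio = {ratio:.1f}")
```

With real data, a ratio like this would be only a starting point: rare words produce noisy rates, so a production system would need far more examples and some form of smoothing or statistical testing.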

Stanford researchers went on to extract best practices of the most effective counselors. One of the techniques they found effective was creativity: successful counselors respond in a creative way rather than using too generic or “templated” responses. Machine learning is helping us understand each other even better in the extreme case of crisis counseling.

Getting From Here to There

While machine learning software has much promise in making us better humans, we won’t get there without being intentional, deliberate, and empathic.

“Garbage in, garbage out” remains true and is perhaps even more fraught with potential danger as we learn to trust AI-based systems more and more.

Having said that, I have three suggestions for how we can get from here to there in a way that maximizes human thriving.

First, we need to be inclusive. To combat “garbage in, garbage out”, we must train our machine learning systems with high-quality, inclusive data. Organizations such as AI4ALL, OpenAI, and the Partnership on AI are publishing best practices (on both inclusion and safety) for researchers and product teams to use as they design systems. We must learn to use data quality tools and conceptual frameworks from organizations such as Accenture, Google, and Microsoft.

As companies and organizations scale and make progress towards a future of inclusive, safe machine learning systems, they often discover they need to hire an executive who thinks about these issues full time. It’s one of the Valley’s hottest new job roles: director of AI Ethics and Policy.

Second, let’s not turn this into a replay of the nuclear arms race with a zero-sum race to the biggest arsenal. Compared to the proprietary database era beginning in the 1980s, we are already off to a better start in the machine learning community with the proliferation of open source code bases (such as Google’s TensorFlow, Databricks’s MLflow, and the ubiquitous Keras and scikit-learn) and shared data repositories (from organizations such as Kaggle, UCI, Data.gov; see curated lists here and here and on Wikipedia).

But we have much further to go. While parochial policy wonks from one country or another might want to turn this into a zero-sum game where one country succeeds at another’s expense, we can and should work together to realize the promise of ML-powered software for all people, regardless of nationality.

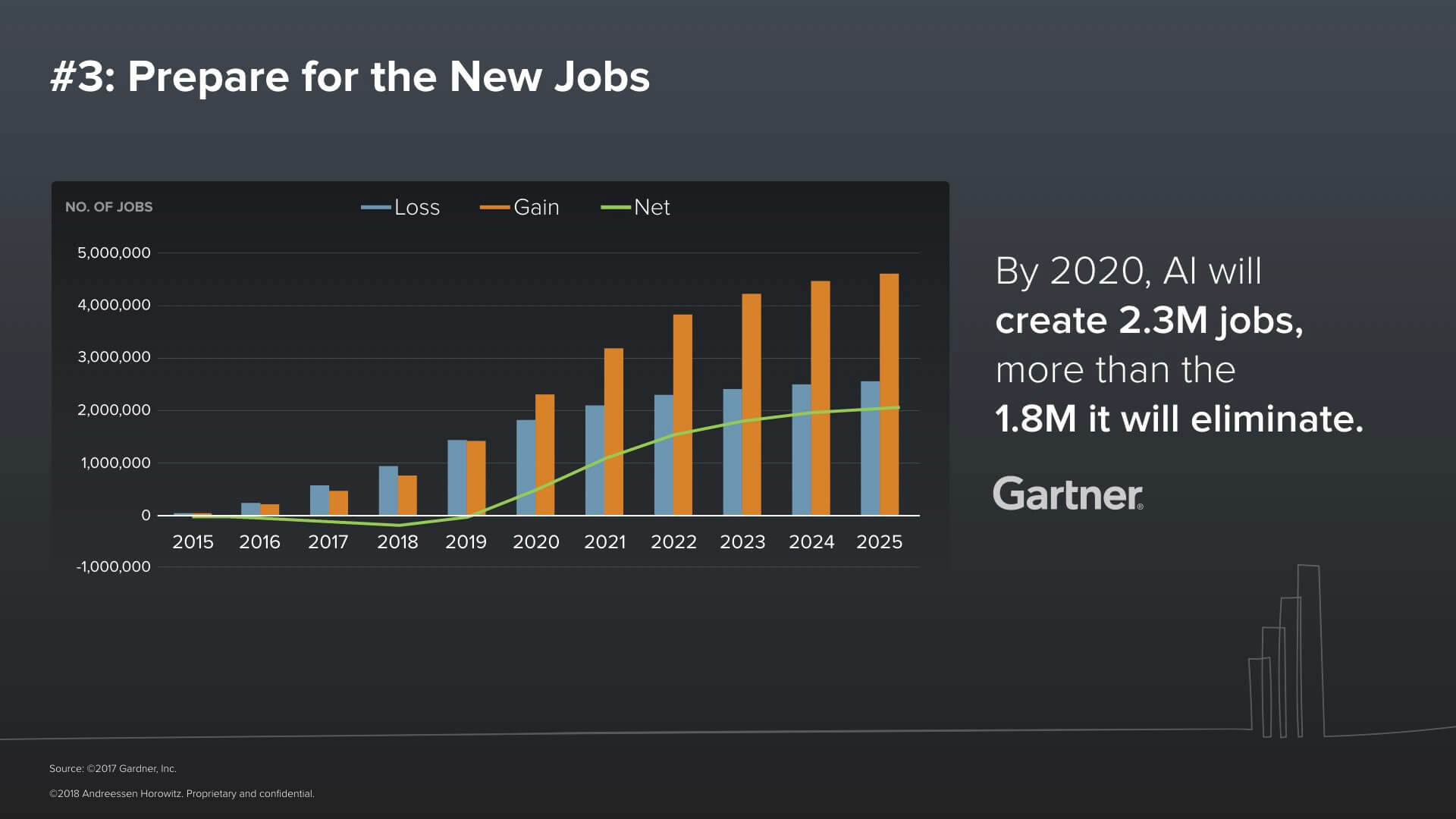

Finally, a word on jobs. While it’s beyond the scope of this presentation to predict the future of employment, I will observe that more recent studies by organizations such as Gartner are predicting job gains, in contrast to earlier studies that predicted mass unemployment in the coming decades.

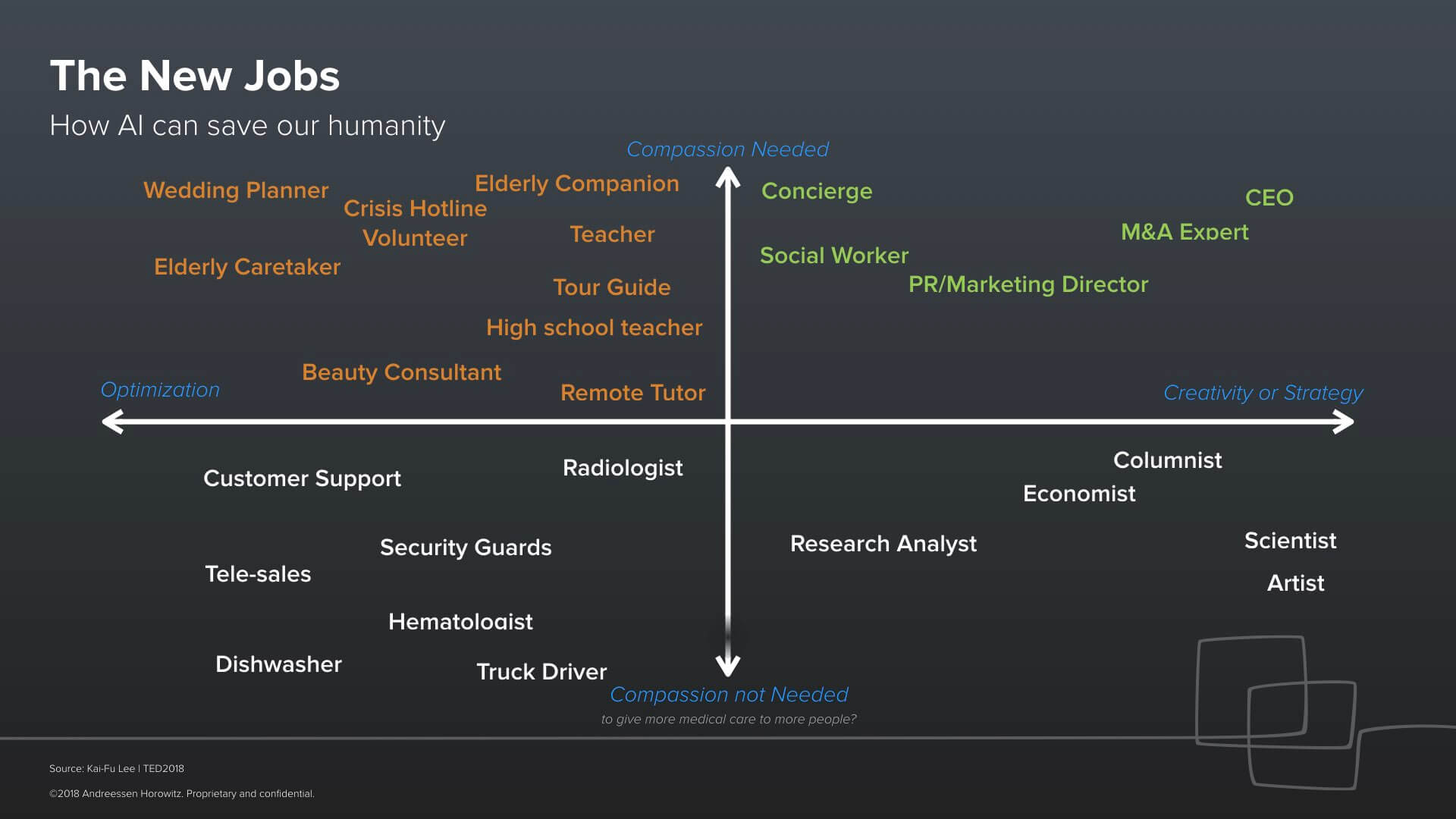

I like a framework my friend Kai-Fu Lee proposes in his book AI Superpowers: China, Silicon Valley, and the New World Order. Imagine two axes: on the x-axis, we have a spectrum running from jobs that are more routine to jobs that require creativity and strategy. On the y-axis, we have a spectrum running from jobs that require little compassion to jobs that require a lot of compassion to do well.

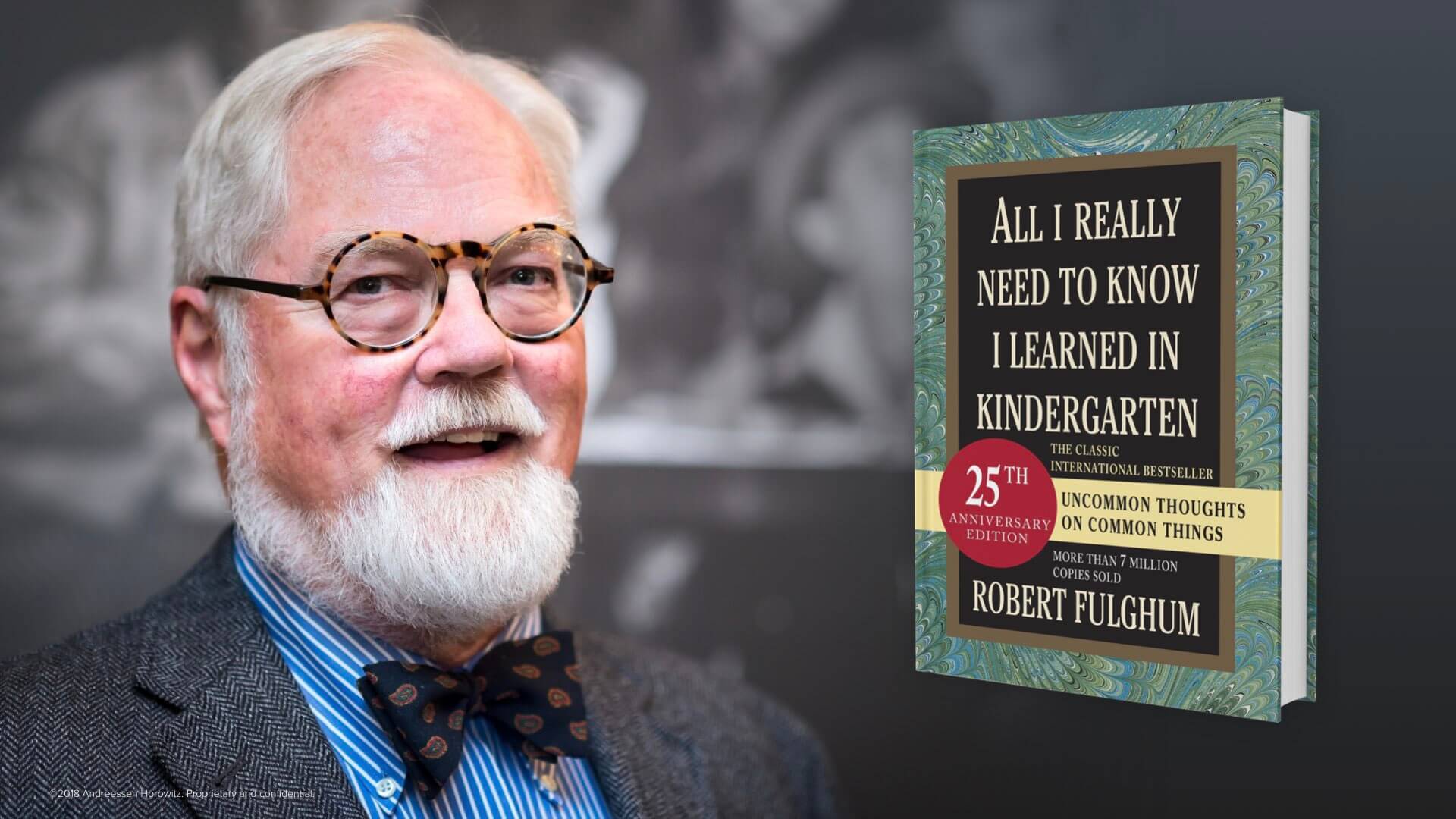

Kai-Fu argues that the jobs that machine learning will displace first are the lower-left quadrant jobs: routine jobs that don’t require much compassion. But even as those jobs disappear, we still have three quadrants’ worth of jobs: those requiring compassion in the top half of the graph, and the jobs in the lower-right quadrant that require a lot of creativity. These are the jobs that current machine learning techniques are not on a trajectory to displace. Thinking about how to prepare future generations for those jobs, I am reminded of a book that was popular when I was growing up. The book was written by a minister named Robert Fulghum and is called All I Really Need to Know I Learned in Kindergarten.

In the book, he argues that the values we should nurture in our kids are human values: empathy, fair play, learning how to get along with other people, saying sorry, imagination and so on.

While I know that we’ll need to layer practical and technical know-how on top of that foundation, I agree with him that a foundation rich in EQ, compassion, imagination, and creativity is the perfect springboard to prepare people (the doctors with the best bedside manner, the sales reps solving my actual problems, the crisis counselors who really understand when we’re in crisis) for a machine-learning-powered future in which humans and algorithms are better together.
