Understanding what’s in an image — one of the simplest cognitive tasks for most humans — is a stubbornly difficult problem for artificial intelligence systems to solve.

Over the past decade, AI models have gotten better and better at analyzing the kinds of images you might find on the internet. The state of the art has advanced from small models that had to be manually trained by machine learning engineers to large, pre-trained models that work out of the box. This progress corresponds to an ever-growing set of available training data, starting with ImageNet (~1 million images) and culminating, currently, in LAION (~6 billion images).

The problem is that all this data is drawn from the same domain (i.e. the public internet). One of the most iconic diagrams from the ImageNet paper (from 2012) showed off its granular classes of cat pictures.

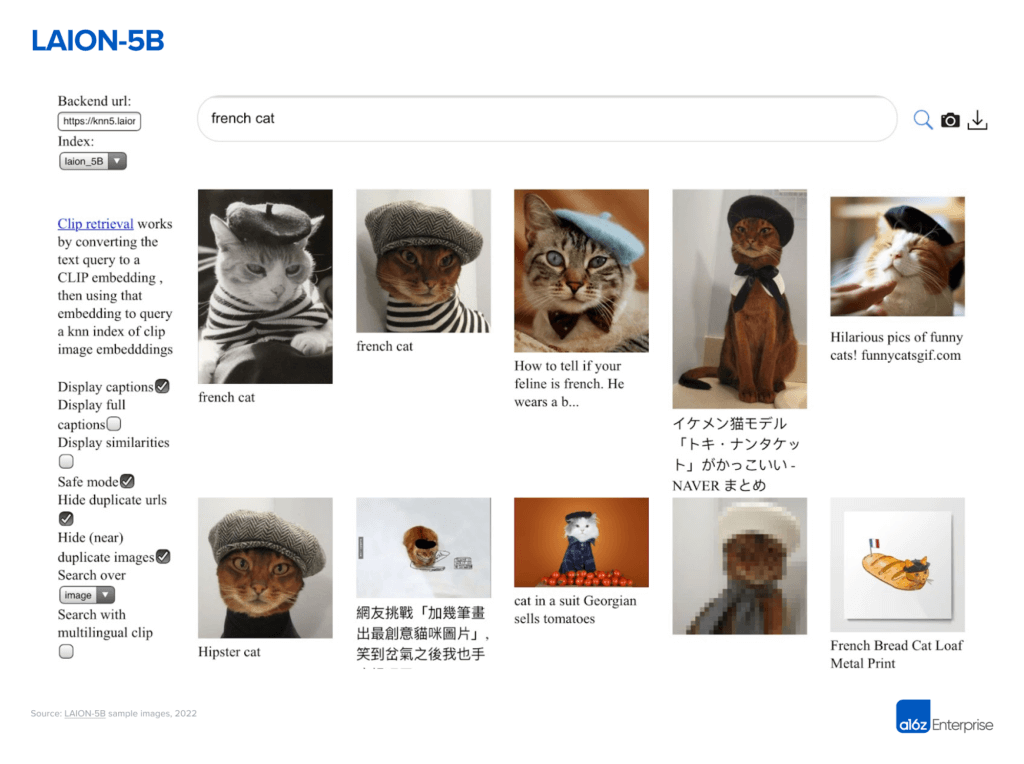

The lead image in the LAION blog post (from 2022) features . . . cat pictures.

This is OK for many consumer use cases, where production data is likely to match the training data reasonably well. But most B2B use cases don’t fit neatly into this mold. Visual content in a business context may be produced by unique cameras, in unique settings, or with unique metadata. When OpenAI first released CLIP, for example, it outperformed older models on pictures of cars or food, but trailed dramatically on satellite images and pictures of tumors. Newer models like BASIC plugged some of those gaps, but developed new, bizarre failure modes (for example, failing MNIST, one of the earliest and simplest image classification benchmarks).

As a result, most businesses trying to analyze images and videos are stuck doing supervised machine learning — manually collecting, labeling, and training large internal datasets — even for relatively simple business needs. There hasn’t been a simple way to apply domain expertise to large pools of visual content.

This is where Coactive AI comes in. Coactive is an application that helps data teams work efficiently with image and video data, without requiring specialized machine learning skills. It uses state-of-the-art pre-trained models to give a rough understanding of visual content, and a proprietary active learning system to develop more specialized visual concepts. These concepts are then exposed through standard SQL and API interfaces to power trend analysis, content moderation, search, and other core business functions.

Critically, Coactive allows users to map these analytic workflows to proprietary data and metadata ontologies, such as an existing product catalog or list of prohibited content. It can handle out-of-domain data that doesn’t match typical internet image data. And it does this without requiring users to manually label more than a few example data points. The result is an intuitive visual analytics system that can capture practical business expertise but doesn’t require an ML-engineering or data-labeling team to use.

We’re excited to announce today that we invested in both the seed and series A rounds of Coactive. We’re thrilled to support cofounders Cody Coleman and Will Gaviria Rojas in building the company.

Cody saw this problem firsthand at Facebook, where he worked on active learning systems for content moderation, and developed the core technology for Coactive while earning his PhD at Stanford under Matei Zaharia (cofounder of Databricks and co-creator of Apache Spark). And we’d be remiss to not mention the truly exceptional determination Cody has shown in his personal life before founding Coactive. Will also saw the problems Coactive is solving in his work as a data scientist at eBay, and is an accomplished deep learning researcher trained at MIT and Northwestern.

As AI continues to show breathtaking new results nearly every week, we are proud to support founders to bring these advances to important — and deceptively complex, if not always sexy — business problems.

***