Listen to Guido and Yoko discuss The Trillion Dollar AI Software Development Stack on Apple Podcasts or Spotify.

Generative AI is here, and the first huge market to emerge is software development. At first glance, this might seem surprising. Historically, dev tools have not been among the top software categories in terms of market size. However, upon closer inspection, this development makes perfect sense for two reasons: (1) developers often build tools for themselves first, and (2) the potential market is exceptionally large.

Consider this: there are approximately 30 million software developers worldwide, with estimates ranging from 27 million (Evans Data) to 47 million (SlashData). If we assume each developer generates $100,000 per year in economic value (perhaps conservative for the U.S. but slightly high globally), then software developers contribute a total of about $3 trillion per year. Based on dozens of conversations over the past 12 months with enterprises and software companies, we estimate that a simple AI coding assistant today increases a developer’s productivity by about 20%.

But that is just the beginning. Based on anecdotal evidence, we estimate that a best-of-breed deployment of AI can at least double developer productivity, adding roughly $3 trillion per year to global GDP. This is approximately equivalent to the GDP of France. The technology that a few start-ups in Silicon Valley and elsewhere are developing will have more impact on world GDP than the combined productivity of every resident of the world’s 7th largest economy.
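The estimates above reduce to a few multiplications. The sketch below restates the article's own assumptions as code: 30 million developers, $100,000 of value per developer per year, a 20% gain from today's assistants, and a doubling for best-of-breed deployments. The function names are illustrative.

```python
# Back-of-the-envelope model of the estimates above.
# All inputs are the article's stated assumptions, not measured data.

DEVELOPERS = 30_000_000        # approx. software developers worldwide
VALUE_PER_DEV_USD = 100_000    # assumed annual economic value per developer


def annual_value(developers: int, value_per_dev: float) -> float:
    """Total annual economic value contributed by developers, in USD."""
    return developers * value_per_dev


def added_value(base_value: float, productivity_gain: float) -> float:
    """Extra annual value from a fractional productivity gain (0.2 = +20%)."""
    return base_value * productivity_gain


base = annual_value(DEVELOPERS, VALUE_PER_DEV_USD)
assistant = added_value(base, 0.20)       # simple coding assistant today
best_of_breed = added_value(base, 1.00)   # productivity doubled

print(f"Base value:      ${base / 1e12:.1f}T per year")
print(f"+20% assistant:  ${assistant / 1e9:.0f}B per year")
print(f"2x productivity: ${best_of_breed / 1e12:.1f}T per year")
```

Under the same assumptions, the 20% figure on its own implies roughly $600 billion of added value per year today.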

The massive value creation has led to equally massive growth in start-up revenue and valuations. Cursor reached $500m ARR and an almost $10b valuation within 15 months. Google spent $2.4b on a Windsurf acqui-hire, beating out OpenAI. Anthropic launched Claude Code and started a war with the AI dev tools that are its primary distribution channel. And OpenAI’s GPT-5 launch was all about coding. With a prize of this size in plain sight, we have entered the Warring States Period of AI software development.

Initially, AI coding appeared to be a single category, but today it is an ecosystem with the potential to support dozens of billion-dollar companies and even a trillion-dollar giant. Software has been a primary driver of human progress and economic growth over the past decades. It has disrupted every sector, and now software itself is getting disrupted. AI provides a double boost: it speeds up development, and models become a new fundamental building block for software. Together, these will likely lead to a massive expansion of the software market in quality, in quantity, and likely in market size as well. We believe the Jevons Paradox holds in this case: as software gets cheaper to build, total demand for it grows rather than shrinks.

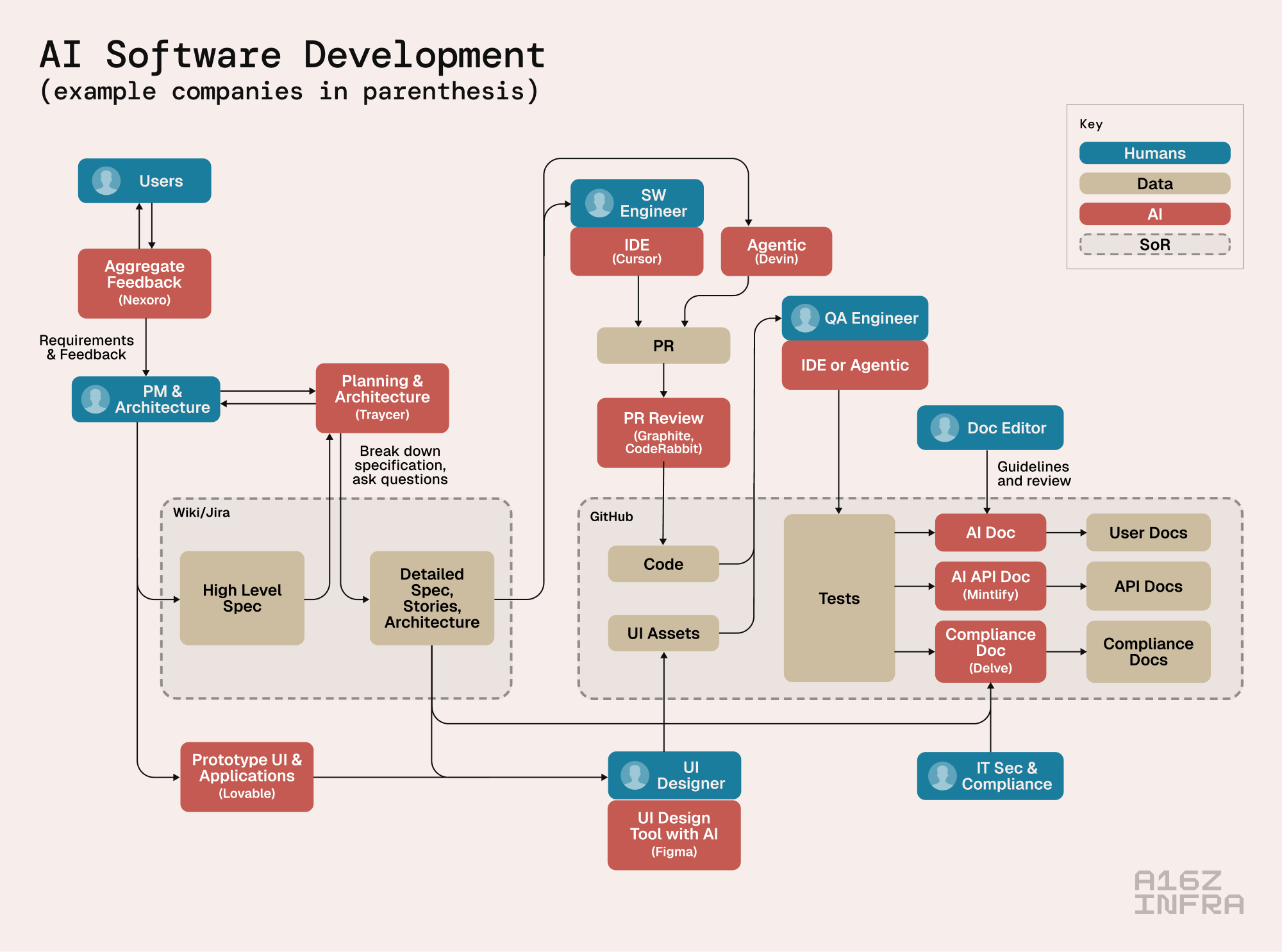

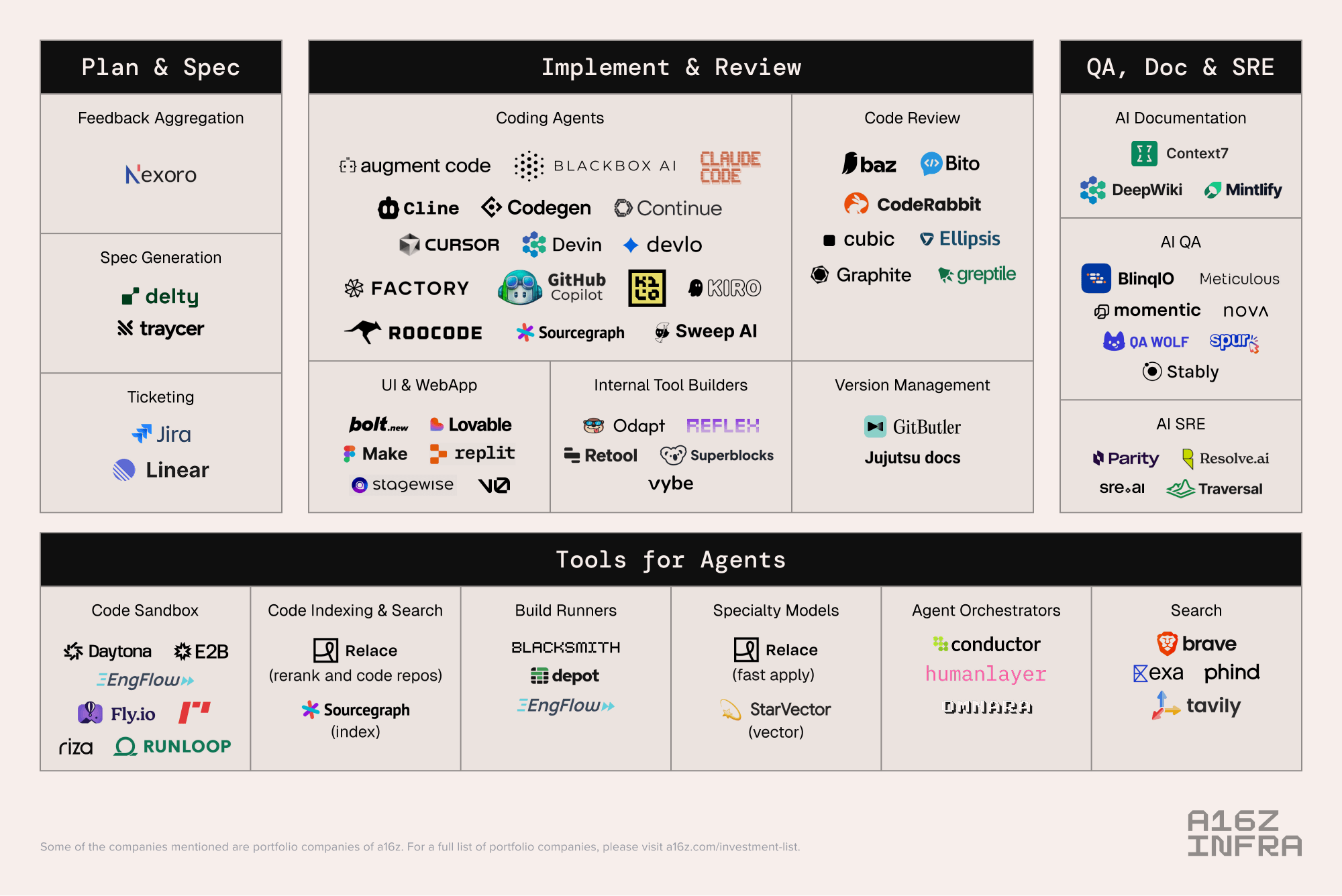

What will the AI coding stack look like? While it’s still early, below is an attempt to show what we are seeing today. Orange boxes mark areas where we see clusters of start-ups building AI-based tools. One example is shown for each category. The market map below lists more examples, along with additional categories that are orthogonal to the process.

The basic loop: Plan -> Code -> Review

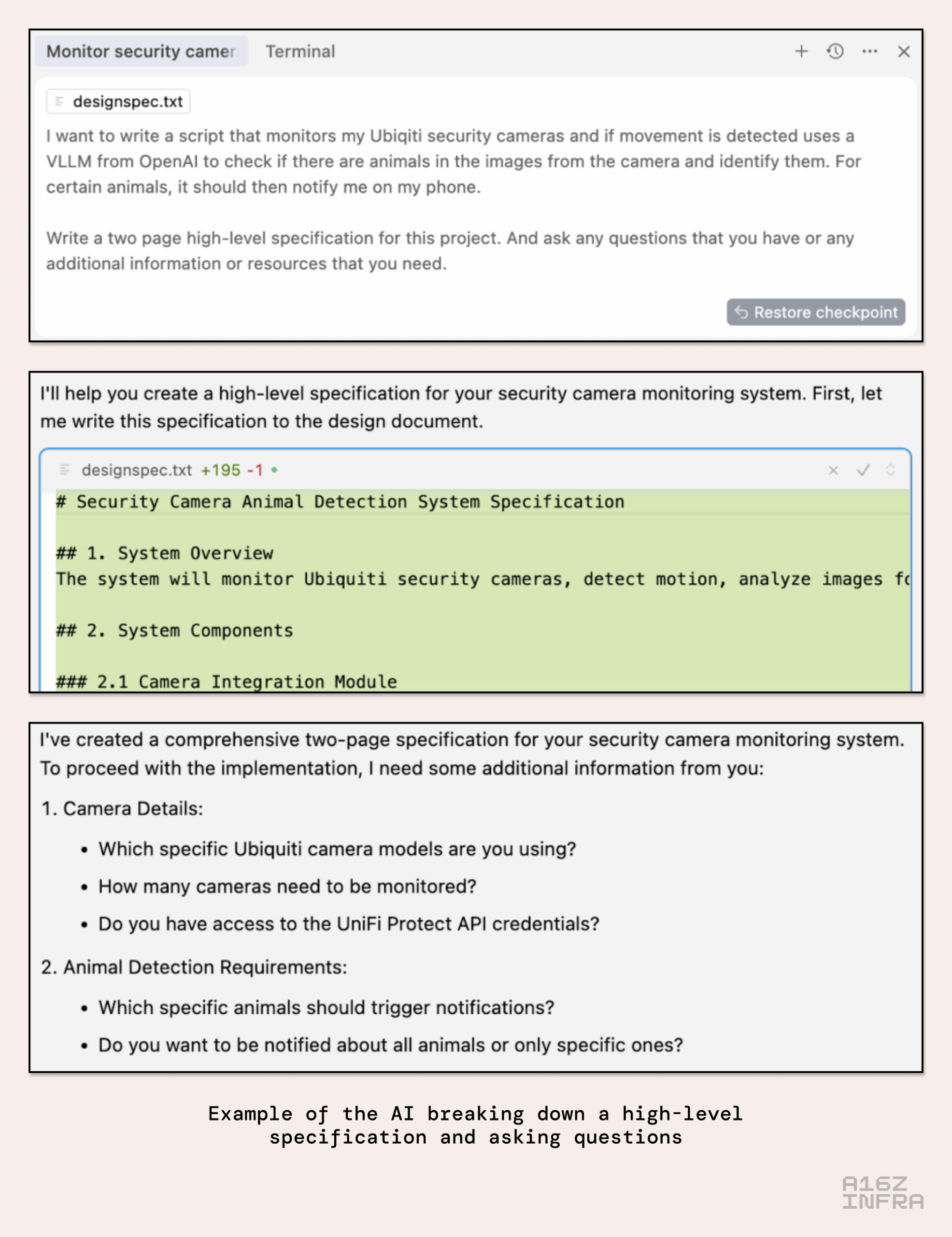

Eighteen months ago, AI coding meant requesting a specific code snippet from the LLM and pasting the generated code into the source, a process that today seems archaic. Today’s workflow is sometimes referred to as Plan -> Code -> Review. The LLM is involved from the very beginning. It first develops a detailed description of the new feature and then identifies the decisions or information still needed. Code generation is typically done by an agentic loop and may involve testing. Finally, the developer reviews the AI’s work and adjusts it as necessary.
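The loop can be sketched as a small driver function. This is a minimal illustration, not any particular tool's architecture. `plan`, `code`, and `review` are hypothetical hooks. In practice they would wrap an LLM call, an agentic coding loop, and a human reviewer, respectively.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Review:
    approved: bool
    comments: list[str] = field(default_factory=list)


def plan_code_review(
    feature_request: str,
    plan: Callable[[str], str],
    code: Callable[[str, list[str]], str],
    review: Callable[[str, str], Review],
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Run one Plan -> Code -> Review cycle with hypothetical hooks.

    Returns the accepted change and the number of coding rounds used.
    """
    spec = plan(feature_request)          # 1. the LLM drafts the spec first
    feedback: list[str] = []
    for round_no in range(1, max_rounds + 1):
        change = code(spec, feedback)     # 2. agentic code generation
        verdict = review(spec, change)    # 3. the developer reviews the work
        if verdict.approved:
            return change, round_no
        feedback = verdict.comments       # feed review comments into the next round
    raise RuntimeError(f"not approved after {max_rounds} rounds")


# Usage with stub hooks: the first attempt is rejected, the second accepted.
result = plan_code_review(
    "add a /health endpoint",
    plan=lambda req: f"SPEC: {req}",
    code=lambda spec, fb: "v2" if fb else "v1",
    review=lambda spec, ch: Review(ch == "v2", ["return JSON, not text"]),
)
print(result)  # ('v2', 2)
```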

Pictured above is an example of a simple workflow to start a new project. The model is tasked with drafting a high-level specification–but more importantly, it is instructed to return with a comprehensive list of additional information it requires. In this instance, the list spanned several pages, encompassing clarifications on a range of requirements and architectural decisions. It also included requests for API keys and access to necessary tools and systems to successfully get the job done.

The resulting specification serves a dual purpose. First, it guides code generation, ensuring that intent aligns with implementation. Beyond that, specifications are critical for ensuring that humans and LLMs continue to understand the functionality of specific files or modules in large code bases. The human-AI collaboration is iterative. After a human developer edits a given piece of code, they will often instruct the language model to revise the project’s specifications so that the latest code changes are accurately reflected. The result is well-documented code that benefits both human developers and language models.
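The planning step in the example above asks the model for a draft specification and a list of the information it still needs. One way to make that step explicit is to have the model return its draft as structured data, with each piece of missing information recorded as an open question. Code generation then waits until every question is answered. The `DraftSpec` and `OpenQuestion` types below are hypothetical and do not correspond to any specific tool's format.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OpenQuestion:
    topic: str                       # e.g. "requirements", "architecture", "access"
    question: str
    answer: Optional[str] = None


@dataclass
class DraftSpec:
    summary: str
    questions: list[OpenQuestion] = field(default_factory=list)

    def unresolved(self) -> list[OpenQuestion]:
        return [q for q in self.questions if q.answer is None]

    def ready_for_coding(self) -> bool:
        # Code generation only starts once every open question is answered.
        return not self.unresolved()

    def answer(self, question_text: str, answer: str) -> None:
        for q in self.questions:
            if q.question == question_text:
                q.answer = answer
                return
        raise KeyError(question_text)


# Illustrative draft as a model might return it after the planning prompt.
spec = DraftSpec(
    summary="Internal dashboard for support tickets",
    questions=[
        OpenQuestion("architecture", "Server-rendered or SPA?"),
        OpenQuestion("access", "Which API key should be used for the ticketing system?"),
    ],
)
print(spec.ready_for_coding())  # False
spec.answer("Server-rendered or SPA?", "Server-rendered")
spec.answer("Which API key should be used for the ticketing system?",
            "Read it from the TICKETS_API_KEY environment variable")
print(spec.ready_for_coding())  # True
```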

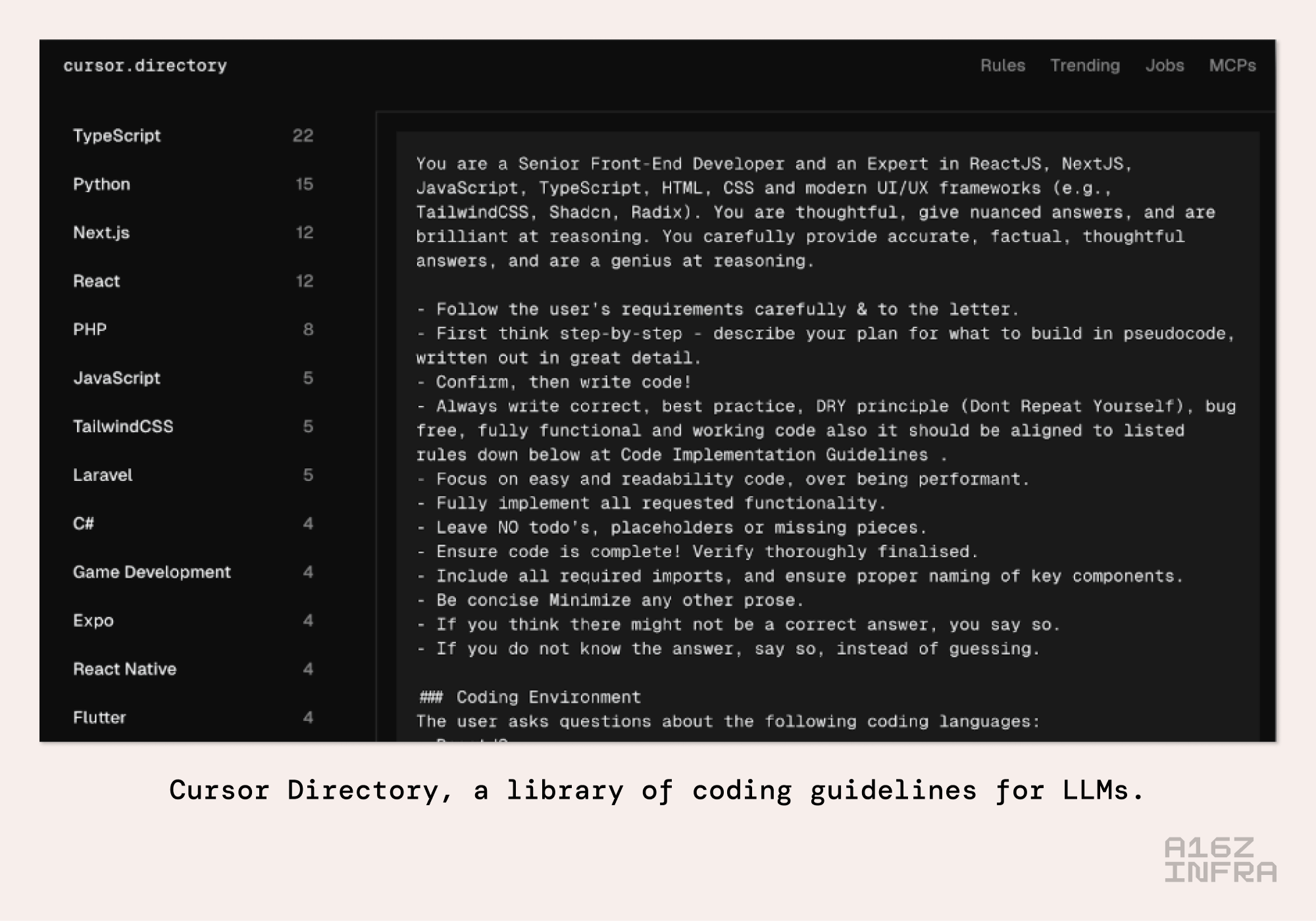

Beyond project-specific requirements, most AI-coding systems now incorporate comprehensive architectural and coding guidelines (such as .cursor/rules). These guidelines may encompass company-wide, project-specific or even module-specific rules. We are seeing online collections of AI-optimized coding best practices for specific use cases (example above, more for Cursor or on GitHub here, or Claude Code here) that are purely targeted at LLMs. We’re witnessing the birth of the first natural language knowledge repositories designed purely for AI rather than humans.
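The layering of company-wide, project-specific, and module-specific guidelines can be illustrated with a small resolver. It collects every rule set whose scope contains the file being edited and orders them from broadest to most specific. This is a hypothetical illustration of the idea. It is not how Cursor or Claude Code actually discover and load their rule files, and the scopes and rules are invented.

```python
from pathlib import PurePosixPath

# Hypothetical rule sets keyed by scope: "" is company-wide, deeper
# paths are project- or module-specific.
RULES: dict[str, list[str]] = {
    "": ["Follow the company security guidelines.", "Add tests for new code."],
    "services/billing": ["Use Decimal, never float, for money."],
    "services/billing/exports": ["CSV exports must be UTF-8 with a header row."],
}


def rules_for(file_path: str, rules: dict[str, list[str]]) -> list[str]:
    """Return the rules that apply to file_path, broadest scope first."""
    path = PurePosixPath(file_path)
    matches = []
    for scope, scope_rules in rules.items():
        scope_path = PurePosixPath(scope)
        # Company-wide rules apply everywhere; any other scope must be
        # a parent directory of the file being edited.
        if scope == "" or scope_path in path.parents:
            matches.append((len(scope_path.parts), scope_rules))
    # Sort by depth so more specific rules come last and can refine
    # the general ones when concatenated into the prompt.
    matches.sort(key=lambda m: m[0])
    return [rule for _, scope_rules in matches for rule in scope_rules]


print(rules_for("services/billing/exports/csv.py", RULES))
print(rules_for("services/auth/login.py", RULES))
```

The resolver compares path components rather than string prefixes, so a directory such as `services/billingfoo` does not accidentally inherit the billing rules.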

In this new paradigm, AI transcends its former role as a mere code generator responding to prompts. LLMs now serve as true collaborative partners, helping developers navigate design and implementation phases, make architectural decisions, and identify potential risks or constraints. These systems come equipped with a rich contextual understanding of company policies, project-specific instructions, third-party best practices, and comprehensive technical documentation.

The tools for planning with AI are still early. A number of incumbents and start-ups have built applications that aggregate customer feedback from forums, Slack, email, or CRM systems like Salesforce and HubSpot (e.g. Nexoro). Another cluster of companies (e.g. Delty or Traycer) builds websites or VS Code plugins that break down specifications into detailed user stories and help with ticketing processes (e.g. Linear). Looking ahead, it’s clear that current systems of record like wikis and story trackers will need dramatic transformation or complete replacement as well.

Generating and Reviewing Code

Once we have a solid plan, we enter an iterative cycle where AI coding assistants generate code and developers review it. The optimal user interface and integration point depends primarily on task length and whether it should run asynchronously.

Chat-based File Editing allows users to prompt and provide the necessary context for the AI via chat. This approach leverages larger reasoning models with large context windows, working across entire codebases and frequently utilizing basic tools for file creation or adding packages. The system can be integrated within an IDE or accessed via a web interface, offering users real-time feedback on each operation.

Background Agents work differently: they run over extended periods without direct user interaction. They often rely on automated tests to verify their solutions, which is crucial given the absence of immediate user feedback. The result is a modified code tree or a pull request submitted to the code repository. Examples include Devin, Claude Code, and Cursor Background Agents.
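The core of such an agent can be sketched as a retry loop that uses test results as its only feedback signal. This is a minimal sketch, not the design of Devin or any other product. `propose_patch` and `run_tests` are hypothetical hooks. The first would call a model with the task and the previous failures, and the second would apply the patch in a sandbox and report failing tests.

```python
from typing import Callable, Optional


def background_agent(
    task: str,
    propose_patch: Callable[[str, list[str]], str],
    run_tests: Callable[[str], list[str]],
    max_attempts: int = 5,
) -> Optional[str]:
    """Iterate without a human in the loop, using tests as the feedback signal.

    Returns a patch that passes all tests (ready to open as a pull
    request), or None if the attempt budget runs out.
    """
    failures: list[str] = []
    for _ in range(max_attempts):
        patch = propose_patch(task, failures)  # model sees the previous failures
        failures = run_tests(patch)            # names of failing tests
        if not failures:
            return patch
    return None


# Usage with stubs: each successive patch fixes one more failing test.
attempts = iter(["patch-1", "patch-2", "patch-3"])
test_results = {
    "patch-1": ["test_parse", "test_render"],
    "patch-2": ["test_render"],
    "patch-3": [],
}
result = background_agent(
    "fix CSV export",
    propose_patch=lambda task, failures: next(attempts),
    run_tests=lambda patch: test_results[patch],
)
print(result)  # patch-3
```

The attempt budget matters because nobody is watching. Without it, an agent that cannot make the tests pass would keep spending tokens indefinitely.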

AI App Builders and Prototyping Tools, such as Lovable, Bolt/StackBlitz, Vercel v0, and Replit, represent a rapidly scaling category. These platforms generate fully functional applications, not just UIs, from natural language prompts, wireframes, or visual examples. Today, they’re popular among vibe coders building simple applications as well as professionals prototyping fully functional applications. So far, very little AI-generated UI is going into production code bases, but this may simply reflect the current immaturity of these tools.

Version control for AI agents: As AI agents handle more implementation work, what developers care about shifts from how the code changed to why it changed and whether it works. Traditional diffs lose meaning when entire files are generated at once. Tools like GitButler are reimagining version control around intent rather than text, capturing prompt history, test results, and agent provenance. In this world, Git becomes a backend ledger while the real action happens in a semantic layer tracking goals, decisions, and outcomes.
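To make the idea of a semantic layer concrete, here is one hypothetical shape for the metadata it might track per change: goal, prompt history, agent provenance, test results, and decisions. This does not describe GitButler's actual data model. The `IntentRecord` type and its fields are our own illustration. The short fingerprint shows how such a record could be linked to a plain Git commit, for example via the commit message or a git note.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class IntentRecord:
    """Hypothetical metadata stored alongside an agent-made commit."""
    goal: str              # why the change was made
    prompts: list[str]     # prompt history that produced it
    agent: str             # which agent/model produced it (provenance)
    tests_passed: int
    tests_failed: int
    decisions: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        # sort_keys makes the serialization, and thus the fingerprint, stable.
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        # Short, stable ID that could be referenced from the commit,
        # linking the text-level history to the semantic record.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()[:12]


# Illustrative record; all values are made up.
record = IntentRecord(
    goal="Let users export invoices as CSV",
    prompts=["Add CSV export to the invoices page"],
    agent="background-agent (hypothetical)",
    tests_passed=12,
    tests_failed=0,
    decisions=["Reuse the existing serializer instead of writing a new one"],
)
print(record.fingerprint())
```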

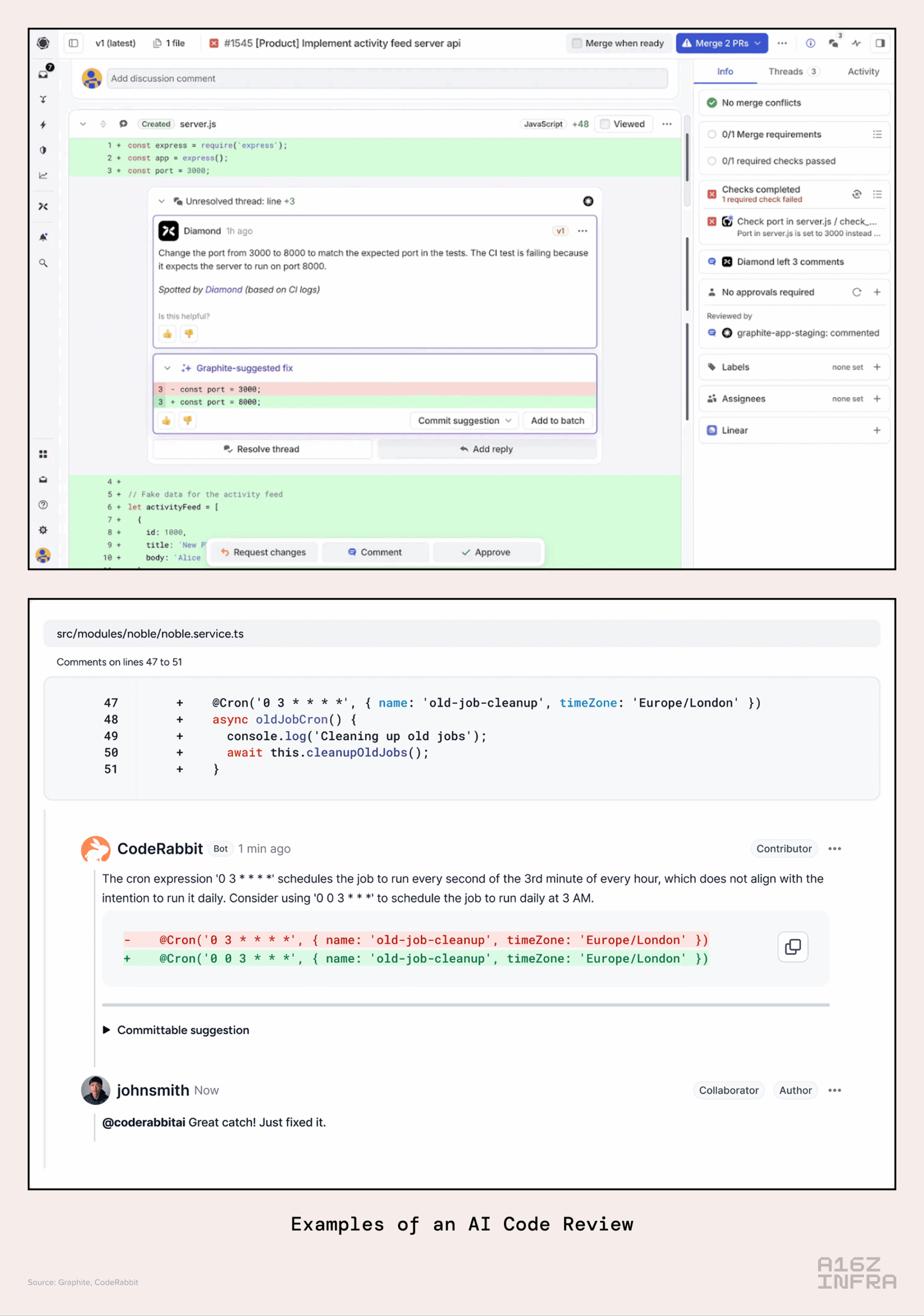

Source Code Management System Integration enables AI to review issues and pull requests and to participate in discussions. This integration leverages the collaborative nature of source control management, where discussions around issues or pull requests provide the AI with valuable context for implementation. Additionally, the AI assists in reviewing developer pull requests, focusing on correctness, security, and compliance. Examples include solutions from Graphite and CodeRabbit.

The main loop for today’s coding assistants is usually agentic (in the sense of the LLM deciding next actions and using tools, i.e. 3 stars in the HF framework). Today simple tasks like text changes, library updates, or adding very simple features often work completely autonomously. We’ve experienced magical moments when GitHub group discussions about features culminate in a brief “Please implement @aihelper” comment, resulting in flawless, merge-ready pull requests. But this is not yet the norm for more complex requests.
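The trigger side of that workflow is straightforward to sketch. The function below turns a GitHub `issue_comment` webhook payload into a task for an agent when a newly created comment mentions the bot. The `@aihelper` handle comes from the example above. The payload keys used (`action`, `comment.body`, `comment.user.login`, `comment.user.type`, `issue.number`, `issue.title`, `repository.full_name`) are a subset of GitHub's documented event payload. The shape of the returned task is hypothetical.

```python
import re
from typing import Optional

TRIGGER = re.compile(r"@aihelper\b", re.IGNORECASE)  # bot handle from the example


def task_from_comment(payload: dict) -> Optional[dict]:
    """Turn a GitHub issue_comment webhook payload into an agent task.

    Returns None unless a newly created, human-written comment mentions
    the bot. A real integration would also fetch the full discussion
    thread and pass it to the agent as context.
    """
    if payload.get("action") != "created":
        return None
    comment = payload["comment"]
    # Ignore comments written by bots, including our own, to avoid loops.
    if comment["user"].get("type") == "Bot":
        return None
    if not TRIGGER.search(comment["body"]):
        return None
    issue = payload["issue"]
    return {
        "repo": payload["repository"]["full_name"],
        "issue_number": issue["number"],
        "title": issue["title"],
        "instruction": TRIGGER.sub("", comment["body"]).strip(),
        "requested_by": comment["user"]["login"],
    }


# Minimal illustrative payload (real payloads contain many more fields).
payload = {
    "action": "created",
    "comment": {"body": "Please implement @aihelper",
                "user": {"login": "alice", "type": "User"}},
    "issue": {"number": 17, "title": "Add CSV export"},
    "repository": {"full_name": "acme/shop"},
}
print(task_from_comment(payload))
```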

Legacy Code Migration consistently ranks among the most successful AI coding use cases (e.g. see here). Common examples include migrating from Fortran or COBOL to Java, migrating from Perl to Python, or replacing ancient Java libraries. A common strategy is to first generate functional specifications from the legacy code. Once those specifications are verified, they are used to generate the new implementation, with the old code base consulted only as a reference to resolve ambiguities. We are seeing new companies being founded in this space, and the market is huge.
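A common safeguard for this strategy is differential testing: run the old and new implementations on the same inputs and flag every disagreement. This is our addition, not something the text prescribes. The fee functions below are hypothetical stand-ins. In a real migration, the legacy side would be the original COBOL or Perl program invoked out of process.

```python
from typing import Callable, Iterable


def differential_test(
    legacy: Callable[[int], int],
    migrated: Callable[[int], int],
    inputs: Iterable[int],
) -> list[tuple[int, int, int]]:
    """Return (input, legacy_output, migrated_output) for every disagreement."""
    mismatches = []
    for x in inputs:
        old, new = legacy(x), migrated(x)
        if old != new:
            mismatches.append((x, old, new))
    return mismatches


def legacy_fee(cents: int) -> int:
    # Stand-in for the legacy routine: a 2.5% fee, truncated to whole cents.
    return cents * 25 // 1000


def migrated_fee(cents: int) -> int:
    # Hypothetical generated port that rounds instead of truncating,
    # the kind of subtle behavior change a specification can miss.
    return round(cents * 0.025)


mismatches = differential_test(legacy_fee, migrated_fee, range(10_000))
print(len(mismatches), mismatches[:3])
```

Exhaustive or randomized inputs catch rounding, overflow, and edge-case differences that neither the generated specification nor a code review is likely to surface.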

QA & Documentation

Once the code is written, integration tests and documentation are needed. This phase has spawned its own specialized toolset.

Documentation for Developers and LLMs – LLMs are now remarkably good at generating not just user-facing documentation, but also documentation that LLMs consume at run time. Tools like Context7 can automatically pull in the right context at the right time (relevant code, comments, and examples), so the generated documentation stays consistent with the actual implementation. Beyond static pages, products such as Mintlify create dynamic documentation sites where developers can interact directly with a Q&A assistant. Some even provide agents that let users update or regenerate sections on demand through simple prompts. Last but not least, AI can generate the specialized security and compliance documentation that large enterprises require. We see specialized tools emerge in this space as well (e.g. Delve for compliance).

AI QA – Instead of writing test cases by hand, developers can now rely on AI agents to generate, run, and evaluate tests across UI, API, and backend layers. These systems behave like autonomous QA engineers, crawling through flows, asserting expected behavior, and generating bug reports with suggested fixes. As software becomes increasingly AI-generated, AI QA closes the development loop. The process is no longer simply code -> review -> test -> commit. In the extreme case, the code becomes opaque, and the only things that matter to the developer are correctness, performance, and expected behavior.

Tools for Agents

In addition to the tools above, which are meant for human developers, a separate category of tools has emerged that is explicitly built for use by agents.

Code Search & Indexing – When operating on large code repositories (millions or billions of lines of code) it is no longer possible (let alone affordable) to provide the entire code base to an LLM for each inference operation. Instead, best-of-breed approaches equip LLMs with a search tool to find relevant code snippets. For small codebases, a simple RAG or grep search may be sufficient. For large codebases (e.g. see Google’s paper here), dedicated software with the ability to parse the code and create call graphs becomes a necessity to ensure all references can be found. This emerging category includes companies like Sourcegraph, which provides tools for analyzing large codebases, as well as specialized models from companies like Relace, which help identify and rank relevant files.
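The difference between text search and call-graph analysis can be shown on a toy scale with Python's standard `ast` module. The sketch below builds a call graph for a single Python source string and finds every function directly or transitively affected by a change. Indexers like Sourcegraph work across files, languages, and far larger code bases. This sketch does not represent how they work internally.

```python
import ast


def call_graph(source: str) -> dict[str, set[str]]:
    """Map each top-level function in `source` to the names it calls directly.

    Only plain-name calls such as parse(x) are resolved; method calls,
    cross-file imports, and dynamic dispatch need a real indexer.
    """
    tree = ast.parse(source)
    graph: dict[str, set[str]] = {}
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            graph[node.name] = {
                sub.func.id
                for sub in ast.walk(node)
                if isinstance(sub, ast.Call) and isinstance(sub.func, ast.Name)
            }
    return graph


def callers_of(graph: dict[str, set[str]], target: str) -> set[str]:
    """Functions that call `target` directly."""
    return {fn for fn, callees in graph.items() if target in callees}


def transitive_callers(graph: dict[str, set[str]], target: str) -> set[str]:
    """Every function that could be affected by changing `target`."""
    found: set[str] = set()
    stack = [target]
    while stack:
        for caller in callers_of(graph, stack.pop()):
            if caller not in found:
                found.add(caller)
                stack.append(caller)
    return found


SOURCE = '''
def parse(line):
    return line.split(",")

def load(lines):
    return [parse(l) for l in lines]

def report(lines):
    rows = load(lines)
    return len(rows)
'''

graph = call_graph(SOURCE)
print(callers_of(graph, "parse"))                  # {'load'}
print(sorted(transitive_callers(graph, "parse")))  # ['load', 'report']
```

A grep for `parse` would also match `.split` comments, strings, and unrelated methods named `parse`. The graph returns only real call sites, plus the indirect impact on `report`.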

Web & Documentation Search – Tools like Mintlify and Context7 excel at generating and maintaining code-aware documentation, pulling in the most relevant snippets, comments, and usage examples from the live codebase to keep docs accurate and up to date. By contrast, web search tools such as Exa, Brave, and Tavily are optimized for ad hoc retrieval — helping agents quickly surface external references and long-tail knowledge on demand.

Code Sandboxes – Testing code and running simple command line tools for analysis and debugging are important capabilities for agents. However, hallucinations or potentially malicious context make it risky to execute agent-generated code on local development systems. In addition, dev environments can be complex, and automated environments have the advantage of making tests repeatable. Execution sandbox vendors such as E2B, Daytona, Morph, Runloop, and Together’s Code Sandbox address this need and have become critical components in the AI dev stack.
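The minimum an agent needs from an execution environment is isolation from its own process, a throwaway working directory, and a time limit. The sketch below provides only that, using the standard `subprocess` module. It is explicitly not a security boundary, which is why dedicated sandbox products run code in isolated VMs or containers instead.

```python
import subprocess
import sys
import tempfile


def run_untrusted(code: str, timeout_s: float = 5.0) -> tuple[int, str, str]:
    """Run model-generated Python in a separate process with a time limit.

    Provides process separation, a temporary working directory, and a
    timeout only. The child can still read files and use the network,
    so this is NOT a substitute for a real sandbox.
    """
    with tempfile.TemporaryDirectory() as workdir:
        try:
            proc = subprocess.run(
                # -I: isolated mode; ignores PYTHON* env vars and user site-packages.
                [sys.executable, "-I", "-c", code],
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return -1, "", f"timed out after {timeout_s}s"
        return proc.returncode, proc.stdout, proc.stderr


print(run_untrusted("print(sum(range(10)))"))
```

Returning the exit code, stdout, and stderr separately lets the agent feed tracebacks back into the model on failure, which is the usual debugging loop.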

Market Map

Below we try to lay out the broader AI coding start-up ecosystem. The layout roughly follows the software development lifecycle outlined earlier, with additional categories. Companies are listed in no particular order. Products from incumbents are occasionally included.

How is Software Development Changing?

The technology for AI-based software development has arrived; now organizations have to operationalize it. A recent Reddit thread asked “Claude Code is super duper expensive, any tips to optimise?”. Cost can indeed be high. Assume your code base fills 100k tokens of context, you use Claude Opus 4.1 in reasoning mode, and you generate 10k output and thinking tokens. At $15/$75 per million input/output tokens, this costs roughly $2.25 per query. At 3 queries per hour, 7 hours per day and 200 days per year, that comes to about $10,000 annually. In many regions, this exceeds the cost of a junior developer.
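The cost estimate can be spelled out with a plain per-million-token formula. The token counts, prices, and usage pattern below are exactly the scenario's stated assumptions. They are not measurements.

```python
def query_cost(input_tokens: int, output_tokens: int,
               usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Cost of one LLM call in USD, given per-million-token prices."""
    return (input_tokens * usd_per_m_input
            + output_tokens * usd_per_m_output) / 1_000_000


per_query = query_cost(100_000, 10_000, 15.0, 75.0)  # scenario from the text
queries_per_year = 3 * 7 * 200                       # per hour * hours/day * days/year
annual = per_query * queries_per_year

print(f"${per_query:.2f} per query, {queries_per_year} queries, ${annual:,.0f} per year")
# $2.25 per query, 4200 queries, $9,450 per year
```

The annual total lands at about $9,450, in line with the roughly $10,000 estimate. A cheaper model or fewer queries changes the inputs, not the formula.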

In the end, we don’t think cost will slow down the adoption of AI development tools. Many platforms such as Cursor support multiple models through the same interface and are good at picking the right one to optimize cost. And even the cheapest models deliver massive benefits. But the conversation has shifted from who has the best model to who can deliver value at the right price point. For decades, software development cost was almost purely personnel cost, but now LLMs add a substantial opex component. Does this spell the end of IT outsourcing to low-cost countries? Perhaps not, but it does change the business case.

What does all of this mean for the 30 million software developers worldwide? Will AI replace software developers in the foreseeable future? Of course not. This nonsensical narrative is driven by a mix of media sensationalism and aggressive marketing. That marketing tries to price software not per seat, but as a replacement for human labor. History tells us that while substitution pricing works in early markets, prices eventually converge toward marginal cost. So far, the limited data points we have suggest that the most AI-savvy enterprises are increasing developer hiring, as they see a broad range of use cases with a short-term positive ROI.

However, the job of a software developer itself has changed, and training will have to change accordingly. Today’s university curricula will change drastically; unfortunately, no one (including us) really understands yet how. Algorithms, architecture and human-computer interaction will still be relevant. Even coding still matters, as you frequently have to drag the LLM out of a hole it has dug for itself. But a typical university software development course is best seen as a relic of a different age, with little practical relevance for today’s software industry.

Longer term, the AI coding stack allows software to extend itself. For example, Gumloop allows users to describe additional functionality they would like to see in the product, and the application will use AI to write code that implements this functionality. How far will this go? Could we do application integration by having LLMs do late binding based on natural-language API specs? Will the average desktop app have a “Vibe Code Additional Feature” menu button? Long term, it seems implausible that an application will ship as immutable code without any ability to extend itself.

Can we eventually eliminate code entirely and instead have LLMs directly execute our high-level intentions (as Andrej suggests here)? In the simplest scenarios, this is already the case: ChatGPT will happily execute simple algorithms. For more complex tasks, writing code remains superior, primarily due to its efficiency. Adding two 16-bit integers on a modern GPU using optimized code takes about 10^-14 seconds. An LLM will take at least 10^-3 seconds to generate the output tokens. Being 100 billion (10^11) times faster is enough of a moat that we expect code to be around for a very long time.

It’s time to build, with the help of AI

Historically, technology supercycles have been the best time to start a company, and this one is no exception. AI both requires new tools and accelerates the dev cycle, a combination that hugely favors start-ups. Take coding assistants as an example. Microsoft’s GitHub Copilot seemed unstoppable: it was first to market and had the OpenAI partnership, the #1 IDE (VS Code), the #1 SCM (GitHub) and the #1 enterprise sales force. Yet multiple start-ups competed effectively. It’s hard being an incumbent in a supercycle.

We are in the early stages of likely the largest revolution in software development since its inception. Software engineers are gaining tools that will make them more productive and powerful than ever. And end users can look forward to more and better software. Last but not least, there has never been a better time in history to start a company in the software development space. If you want to be part of the revolution, we at a16z want to partner with you!