“Being deeply loved by someone gives you strength, while loving someone deeply gives you courage. But being understood by someone is everything.” – Lao Tzu

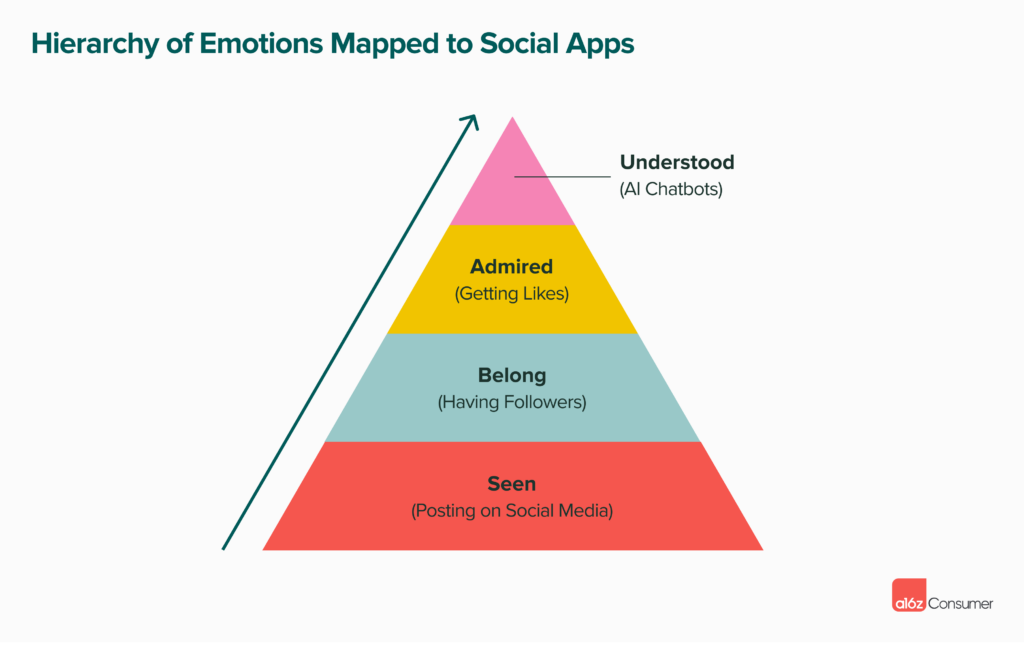

Psychologist Abraham Maslow famously posited that human motivation can be organized into a hierarchy of needs, starting with the physiological and ending with self-actualization. I believe a similar pyramid exists for human emotional needs: wanting to be seen, wanting to belong, wanting to be admired, and ultimately, wanting to be understood.

Perhaps unsurprisingly, each of these needs maps to an existing social media app behavior (see the figure above). When we post pictures and videos to Instagram, TikTok, and BeReal, we feel seen by our friends and family, and by the larger communities we engage with. When we get requests to connect and see our contacts’ updates, we feel a sense of belonging. And when we get likes, comments, or even monetary tips on our posts, we feel admired. No app, however, has yet been able to help us meet the most elusive emotional need of all: to feel truly and genuinely understood.

That’s why, despite living in a world of retweets, upvotes, and right swipes, we still feel lonely. It’s no coincidence that the number of new mental health apps is growing; as we observed in our latest Marketplace 100 report, mental health was far and away the fastest-growing category. It’s also why people are often drawn to anonymous apps, even though anonymity can expose them to cyberbullying.

Thanks to the rise of generative AI, we potentially have a new solution for this long-standing concern. Today’s AI chatbots are more human-like and empathetic than ever before; they are able to analyze text inputs and use natural language processing to identify emotional cues and respond accordingly. But because they aren’t actually human, they don’t carry the same baggage that people do. Chatbots won’t gossip about us behind our backs, ghost us, or undermine us. Instead, they are here to offer us judgment-free friendship, providing us with a safe space when we need to speak freely. In short, chatbot relationships can feel “safer” than human relationships, and in turn, we can be our unguarded, emotionally vulnerable, honest selves with them.

Today’s AI chatbots are able to evoke emotions and provide companionship by asking us probing questions that go deeper into our psyche than chatbots of the past could. (In fact, some are finding that these chatbots are better at providing companionship than providing facts.) By drawing information out of us, as a therapist would, and then perfectly recalling every detail we’ve ever told them, these companion chatbots can recognize patterns in our behavior and ultimately help us understand ourselves better.

Accessible on our mobile phones, companion chatbots also have the benefit of being available and attentive 24/7: they never tire, get busy, or need to sleep. If you’re struggling with insomnia and need someone to talk to at odd hours, or you have social anxiety and want to build confidence in your communication skills, your loyal chatbot is ready to help. And with all the world’s knowledge at their fingertips, one can imagine these chatbots eventually introducing us to new hobbies or languages, making them excellent companions for users who seek personal development.

While companion chatbots have clear benefits, there are still reasons why they can’t completely replace the warmth and depth of human connection. For example, companion chatbots can’t yet give us physical affection, nor can they single-handedly replace a professionally trained therapist. They are also unlikely to challenge us repeatedly, especially in ways that might hurt our feelings in the short term (e.g., by warning us when we start behaving in ways that are detrimental to ourselves). After all, our friends are supposed to give us real feedback, even when it’s hard to swallow.

Over time, however, this “tough love” dilemma could be solved by directing companion chatbots to learn our values and decision-making processes, so that they can ask us tough questions when we need it, and help us change our behavior for the better.

One approach could be to start with an AI assistant that naturally learns your schedule, basic needs, common purchases, decision-making process, friend and colleague networks, and more. Now imagine if that digital assistant got to know you so well that you became completely reliant on it to help you plan your life. Eventually, you might see such a productivity tool evolve into a trustworthy agent and friend, one you might even come to rely on emotionally.

Another wedge could be a journaling app that occasionally responds with appropriate encouragement and relevant questions as you share your innermost thoughts with it. This way, a one-way engagement can become a conversation that evolves into a two-way relationship with… a companion chatbot. Or, instead of a journaling app, imagine a calendaring assistant that comments on your day and gives you a prompt, and a safe place, to let off some steam.

There are many ways one could go about designing a companion chatbot (and as we’ve pointed out above, it doesn’t even have to launch as a stand-alone chatbot; it can grow into one from a different wedge). The winners in this space will be the ones who best understand their end users and can provide them with a safe, low-friction environment where they can be their unguarded, honest selves. Does this mean our best friends of the future, the ones we share our deepest secrets, goals, and fears with, will be bots? It’s certainly possible.